
“That went really dark, fast” has become a catchphrase and meme online, but for a lot of people, it’s simply the reality of the internet. We’re talking about the bullied: people who are attacked for no other reason than existing online.

Bullies are poison to people and to digital platforms.

Every day, millions of people navigate social spaces designed for connection and sharing, yet these spaces can quickly become battlegrounds of harassment. This challenge demands a proactive approach from those who oversee digital platforms: safeguarding users of all ages and nurturing an environment where positive interactions thrive.

So, let’s dive into the deep (and dark) end of the pool.

Cyberbullying has become a ubiquitous threat in our virtual communities, relentlessly targeting teenagers and adults alike for their beliefs, appearance, or other reasons.

This rise in digital harassment isn’t just alarming; it’s a clarion call for action. As a society, we are at a crossroads where the need to protect individuals online is more pressing than ever.

Businesses and platforms offering user-generated content play a key role in keeping users safe from cyberbullying. By understanding the nature of cyberbullying, acknowledging its impact, and implementing robust prevention strategies, we can create online environments where respect and safety are the norm, not the exception.

What is cyberbullying?

We often encounter the question, “What exactly is cyberbullying?” While many recognize it when they see it, articulating a clear definition can be challenging. The Cyberbullying Research Center defines cyberbullying as:

“Willful and repeated harm inflicted through the use of computers, cell phones, and other electronic devices.”

The definition is deliberately simple, yet it captures the core elements of cyberbullying: the harm is willful (intentional), it is repeated, and it is carried out through electronic devices.

Cyberbullying statistics

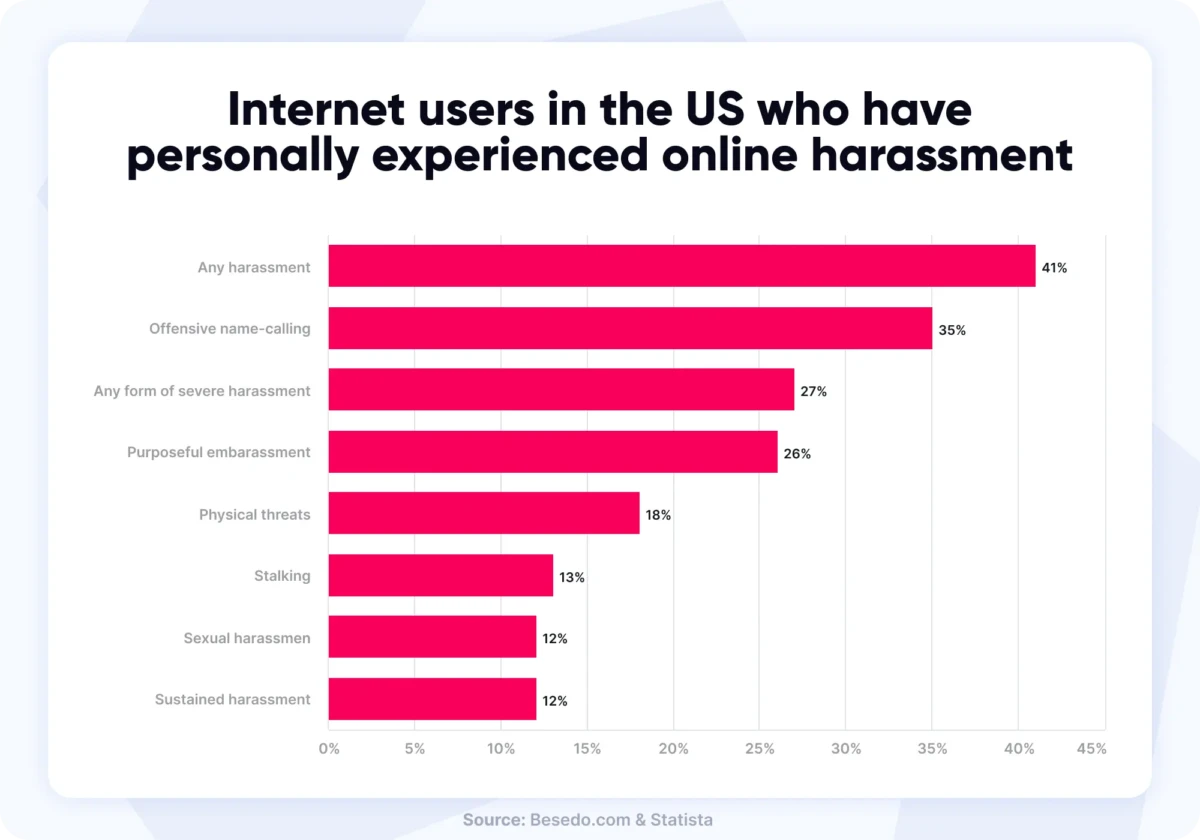

According to a study conducted by the Pew Research Center, approximately 41% of U.S. adults have personally experienced some form of online harassment, with 25% having experienced more severe forms of harassment.

Furthermore, another source suggests that nearly one in two internet users in the U.S. has encountered some form of online harassment, highlighting the significance of this issue.

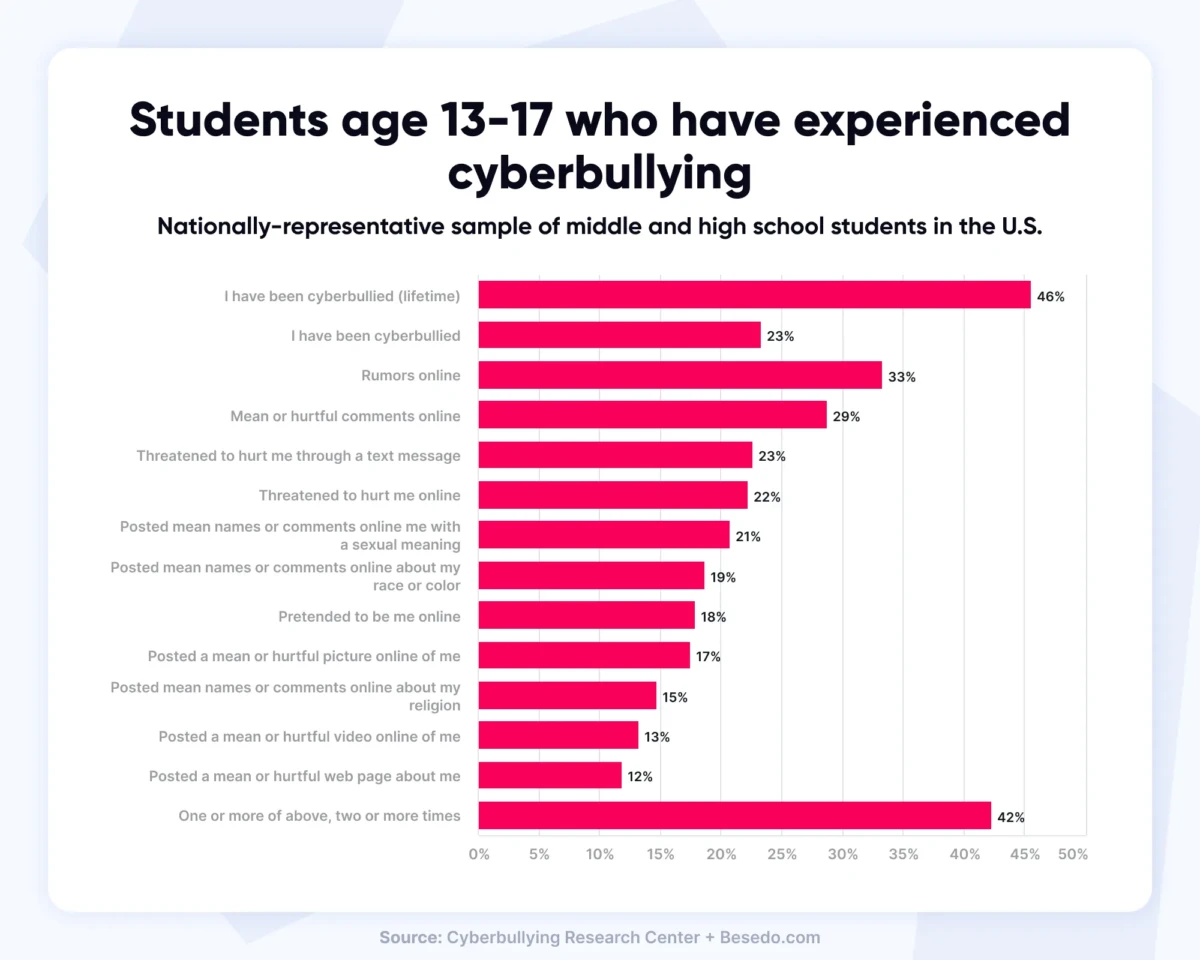

The prevalence of cyberbullying has escalated in recent years. Approximately half (46%) of all middle and high school students in America have experienced cyberbullying, with a significant spike during the COVID-19 pandemic lockdowns as online activity increased.

Various studies confirm how widespread cyberbullying is among students. The most common forms teens report are offensive name-calling, the spreading of false rumors, and receiving explicit images they did not ask for.

Interestingly, older teen girls, particularly those aged 15 to 17, are more likely than boys and younger teens to have experienced cyberbullying. They are also more likely to be targeted because of their physical appearance.

Cyberbullying and social media

Social media platforms are hotspots for cyberbullying. YouTube, Snapchat, TikTok, and Facebook are among the networks with the highest incidence of cyberbullying. Children on YouTube are most likely to be targeted, with a 79% likelihood.

Social media platforms, with their vast user bases and varied interaction methods, have unfortunately become fertile grounds for cyberbullying. This form of harassment manifests in several ways, exploiting the unique features of each platform to target individuals.

- Cyberbullying on Facebook and Twitter: These platforms are often used for spreading false rumors, offensive name-calling, and public shaming. Bullies may post derogatory comments on victims’ posts, send threatening private messages, or create fake profiles to harass individuals. Inflammatory tweets or posts can quickly gain traction, amplifying the hurtful content.

- Instagram and Snapchat Harassment: These image-centric platforms see cyberbullying in the form of body shaming, where users post negative comments on others’ photos. Additionally, the temporary nature of Snapchat messages can embolden bullies, as they feel their actions leave no permanent trace. Sharing embarrassing or private images without consent is another common form of harassment.

- YouTube Bullying: This platform sees bullying through hateful comments on videos, coordinated dislike campaigns, and even response videos made to mock or disparage others. Content creators, especially younger ones, can be particularly vulnerable to these attacks.

- TikTok and Cyberbullying: On TikTok, users might experience cyberbullying through duets or stitches (features that allow users to add their video to another user’s content) that are used to mock or ridicule the original post. Additionally, comments on TikTok videos can be a breeding ground for bullying and hateful speech.

- Gaming Platforms and Cyberbullying: Online multiplayer games, while not traditional social media platforms, also see their share of cyberbullying. This includes in-game harassment, voice or text chat abuse, and doxxing (publishing private information about someone).

In all these cases, the anonymity and distance provided by the internet can embolden bullies, making it easier for them to engage in harmful behavior without immediate consequences. Cyberbullying on social media is particularly insidious because it can follow victims into their personal spaces, leaving them feeling vulnerable and exposed even in their own homes.

Cyberbullying on Facebook

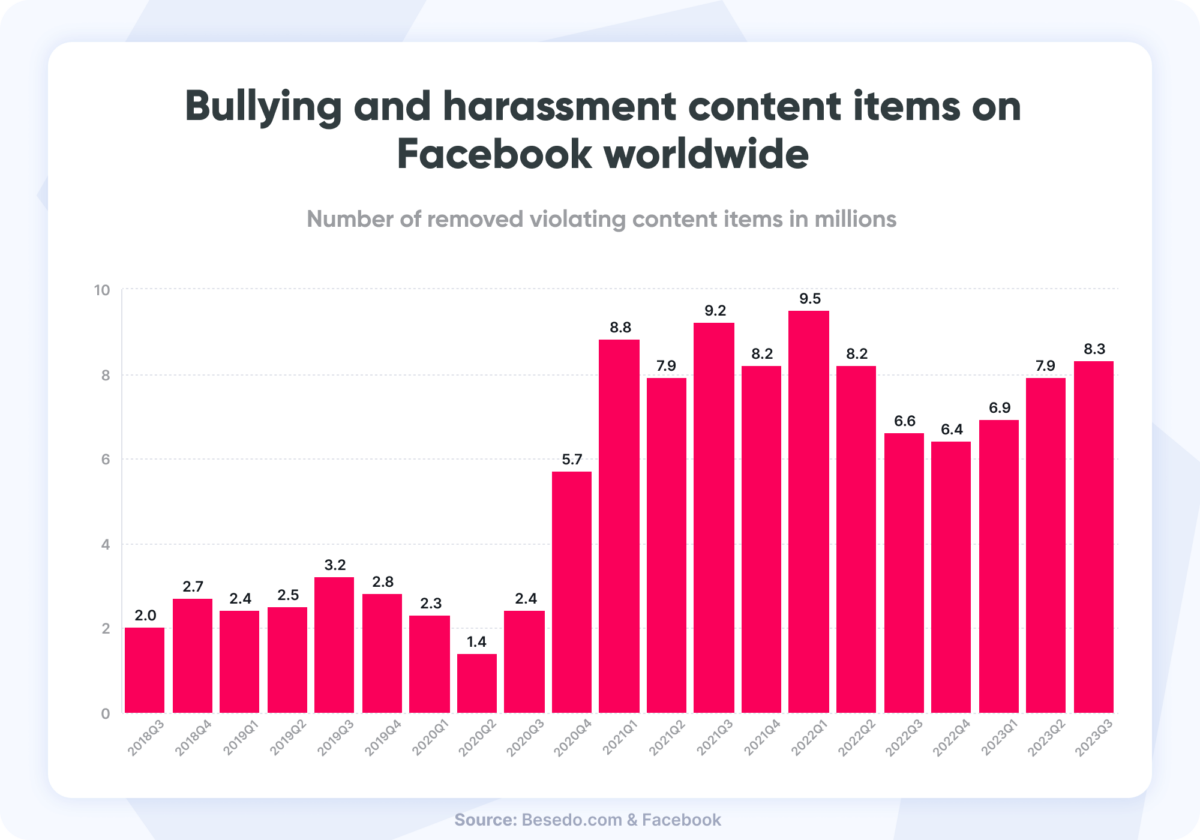

Using data from Facebook’s Community Standards Enforcement Report, we created a graph showing the bullying and harassment content items Facebook actioned worldwide from 2018 to 2023.

In Q3 2023, Facebook stepped up its crackdown on online bullying, taking action on 8.3 million pieces of bullying and harassment content, up from 7.9 million the previous quarter. That is the highest quarterly figure since the record-setting first quarter of 2022 and underlines the platform’s ongoing efforts to create a safer online environment.

These figures cover bullying and harassment only; they don’t include other forms of unwanted content, such as hate speech.
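For a sense of scale, the quarter-over-quarter increase in the Facebook figures works out to roughly 5%:

```latex
\frac{8.3\,\text{M} - 7.9\,\text{M}}{7.9\,\text{M}} = \frac{0.4}{7.9} \approx 0.051 \approx 5.1\%
```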

Other examples of cyberbullying

Examples of cyberbullying include spreading false rumors, sending unsolicited explicit images, and making physical threats. These actions can have profound psychological effects on victims, impacting their self-esteem, social interactions, and overall well-being.

How to prevent cyberbullying

For businesses and platforms that offer user-generated content (UGC), preventing cyberbullying is not just a moral responsibility but also essential for maintaining a positive brand image and user experience. Here are some strategies that can be effectively implemented:

- Implement Robust Community Guidelines: Clearly define what constitutes acceptable behavior on the platform. Community guidelines should explicitly prohibit cyberbullying, harassment, and any form of abusive behavior. Make these guidelines easily accessible and ensure they are enforced consistently.

- Develop Efficient Reporting Systems: Provide users with a straightforward, anonymous way to report cyberbullying and harassment. Make sure that the reporting process is user-friendly and inform users about what happens after they make a report.

- Utilize Advanced Content Moderation Tools: Implement automated content moderation tools to quickly identify and flag potentially harmful content. These tools use algorithms to scan for keywords, phrases, and patterns associated with bullying and harassment.

- Involve Human Moderators: Automated tools are efficient but can’t catch everything. Employ trained human moderators who understand context and nuances, making informed decisions about content that may not be overtly abusive but still harmful.

- Educate Users: Regularly inform and educate your user base about the importance of respectful communication. This could be done through pop-up reminders, educational content, or community events focusing on digital citizenship and empathy.

- Promote a Positive Community Culture: Foster an online environment encouraging positive interactions. Highlight and reward constructive and supportive user behavior to set a standard for community conduct.

- Regularly Update Moderation Practices: Stay updated with the latest trends in online behavior and continuously refine your moderation strategies. Periodically review and update your community guidelines and moderation tools to adapt to new forms of cyberbullying.
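To make the automated-tools and reporting points more concrete, here is a minimal Python sketch of how keyword and pattern scanning might feed a review queue alongside anonymous user reports. Everything in it is an assumption for illustration: the pattern list, the `Flag` and `ModerationQueue` names, and the naive priority rule (one point per pattern match, one per report). It is not Besedo’s implementation or any product’s API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately tiny pattern list for illustration only.
# Real lists are much larger, language-specific, and updated as slang evolves.
FLAG_PATTERNS = [
    re.compile(r"\bkill\s+yourself\b", re.IGNORECASE),
    re.compile(r"\bnobody\s+likes\s+you\b", re.IGNORECASE),
    re.compile(r"\b(ugly|loser|freak)\b", re.IGNORECASE),
]


@dataclass
class Flag:
    message_id: int
    reasons: list[str] = field(default_factory=list)  # patterns that matched
    user_reports: int = 0  # number of reports received for this message

    @property
    def priority(self) -> int:
        # Naive rule: each pattern match and each user report adds one point.
        return len(self.reasons) + self.user_reports


def scan(text: str) -> list[str]:
    """Return the source of every pattern that matches the text."""
    return [p.pattern for p in FLAG_PATTERNS if p.search(text)]


class ModerationQueue:
    """Collects automated flags and user reports for human moderators."""

    def __init__(self) -> None:
        self.items: dict[int, Flag] = {}

    def _get(self, message_id: int) -> Flag:
        return self.items.setdefault(message_id, Flag(message_id))

    def auto_scan(self, message_id: int, text: str) -> None:
        reasons = scan(text)
        if reasons:  # only messages with at least one match are queued
            self._get(message_id).reasons.extend(reasons)

    def report(self, message_id: int) -> None:
        # Only a count is stored, so the reporter's identity is never recorded.
        self._get(message_id).user_reports += 1

    def review_order(self) -> list[int]:
        """Queued message IDs, highest priority first."""
        ranked = sorted(self.items.values(), key=lambda f: f.priority, reverse=True)
        return [f.message_id for f in ranked]


queue = ModerationQueue()
queue.auto_scan(1, "you're such a loser, nobody likes you")  # two pattern matches
queue.auto_scan(2, "gg, nice shot")                          # no match, not queued
for _ in range(3):
    queue.report(3)                                          # three user reports
print(queue.review_order())  # [3, 1]
```

Plain pattern matching has no sense of context, which is why matched messages go to a queue for human moderators rather than being removed outright.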

Content moderation and cyberbullying

Content moderation is vital in the fight against cyberbullying, serving as a cornerstone for safer and more engaging online communities. By identifying and removing harmful content, these systems create a welcoming environment for users and enhance the overall user experience. This fosters increased engagement and loyalty, which is crucial for the growth and success of digital platforms. Moreover, effective moderation protects a platform’s brand reputation, ensuring compliance with legal standards and promoting a positive public image.

Additionally, content moderation offers invaluable insights into user behavior and cyberbullying trends, aiding in the continuous improvement of safety strategies. It plays a significant role in encouraging diversity and inclusion by reducing harmful content and fostering a respectful, inclusive community.

Investing in content moderation is not just a step towards combating cyberbullying but a strategic move to enhance the platform’s quality and appeal to a broader audience.

Besedo: Distinguishing bullying from banter

Differentiating between bullying and banter is a complex task for content moderation companies, particularly in environments like online gaming, where playful teasing is typical. When another online gamer shouts, “I’m gonna kill you!” it doesn’t mean they will show up at your doorstep with malicious intent. Most likely, it’s part of a first-person shooter or a similar game.

The key to making this distinction often lies in advanced Natural Language Processing (NLP), a branch of artificial intelligence (AI) focused on the interaction between computers and human language. We have covered this extensively on our engineering blog, so here is only a brief look at how NLP and other technologies are used:

- Contextual Analysis: NLP algorithms are designed to understand the context in which words or phrases are used. This means they can analyze the conversation flow to determine whether a comment is part of friendly banter or crosses the line into bullying. For example, mutual exchanges of jokes among friends are likely banter, while targeted, one-sided, aggressive comments are more indicative of bullying.

- Sentiment Analysis: This involves evaluating the emotional tone behind words. NLP algorithms can assess whether a message carries a negative, positive, or neutral sentiment. Comments that consistently exhibit negative sentiments, especially when directed towards a specific individual, may be flagged as potential bullying.

- Pattern Recognition: AI systems can identify patterns that are commonly associated with bullying, such as repeated harassment, use of certain derogatory terms, or escalating aggression in messages. By recognizing these patterns, the system can differentiate them from isolated instances of playful teasing.

- Machine Learning: Over time, AI systems learn from a large dataset of labeled examples of both bullying and banter. This continuous learning process makes the algorithms more adept at making accurate distinctions.

- User Relationship Analysis: Some content moderation systems consider the relationship between users. For example, a history of positive interactions between two users might suggest that a seemingly harsh comment is actually friendly banter.

- User Feedback and Reporting: Incorporating feedback from users is crucial. When a user reports a message as bullying, that report gives the AI system valuable data to learn from, helping it adjust its criteria for what constitutes bullying in the future.
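The Machine Learning and User Feedback points can be illustrated with a classic technique, a multinomial Naive Bayes text classifier. The minimal Python sketch below trains on a handful of labeled messages and then learns from one reported message. The class name, labels, and training data are hypothetical and invented for illustration; production systems train on far larger datasets and typically use more sophisticated models.

```python
import math
import re
from collections import Counter

LABELS = ("bullying", "banter")


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters and apostrophes, so "I'm" stays one token.
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()

    def learn(self, text: str, label: str) -> None:
        """Add one labeled example; used both for training and for feedback."""
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def predict(self, text: str) -> str:
        tokens = tokenize(text)
        vocab = set(tokens).union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in LABELS:
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Smoothed log prior, so a label with no examples yet cannot break log().
            score = math.log((self.doc_counts[label] + 1) / (total_docs + len(LABELS)))
            for word in tokens:
                # Smoothed log likelihood of each word under this label.
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Hypothetical toy training data; a real system needs thousands of labeled examples.
TRAINING = [
    ("nobody likes you, just quit", "bullying"),
    ("you are ugly and stupid", "bullying"),
    ("everyone at school hates you", "bullying"),
    ("gg, I'm gonna get you next round", "banter"),
    ("nice shot, you lucky noob", "banter"),
    ("rematch? I'll carry you this time", "banter"),
]

model = NaiveBayesModerator()
for text, label in TRAINING:
    model.learn(text, label)

print(model.predict("I'm gonna kill you"))  # banter
print(model.predict("you lucky freak"))     # banter
model.learn("what a freak", "bullying")     # a user report, confirmed as bullying
print(model.predict("you lucky freak"))     # bullying
```

With this toy data, “I’m gonna kill you” comes out as banter only because its other words resemble the gaming examples, not because the model understands intent. That limitation is why contextual signals and human review still matter.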

A customized solution

However, it’s important to note that AI and NLP aren’t foolproof and can sometimes misinterpret nuances and cultural differences in language. That’s why many content moderation systems combine AI with human oversight. Human moderators can review flagged content to make final judgments based on cultural context, subtleties of language, and an understanding of social norms that AI might miss.
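One common way to combine AI with human oversight is confidence-based routing: the system acts on its own only when it is very sure, and sends the uncertain middle to human moderators. The Python sketch below also folds in some of the contextual signals described earlier (mutual exchanges, relationship history, repetition, user reports). The `Signals` fields, weights, and thresholds are hypothetical values chosen for illustration, not tuned or taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical per-message signals; names and weights are illustrative only."""
    model_score: float           # classifier's estimate (0..1) that this is bullying
    reply_in_kind: bool          # the recipient answered in the same joking tone
    positive_history: int        # earlier friendly interactions between the two users
    messages_to_target_24h: int  # how often the sender messaged this recipient today
    user_reports: int            # reports received for this message


def adjusted_score(s: Signals) -> float:
    score = s.model_score
    if s.reply_in_kind:
        score -= 0.15  # mutual exchanges lean toward banter
    if s.positive_history >= 5:
        score -= 0.10  # a friendly track record lowers the risk
    if s.messages_to_target_24h >= 10:
        score += 0.20  # repeated, one-sided contact leans toward harassment
    score += 0.10 * min(s.user_reports, 3)  # cap the influence of report floods
    return max(0.0, min(1.0, score))  # clamp to the 0..1 range


def route(s: Signals, remove_at: float = 0.9, review_at: float = 0.5) -> str:
    """Automate only the confident extremes; people handle the uncertain middle."""
    score = adjusted_score(s)
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "human_review"
    return "allow"


print(route(Signals(0.7, True, 12, 2, 0)))   # allow: mutual tone, friendly history
print(route(Signals(0.7, False, 0, 15, 2)))  # remove: repeated contact, reported
print(route(Signals(0.6, False, 0, 1, 0)))   # human_review: genuinely uncertain
```

Keeping the automatic-removal threshold high trades extra moderator workload for fewer wrongful removals of harmless banter; where to set it is a policy decision for each platform.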

It just so happens that it’s exactly what we do here at Besedo.

Besedo’s technology actively discerns between bullying and banter. By continuously updating and improving our algorithms, we ensure a nuanced understanding of online interactions, protecting users from harmful content while allowing for playful banter.

Where to turn if you are the victim of cyberbullying

Victims of cyberbullying should seek support from trusted adults, school authorities, or law enforcement. Organizations such as the National Crime Prevention Council in the U.S., or comparable bodies in other countries, provide guidance on dealing with cyberbullying and its effects.

Conclusion

Addressing cyberbullying is imperative for creating a safe online environment. Companies, especially social media platforms, play a critical role in this endeavor. Besedo’s content moderation solutions offer a robust approach to distinguishing harmful bullying from harmless banter, contributing significantly to the safety of digital communities.

Get in touch with us, and we can talk about a solution for your platform.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.