Efficiency and accuracy are two of the most valuable KPIs online marketplaces track to evaluate their manual moderation performance. The key to an optimized manual moderation team is finding the right balance between the two.

However, there is a pitfall: if you push your moderators too hard for efficiency, their accuracy can suffer over time, jeopardizing the quality of the content published on your marketplace. Low-quality content is then likely to slip through the cracks, threatening the reputation of your platform, damaging user trust, and exposing your users to risks that range from a poor user experience to more serious threats such as identity theft or scams.

For your online marketplace to succeed and to keep potential issues at bay, it’s imperative to provide your moderation team with the right moderation tools to help them be as efficient and accurate as possible.

At Besedo, we continually seek to improve our all-in-one content moderation tool, Implio, by adding features to ensure your content moderators perform at their best.

Whether it’s highlighting keywords, working with specialized moderation queues, or enabling quick links or warning messages, many of Implio’s features are designed to ease your moderators’ daily work and improve their overall performance.

Keyboard shortcuts – efficient manual moderation

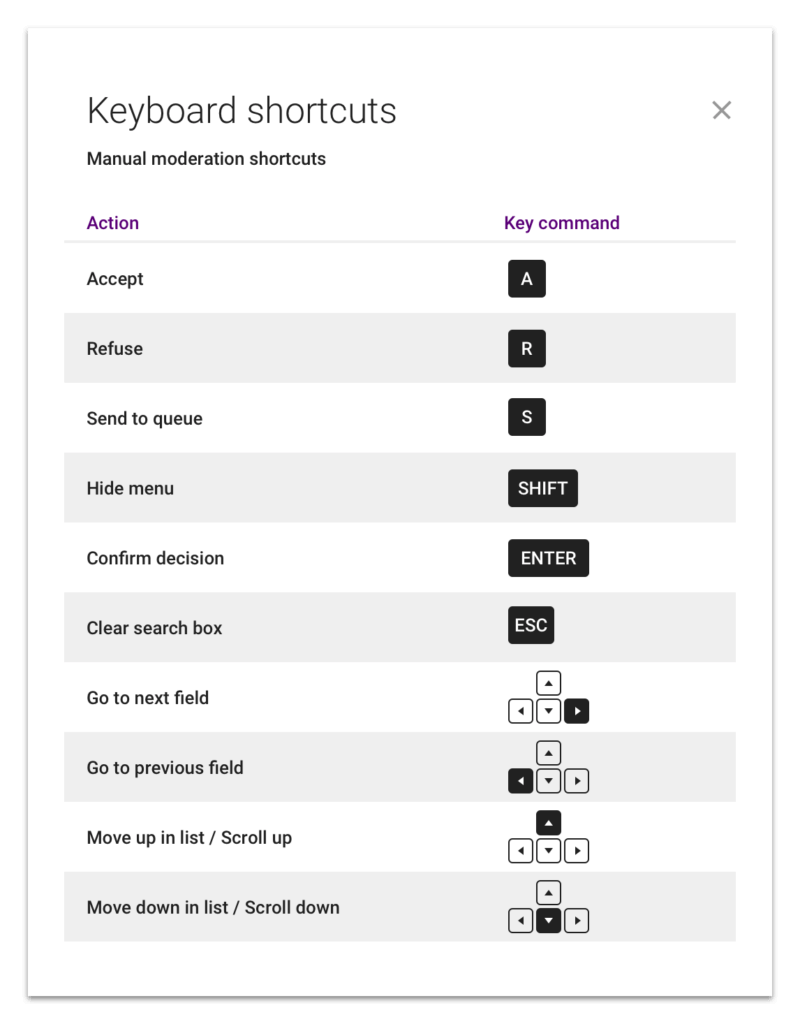

Implio’s brand-new feature, keyboard shortcuts, helps your moderators make decisions with a single keystroke and navigate through listings without leaving their keyboard, making manual moderation both efficient and accurate.

In our initial tests, keyboard shortcuts increased manual moderation efficiency by up to 40%, and we expect that number to rise as moderators grow more familiar with the feature.

Here’s how the keyboard shortcuts work:

Ready to improve your moderation efficiency?

Get in touch with a content moderation expert today, or try Implio for free.