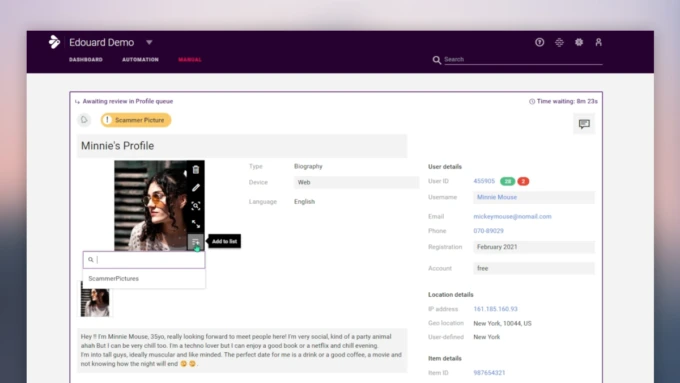

Outsmart Scammers with Implio’s Smart Lists

Simplify image moderation with Implio’s Smart Lists. Quickly flag and block altered images to outsmart scammers and protect your platform.

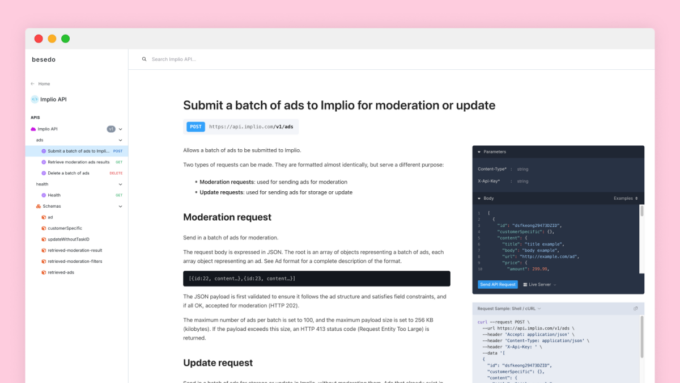

Introducing Our New and Improved API Documentation

Explore our newly updated API documentation to seamlessly integrate Besedo's powerful content moderation tools into your platform.

Introducing Besedo’s New Search Query Builder

Discover Besedo’s new Search Query Builder, designed to simplify content moderation. Quickly filter moderation decisions, find refusal reasons, and track actions by agents.

Announcing Our Reporting Feature: Download and Visualize Your Data

Announcing Besedo reporting! Download and import your data into your favorite business intelligence tool to create all sorts of graphs, charts, and data magic.

New Implio Feature: Introducing Video and Audio Content Moderation Capabilities

New Implio Feature: Moderation Notes

New Implio Feature: Misoriented Pictures Added to Image Vision Modules

New Implio Feature: Quick Add to List