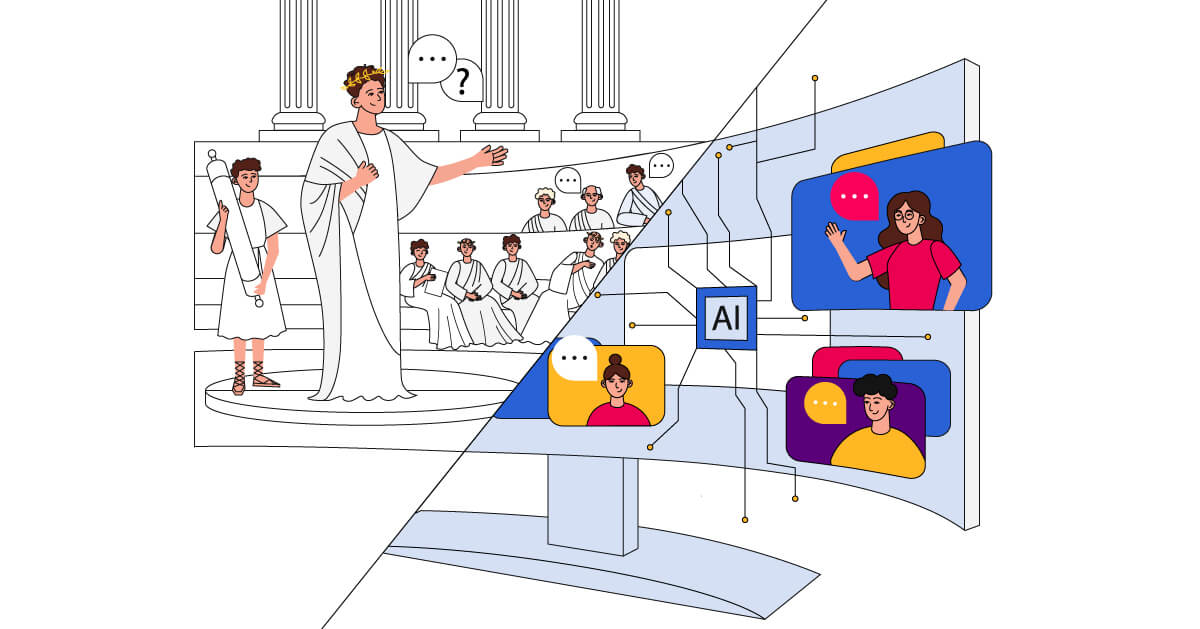

Mention the word ‘forum’ to someone today, and their first thought will almost certainly be of an internet forum, with its members discussing anything from astrophysics to finding cheap offers in supermarkets. It’s a way of using the internet that goes back to the network’s earliest days: even before the World Wide Web, early adopters talked and argued through services like USENET. And while much online conversation has since moved to social media and other spaces, it’s fair to say that forums laid the groundwork for those platforms, establishing expectations about how online interaction works.

It’s easy, then, to talk and think about forums without being conscious of the word’s original meaning. In ancient Rome, a forum was the public square at the center of a town. Established principally as a place for people to buy and sell goods, the forum became the heart of Roman social life: a place to meet, chat, and debate. Today, the Roman forum is popularly remembered as a stage for political and philosophical discussion, or, just as often, argument.

Moderation through the ages

Just as behavior in a Roman forum would have been kept in check by social norms and peer pressure, early online forums relied on individual volunteers, granted special privileges, to maintain safety and civility.

Of course, the speed and scale of online conversation soon outstripped what individuals could keep up with, and more formal solutions had to be found. Professional moderation teams are now common, and meeting the challenge has also required automated moderation: first word filters and, more recently, AI-based approaches, an always-on solution to an always-on problem.

What does it mean to be always on for online speech? These systems don’t just need to oversee speech; they also need to learn and adapt to keep pace with the way language, especially on the internet, evolves. ‘Forum,’ after all, is not the only word whose meaning has been changed by the digital age: one of the remarkable things about the internet is the speed at which it generates new words and new meanings for old ones.

The crux of the problem is that, while automated systems tend to sort the world into neat boxes, such as ‘acceptable’ and ‘unacceptable’ speech, language is fundamentally ambiguous.

For instance, going by its dictionary definition, the word ‘dead’ is a fairly unambiguously negative piece of speech. Posted as a reply to a joke, however, it (or a skull emoji) is an exaggerated way of saying that the user found the joke hilarious. This kind of ambiguity is also rife in online gaming, where ‘gg,’ meaning ‘good game,’ serves as a virtual handshake with one’s opponent. After a particularly one-sided match, though, the same ‘gg’ can be deeply antagonistic.
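To make the problem concrete, here is a minimal sketch of a context-blind word filter. It is not any particular product’s implementation. The blocklist and the function name `naive_filter` are hypothetical. The filter looks only at the words in a message and never at what the message is replying to, so it flags the harmless ‘dead’ and lets the antagonistic ‘gg’ through.

```python
# Hypothetical blocklist for illustration only: a context-blind filter flags
# a message if any of its words appears here, whatever the surrounding context.
BLOCKLIST = {"dead"}


def naive_filter(message: str) -> bool:
    """Return True if a context-blind word filter would flag the message."""
    # Lowercase, split on whitespace, and strip simple punctuation so that
    # "Dead!" still matches "dead".
    words = (word.strip(".,!?") for word in message.lower().split())
    return any(word in BLOCKLIST for word in words)


# Posted under a joke, "dead" means "hilarious", but the filter flags it.
print(naive_filter("dead"))  # True: a false positive
# Posted after a one-sided match, "gg" can be a taunt, but it passes.
print(naive_filter("gg"))    # False: a false negative
```

Adding ‘gg’ to the blocklist would not help, because the filter would then also block every sincere ‘good game’. Both errors come from the same source: the filter judges each message without its context.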

These are relatively light examples, but the same pattern appears in the darkest areas of online speech. Hate groups that know moderators are trying to keep them out of communities frequently change the coded language they use, in order to disguise their intent.

The next frontier

All of this happens against a backdrop of activity that, across the internet, amounts to billions, if not trillions, of interactions every day. In edge cases like these, content can be too new and variable for AI-powered systems to handle reliably, and too voluminous for human moderators to keep up with.

As we look toward a healthier future for online content and its moderation, this is a problem the industry as a whole will need to take seriously and work on. Historically, businesses have tended to be over-cautious, preferring to block safe speech rather than risk allowing unsafe speech. However, the tide is turning, and we think the next frontier for content moderation will be a consultative approach that leads to more nuanced solutions.

As we talk to customers, we’re always keen to learn more about how their users use language, where it slips through the cracks of the systems we’re building, and what insight they would need to manage user interactions more effectively. If you’d like to join the conversation, we’d love to hear from you, too.

In the meantime, the most effective approaches will draw on the full content moderation toolbox, applying word filters, AI oversight, and human intervention wherever each one’s strengths have the most impact. As we do so, we are building the insight, expertise, and experience to deliver an approach to content moderation that is fully alive to how language evolves.
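One way to picture this toolbox is as a layered routing step. The sketch below illustrates that general idea and is not a description of any specific system. Everything in it is hypothetical and assumed for illustration: the placeholder blocklist term, the two thresholds, the `route` function, and a `model_score` assumed to come from some AI classifier (not shown) that estimates how likely a message is to be unacceptable. Word filters handle only unambiguous terms, the model acts on its own only when it is confident, and uncertain cases go to a human.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; real thresholds would be tuned per community.
AUTO_REMOVE_THRESHOLD = 0.9
HUMAN_REVIEW_THRESHOLD = 0.5

# Hypothetical placeholder for terms that are unacceptable in any context.
BLOCKLIST = {"exampleslur"}


@dataclass
class Decision:
    action: str  # "remove", "review", or "allow"
    reason: str


def route(message: str, model_score: float) -> Decision:
    """Route a message through a word filter, then an AI score, then human review.

    `model_score` is assumed to be a classifier's estimated probability
    (0.0 to 1.0) that the message is unacceptable.
    """
    words = {word.strip(".,!?") for word in message.lower().split()}
    # 1. Word filter: cheap and exact, reserved for unambiguous terms.
    if words & BLOCKLIST:
        return Decision("remove", "blocklisted term")
    # 2. AI oversight: act automatically only when the model is confident.
    if model_score >= AUTO_REMOVE_THRESHOLD:
        return Decision("remove", "high model score")
    # 3. Human intervention: ambiguous cases go to a moderator queue.
    if model_score >= HUMAN_REVIEW_THRESHOLD:
        return Decision("review", "uncertain model score")
    return Decision("allow", "low model score")


# A "gg" the model is unsure about is sent to a human rather than auto-removed.
print(route("gg", model_score=0.62))
print(route("nice shot", model_score=0.05))
```

The key design choice is the middle band between the two thresholds. Rather than forcing every message into ‘acceptable’ or ‘unacceptable’, it keeps ambiguous speech for human judgment.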