Trustworthy interactions for confident sharing

The future is shared. From rides to residences, the Sharing Economy thrives on mutual trust.

Sharing is more than a transaction; it’s an experience. With Besedo by your side, ensure every interaction on your platform radiates trust and authenticity. Elevate the essence of sharing, making every connection safe, genuine, and memorable.

We’re here to ensure that every share, swap, and sale on your platform is authentic and safe.

Elevate sharing. Simplify trust.

User trust isn’t a luxury; it’s your growth engine. Protect Personally Identifiable Information (PII) so that platform leakage remains a non-issue. Streamline secure payments, cutting friction while bolstering confidence.

And when it comes to user reviews? Authenticity wins. Prioritize real feedback from genuine users.

Make every shared interaction a step towards optimized growth.

“We’ve improved moderation accuracy by over 90%, the number of items detected by 600%, and reduced the manual review time by 17%”

— Jimin Lee, Director of Trust & Safety

Sharing and caring

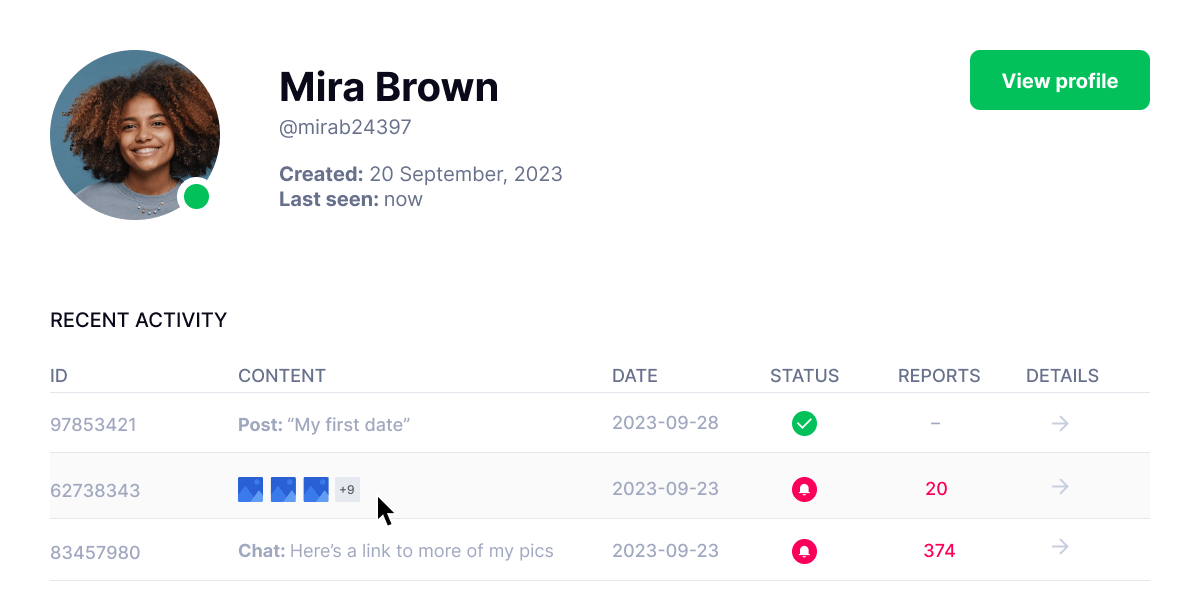

Protecting your user data and fostering respectful interactions take center stage. Besedo customers implement machine learning models and filters to identify, flag, and remove harmful content and profiles instantly.

Choose from pre-built filters or train your Besedo AI to detect unwanted users and behaviors. Detect nudity, remove hate speech, flag underage users, and much more.


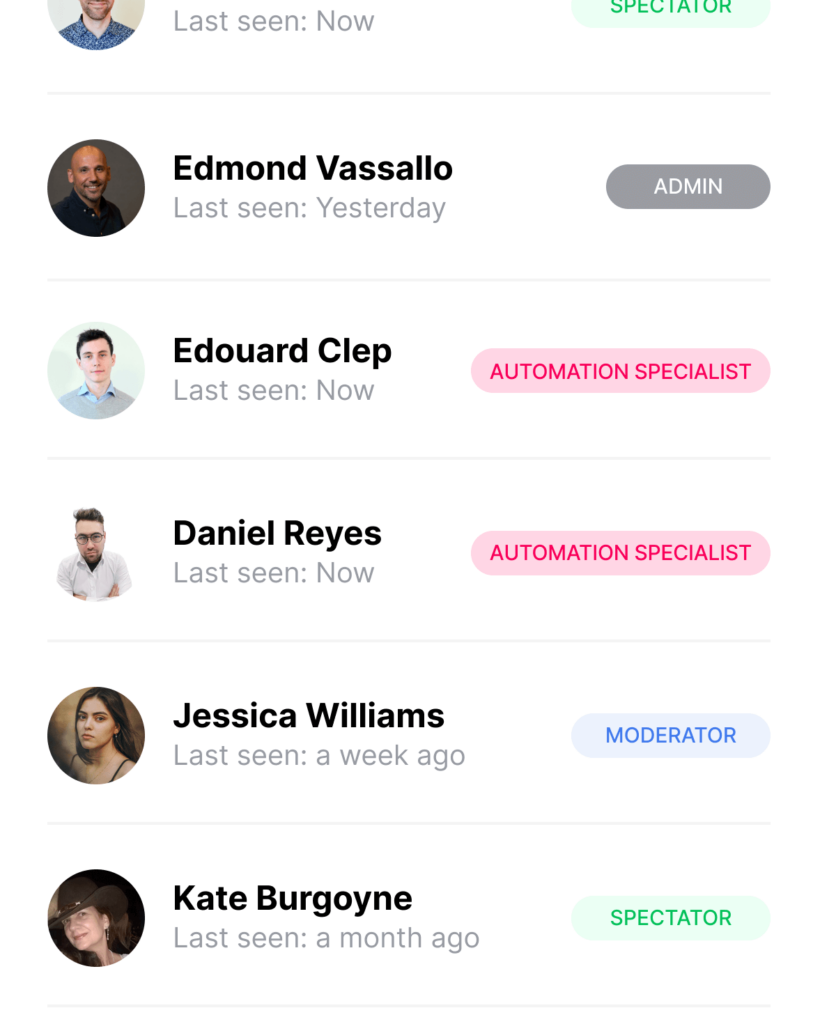

Teamwork makes the dream work

Set up individual accounts for your entire team so you can collaborate. Forget tinfoil hats – we’re in the business of no-nonsense security.

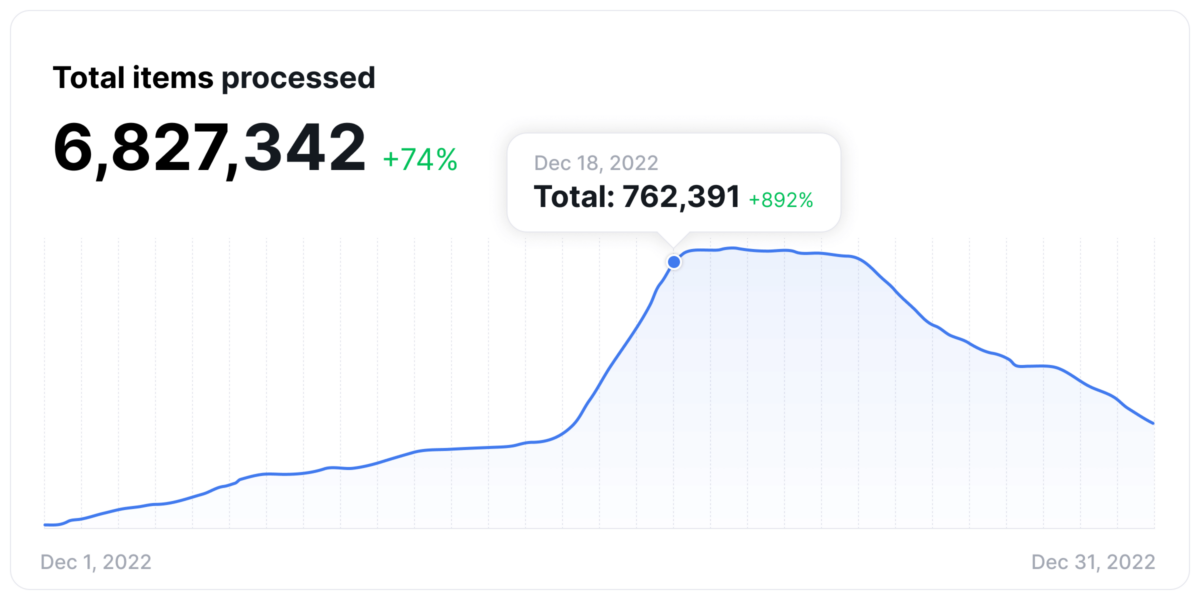

Content moderation that scales with you

Besedo scales effortlessly with traffic spikes on your platform so you can handle even the most challenging times.

No need to worry about staffing to make sure your platform is moderated in real time.

Everything has been taken care of.

Trusted by some of the world’s most innovative companies

Make your Sharing Economy site safer

You shouldn’t have to worry about platform leakage or fraudulent transactions. Content moderation from Besedo sits as a layer between your platform and the end user to secure the experience with your service. Make every shared interaction a step towards optimized growth.

Prevent platform leakage

Take action when users leave your platform for risky third-party apps. Keep them safe by ensuring the transaction is carried out on your site.

Genuine reviews matter the most

27% of people will trust reviews – but only if they believe them to be authentic. Safeguard your site from fake reviews to avoid user churn.

Prevent fraud and scams

Guard your marketplace reputation from scammers and bad actors. Use Besedo’s state-of-the-art specialist fraud prevention tools and filters.


- Powerful customized solutions combining AI & humans

- Unrivalled innovation based on over 20 years of experience

- Global support and multilingual moderation

- Easy API integration through RESTful HTTP

- User-friendly and powerful control panel