Sportsmanlike conduct for the next generation of competitive gaming

We keep players safe and secure, and we use a quality player experience to drive retention.

Behind the thrill of the game lurks the shadow of online toxicity—hate speech, threats, and doxxing that can sour the experience.

Ensure that gaming stays fun with carefully tailored moderation that makes every player feel welcome and safe.

A first-class gaming experience

Creating an online game is not just about coding, sound, and graphics; it’s about crafting an experience, an ambiance, an escape. But that escape can be spoiled by unwelcome voices echoing from places like in-game chat, Discord, Reddit, and beyond.

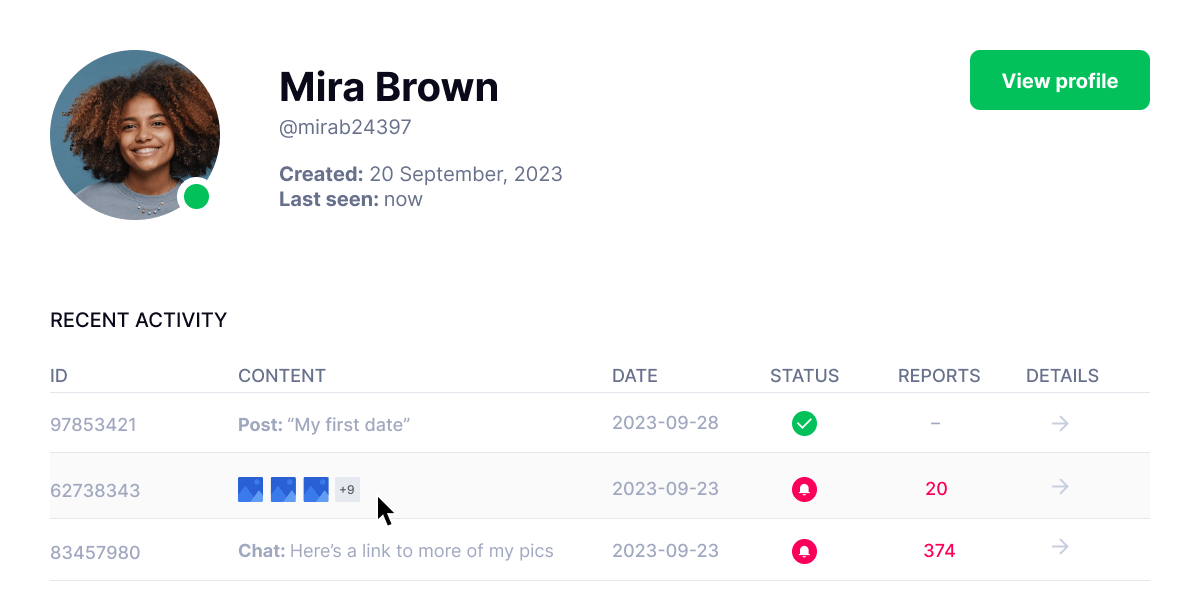

Our tools help you swiftly identify, understand, and address disruptive users. Get metrics that trace these disruptions from their origins to their patterns within your game.

Like a metronome for your trust and safety.

“Besedo’s solution is customized to fit our needs exactly. I have never met a team more passionate about their product.”

— Markus Bähr, Director Customer Success

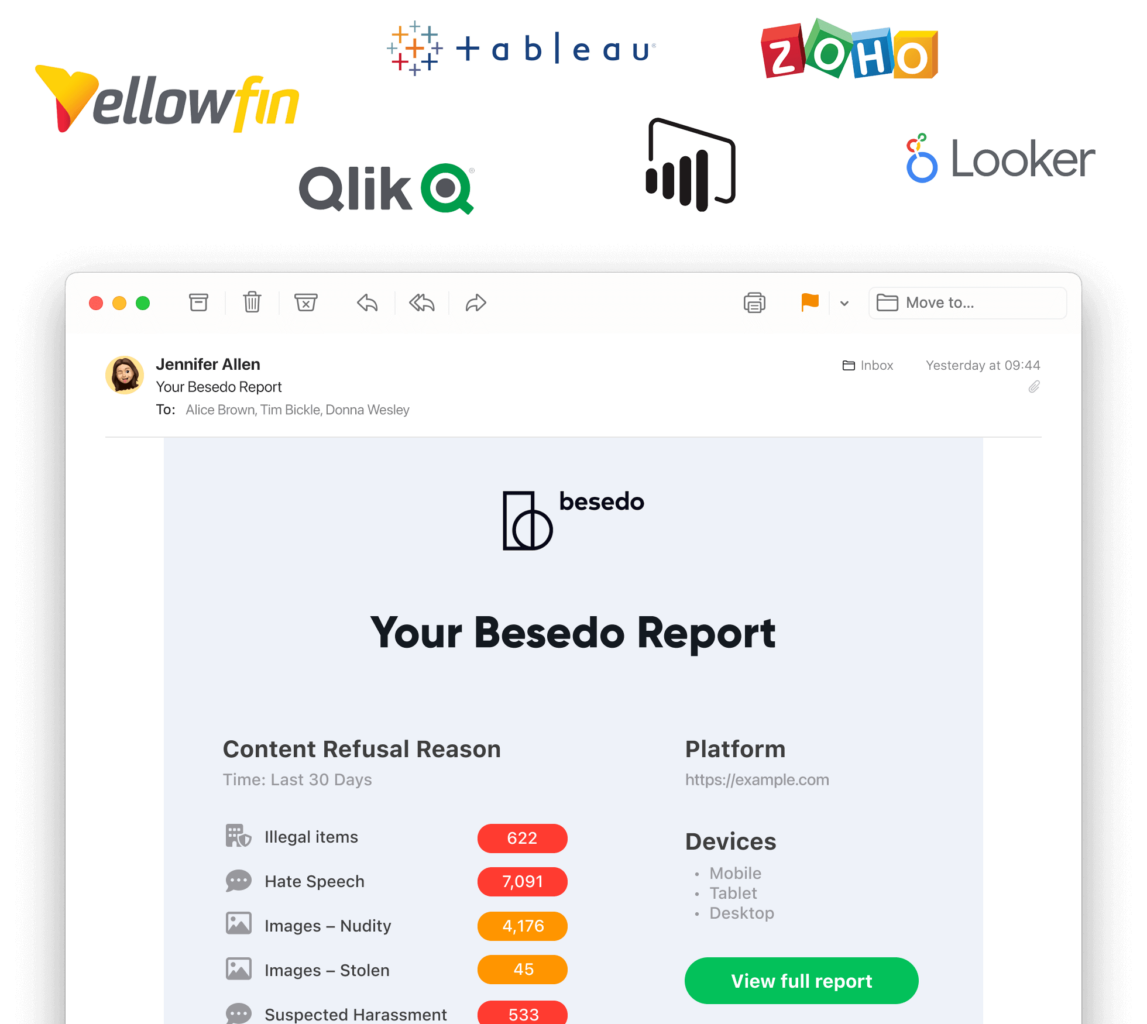

Get reports to your inbox

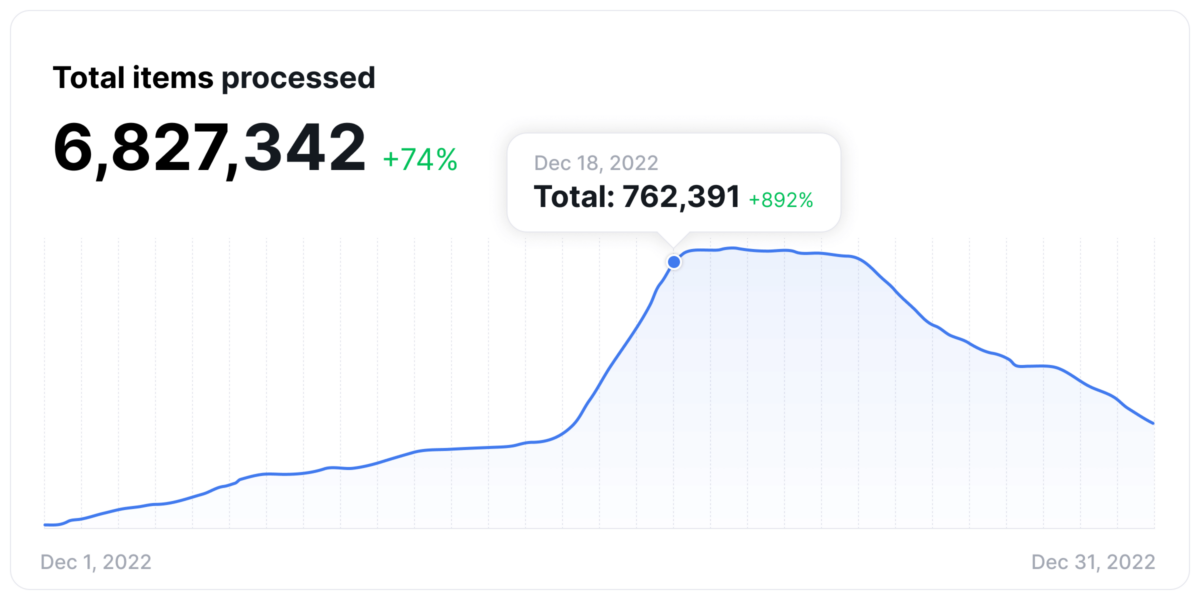

Whether your site or app has a little user-generated content or billions of items, insight makes a difference. Dive into a detailed analytics overview of all your user-generated content.

Import the reports into your favorite business intelligence tool to dig deeper into the data and build any graphs and visualizations you need.

Ready player 2, and 3, and…

Set up individual accounts for your entire team so you can collaborate. Forget tinfoil hats – we’re in the business of no-nonsense security.

A platform that scales with you

Besedo scales effortlessly with traffic spikes on your platform, so you can handle even the most challenging periods.

There is no need to worry about staffing to keep your platform moderated in real time.

Everything has been taken care of.

Trusted by some of the biggest gaming platforms in the world

Ensure users can enjoy your game to the fullest

Users love to chat in real time while they play. Make sure they have the best multiplayer experience with strangers and friends alike.

Add profanity filters

Profanity filters improve the experience of online gamers. By blocking profanities and inappropriate comments, they keep users happy and engaged.

Detect bullying and harassment

Our machine learning can distinguish friendly banter from real threats. Modern content moderation balances freedom and safety.

Catch scams and frauds

Our real-time moderation alerts you to any attempt by scammers to bypass your terms of service and defraud your users. Keep everyone safe and happy.


- Powerful customized solutions combining AI and human moderators

- Unrivaled innovation based on over 20 years of experience

- Global support and multilingual moderation

- Easy API integration through RESTful HTTP

- User-friendly and powerful control panel