At Besedo, we care deeply about trust, safety, and user experience. We surveyed 2,000 American gamers aged 18-45 to understand how toxicity impacts gaming. Our research highlights gamers’ critical challenges and demonstrates why gaming platforms need stronger moderation efforts.

Executive summary (TL;DR)

We surveyed 2,000 gamers in the United States to understand how toxicity impacts online multiplayer games. The results are clear and deeply concerning:

- 77.75% of players have experienced harassment while gaming online.

- 52% of women say they’ve stopped playing a game due to harassment or a toxic community.

- 85.25% believe platforms should actively moderate hate symbols, slurs, and explicit content.

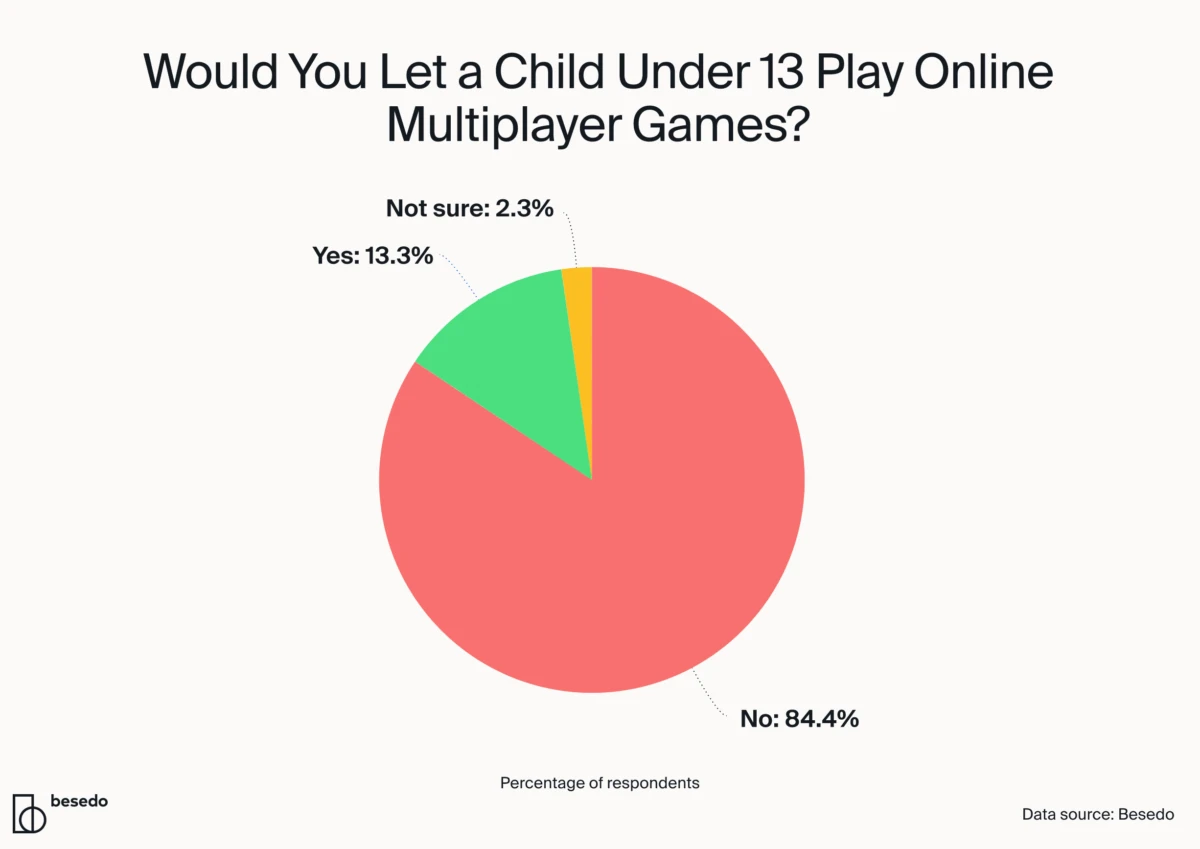

- 84.4% of respondents would not let a child under 13 play online multiplayer games.

- 58.8% of players mute or block toxic users. Nearly 30% avoid certain platforms altogether.

Harassment is normal in gaming (and players are fed up)

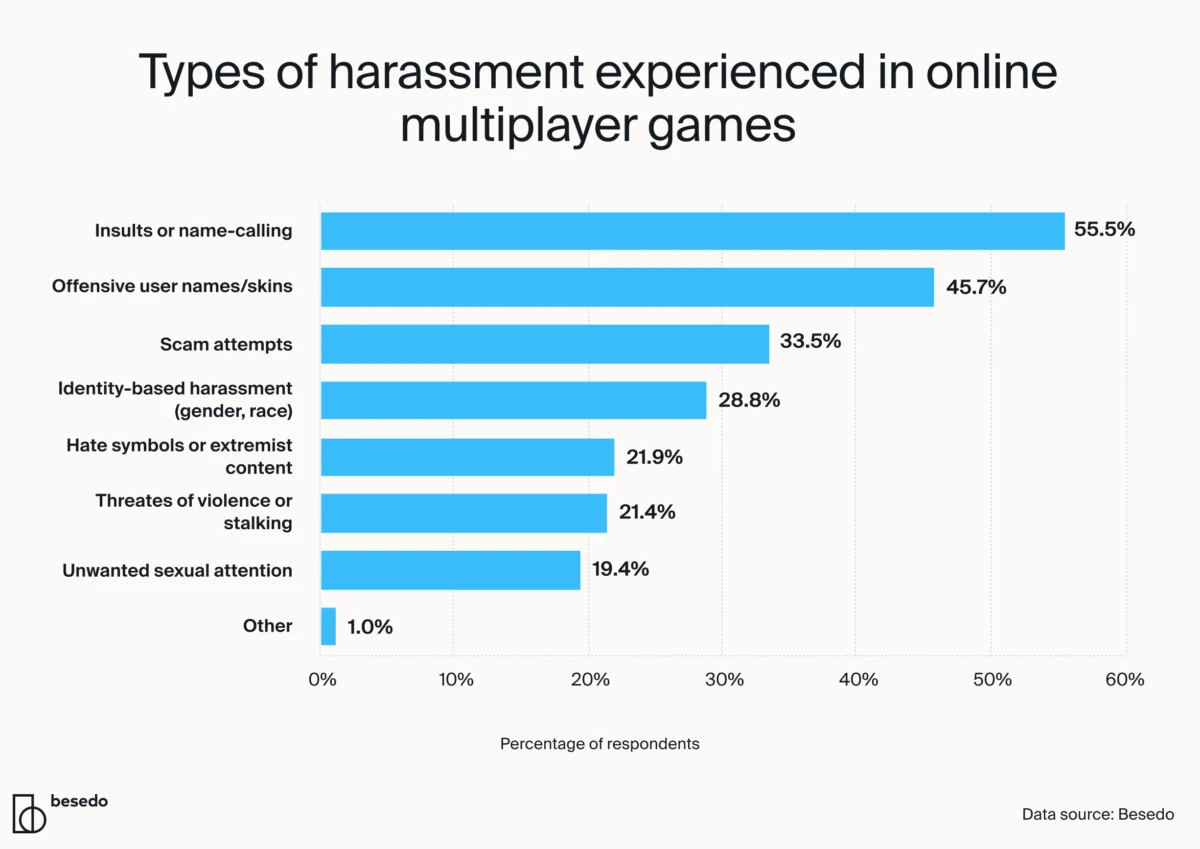

Our survey revealed that nearly 78% of gamers have experienced some form of harassment online. That means almost 8 in 10 gamers have encountered insults, identity-based harassment, unwanted sexual attention, threats, or offensive content such as usernames and player skins.

Sadly, this finding isn’t unique. According to the Anti-Defamation League’s 2023 report, 76% of adult gamers reported similar harassment experiences. As the Anti-Defamation League grimly noted, “normalized harassment and desensitization to hate frame the reality” of online gaming today. In other words, abuse has become expected – even “normal” – in many game communities, a status quo that poses serious consequences for players and the industry.

Women face severe challenges in online games

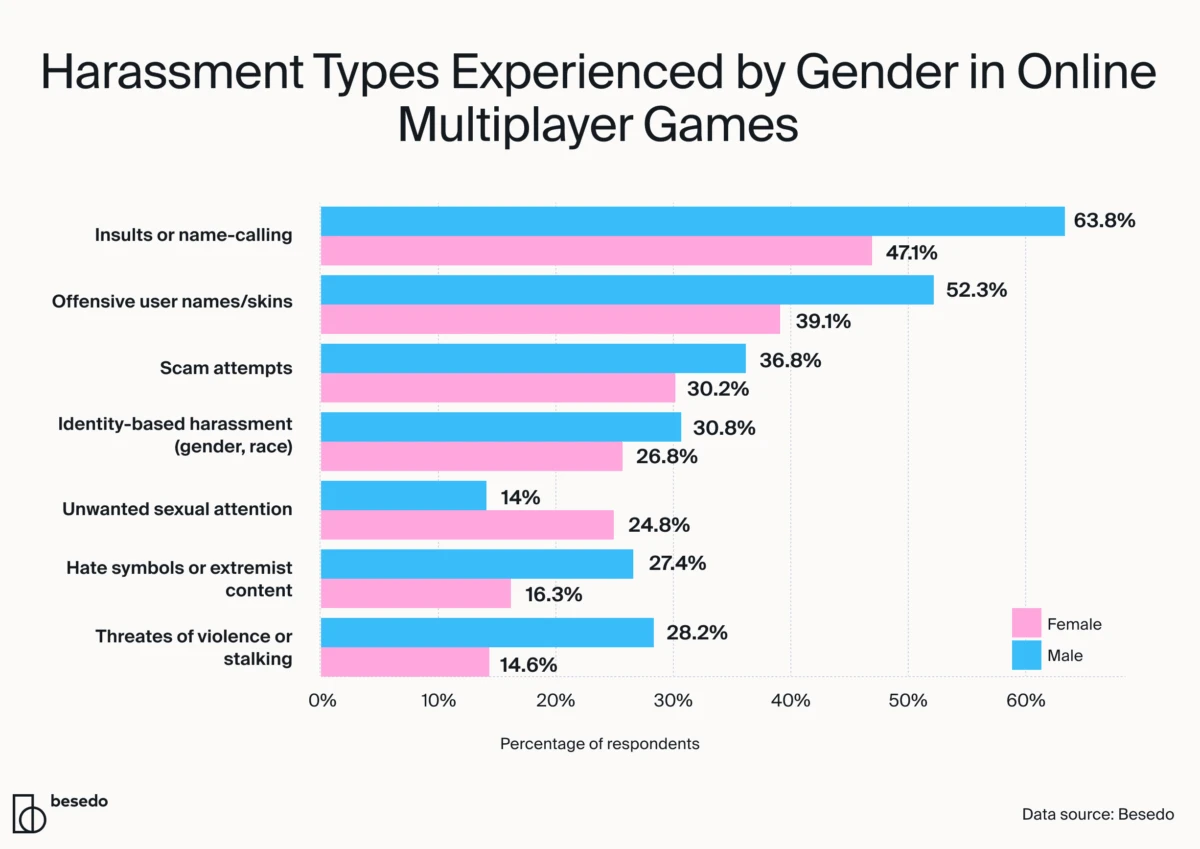

Harassment affects everyone, but our data clearly shows significant gender differences. Women experience notably higher rates of unwanted sexual messages (25%) compared to men (14%). On the other hand, men reported significantly higher rates of insults or name-calling (64%) compared to women (47%).

Many gamers adapt by muting voice chats, avoiding random matchmaking, or playing only with friends. More troubling, a significant portion are walking away entirely from games they once enjoyed.

Alarmingly, more than half (52%) of women said they had stopped playing a game because of harassment or a toxic community. At that rate, a game studio could lose half of its female players to an unsafe environment. You don’t need a business degree to understand that this is incredibly bad for business.

These gender differences are important because they show how targeted harassment can dramatically impact player retention and brand reputation.

Behavioral impact: Toxicity is changing how gamers play

Harassment doesn’t just upset gamers. It actively changes how they play and interact. Our survey reveals the coping mechanisms players adopt:

- 58.8% mute or block toxic players.

- 29.8% actively avoid certain communities or platforms.

- 28.3% quit game sessions or matches mid-game due to harassment.

- 27.2% stopped using voice or text chat altogether.

- 12.4% completely stopped playing certain games.

These behavioral changes directly impact the social experience and community engagement, two crucial pillars of successful online gaming platforms.

Players are quitting

This phenomenon is echoed in industry-wide research, which states that 7 out of 10 gamers have avoided playing at least one game due to the game’s toxic reputation or community. That is a huge chunk of the player population deliberately steering clear of specific games because of how players behave in those spaces. A toxic reputation can become a kiss of death for an online title.

No matter how great the gameplay is, if the community is known for harassment, many potential players will simply stay away.

The kids aren’t alright

Here’s another startling insight: only about 10% of respondents felt comfortable letting children under 13 play online multiplayer games, while 84.4% said they would not. Let that sink in: the large majority of adult gamers, many of whom grew up gaming themselves, believe these spaces are too toxic for kids.

This speaks volumes about how toxic the average game lobby has become. When even seasoned adult gamers are wary of exposing children to the standard multiplayer experience, it’s a damning indictment of the status quo.

Parents have reason to be concerned: Abuse and bullying in games can start at a very young age. Recent studies show that three-quarters of teens and pre-teens (ages 10–17) experienced harassment in online games, a sharp rise from the previous year. The kinds of slurs, sexual comments, and hate imagery that proliferate in some games are absolutely not what most parents want their kids to witness or endure.

This finding should resonate with industry leaders on two levels. First, ethically, it’s alarming that online games often carry Teen or even “Everyone” ratings while children face such hostility in them. Gaming is mainstream entertainment for youth, yet we are effectively saying that many popular titles are unsafe without heavy supervision. Second, strategically, think about the long-term business implications: today’s kids are tomorrow’s customer base.

If most adults wouldn’t let their kids into your online ecosystem, you’re seeding a future audience problem.

The gaming industry has long focused on cultivating the next generation of fans, but toxicity is blocking the on-ramp. For platform owners, this is yet another signal that cleaning up online spaces isn’t just a moral duty – it’s necessary to secure the trust of families and the market’s longevity.

Players demand action: Moderation is a must

If there is a silver lining in all this, it’s that gamers themselves overwhelmingly want change, and they believe the responsibility for cleaning up online spaces lies with the platforms and publishers who run them.

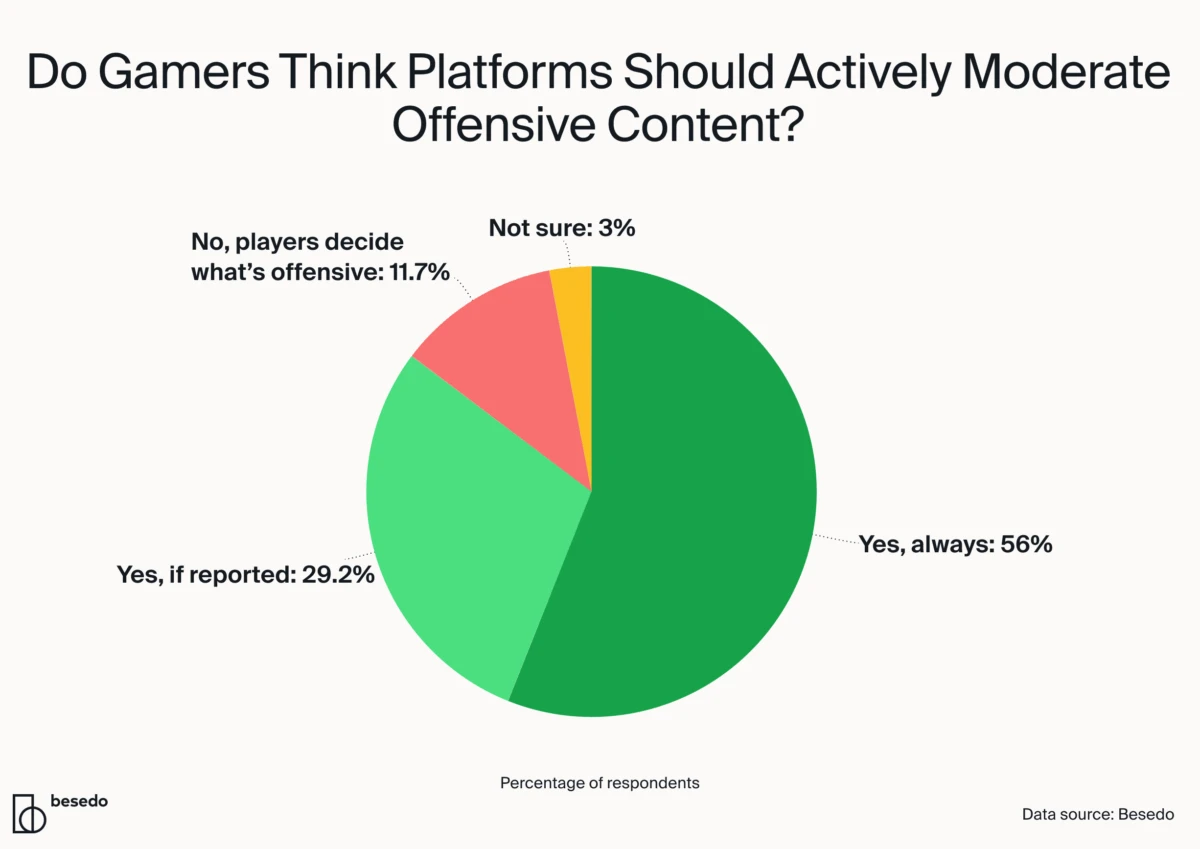

Our survey found that 85.25% of respondents believe gaming platforms should actively moderate offensive content (such as hate symbols, slurs, or sexually explicit mods/skins).

In fact, over 56% said “Yes, always” – platforms should always be proactively removing hateful content – and another ~29% said “Yes, but at least when such content is reported.” Only a tiny sliver disagreed or wasn’t sure.

The takeaway is unmistakable: most players want companies to take a more active role in fighting toxicity.

This is echoed by other industry research. According to a Unity Technologies report, 77% of multiplayer gamers believe that protecting players from abusive behavior should be a priority for game developers.

The days of a hands-off approach (“we just make the game, players can police themselves”) are over – if they ever truly existed. Players aren’t asking for censorship; they’re asking for safety. If platforms clearly and consistently enforce rules against harassment, players are likely to reward them with loyalty, more engagement, and increased spending.

Leading figures in gaming have started to openly acknowledge this responsibility.

“Xbox Live is not a free speech platform… It is not a place where anybody can come and say anything”

— Phil Spencer, CEO of Microsoft Gaming

Statements like this are important – they set the tone from the top that toxic behavior is not welcome on major platforms. Other companies have followed suit with initiatives like cross-platform bans for the worst offenders, AI-driven moderation tools, and enhanced reporting systems. And we know quite a lot about AI-driven moderation tools ourselves.

However, words and isolated features aren’t enough if enforcement is inconsistent or a company’s stance isn’t communicated to the player base.

The end goal isn’t to play nanny or ruin the fun – it’s to cultivate communities where all players can have fun without fear of harassment. Given that almost 1 in 10 players have encountered overt extremism (like Nazi symbolism or racial hate) in games, there’s no question that some content has no place in our lobbies and needs to be actively stamped out.

Technology’s role in safer gaming communities

While gamers overwhelmingly want platforms to moderate harmful content, doing it at scale is no small feat. Millions of interactions happen every minute across chats, voice, and user-generated visuals. Manual moderation alone can’t keep up.

This is where AI-powered moderation comes in.

According to Apostolos Georgakis, our CTO at Besedo, real-time, AI-driven content moderation combined with strong policy enforcement is the robust solution online gaming desperately needs. “Real-time is critical,” he says. “It punishes violations immediately, unlike delayed manual moderation, which rarely changes behavior. AI can also adapt to evolving language, subcultures, and slang much better than rule-based systems.”

Apostolos explains that smarter workflows, automated and tiered by severity, allow platforms to respond faster and more fairly. “A safer user experience leads to more enjoyment, stronger communities, and ultimately, more engagement and revenue,” he adds. “The tech isn’t just protective. It’s also a growth lever.”
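To make the idea of workflows "automated and tiered by severity" a little more concrete, here is a minimal sketch in Python. It is not Besedo's implementation: the tier names, the action labels, the `Flag` fields, and the 0.9 confidence threshold are all hypothetical, chosen only to illustrate how a platform might route flagged content.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity tiers; a real policy would define its own."""
    LOW = 1     # e.g. mild insults
    MEDIUM = 2  # e.g. targeted harassment
    HIGH = 3    # e.g. threats or hate symbols


@dataclass
class Flag:
    """One piece of content flagged by an (assumed) upstream classifier."""
    user_id: str
    content: str
    severity: Severity
    confidence: float  # classifier confidence in [0, 1]


def route(flag: Flag, auto_threshold: float = 0.9) -> str:
    """Pick an action for a flagged item based on severity and confidence."""
    # Uncertain flags go to a human moderator, whatever the severity,
    # to limit the impact of false positives.
    if flag.confidence < auto_threshold:
        return "human_review"
    # Confident flags are handled automatically, with a stronger
    # response for each higher tier.
    if flag.severity == Severity.HIGH:
        return "remove_and_suspend"
    if flag.severity == Severity.MEDIUM:
        return "remove_and_warn"
    return "mute_message"
```

In this sketch, a confident high-severity flag such as `Flag("u1", "...", Severity.HIGH, 0.97)` is acted on immediately, while the same content flagged at 0.6 confidence is queued for a person to review. The point of the design is that speed is reserved for the clear-cut cases.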

Of course, implementing this kind of system isn’t plug-and-play. “The biggest challenge is balancing real-time accuracy with infrastructure that scales affordably,” Apostolos says. Moderating images, voice, and video content in real time requires a different architecture than batch- or queue-based moderation systems. “You need compute-efficient transformer models, smart latency management, and retraining pipelines that minimize false positives and negatives in an ever-changing context.”

Harassment is a business issue

Harassment and hate aren’t merely “social issues” – they have direct commercial consequences. If nearly half of a player base quits or avoids a game due to toxicity, any plans for growth or monetization are basically dead on arrival. And one cannot upsell content to players who have already left.

“We’re increasingly seeing user-generated content in games take unexpected turns, things like custom football jerseys or in-game designs that platforms never anticipated would go south,” says Axel Banér, Chief Commercial Officer at Besedo.

“If users have a bad experience, whether it’s harassment in a game, scams on a marketplace, or hate speech on a social platform, retention becomes nearly impossible. User-generated content is a huge competitive advantage. The challenge is enabling that creativity while putting the right safeguards in place to avoid bad press and keep communities safe,” adds Axel before concluding, “But there are ways to stay ahead of it. We’ve built solutions that help platforms detect and prevent this kind of content before it becomes problematic.”

Players reportedly spend 54% more on games they perceive as safe and non-toxic. Creating safer spaces isn’t just morally right; it’s financially smart. Every unchecked bigoted rant or sexist attack might drive away dozens of other players, shrinking the total user pool and revenue potential in the long run.

The data backs this up. Our survey results highlight the lost opportunities: when players quit a game (or never join in the first place) due to a hostile environment, any money they might have spent on in-game purchases, battle passes, or expansions disappears as well.

Consider these findings from other studies and industry analyses: 20% of gamers (teens and adults) have reduced their spending on a game because of in-game harassment. Conversely, players are willing to spend more in games that feel safe – one analysis found gamers spend 54% more on titles they perceive as “non-toxic”.

Healthy communities drive deeper engagement, session length, and, yes, monetization, while toxic communities stifle all of the above.

Methodology

The fine print, the nuts and bolts, or the nitty gritty, if you will: We surveyed 2,000 gamers in the USA aged 18-45 during April and May 2025. Respondents were evenly split: 50% female and 50% male. All participants confirmed they are active players participating in online multiplayer games.
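For readers who prefer headcounts to percentages, the short Python sketch below converts a few of the reported shares into approximate numbers of respondents. It assumes that each percentage is a share of the full sample of 2,000 (or of the 1,000 women, for the gender-specific figure) and that every respondent answered every question; the survey summary does not state this explicitly. The `to_headcount` helper is ours, written just for this illustration.

```python
SAMPLE_SIZE = 2000   # total respondents, from the methodology
WOMEN = 1000         # 50% of the sample, from the methodology

# Shares reported in this article, in percent of all respondents.
reported_shares = {
    "experienced harassment": 77.75,
    "want active moderation": 85.25,
    "mute or block toxic players": 58.8,
    "stopped playing certain games": 12.4,
}


def to_headcount(percent: float, base: int = SAMPLE_SIZE) -> int:
    """Convert a percentage of `base` into a rounded number of respondents."""
    return round(percent / 100 * base)


# Assumes every share is measured against the full sample.
headcounts = {label: to_headcount(p) for label, p in reported_shares.items()}

# The 52% figure applies to women only, so it uses the smaller base.
women_who_quit = to_headcount(52, base=WOMEN)
```

Under those assumptions, 77.75% corresponds to 1,555 of the 2,000 respondents, and 52% of women corresponds to 520 of 1,000.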

If you’re a reporter, blogger, or content creator, feel free to reach out and we’ll set you up with a media kit of images and insights. Our team is also happy to provide unique insights tailored to your audience. Hit us up at press@besedo.com.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.