
Dealing with fake accounts is a challenge for any social media platform, and LinkedIn is no exception. The question is how well it’s handling them. We dug through LinkedIn’s own reports and found a huge uptick in fake accounts in recent years.

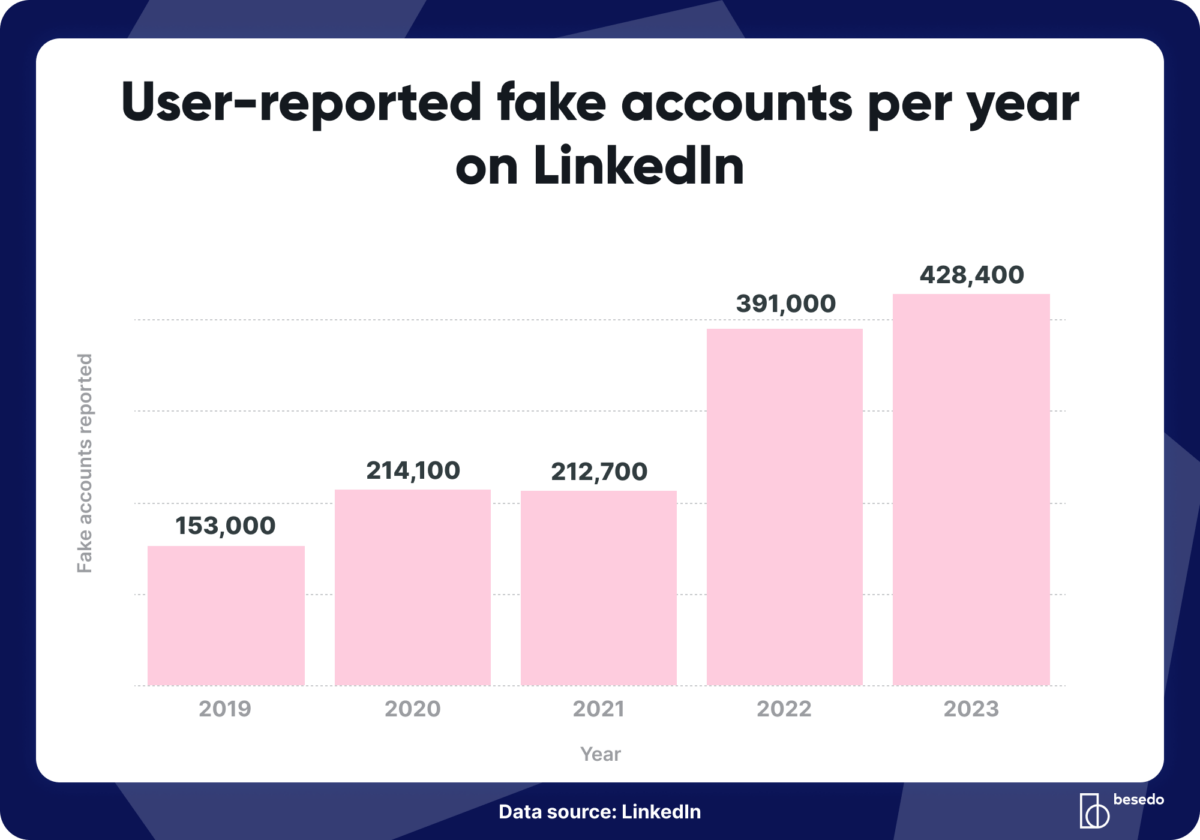

Fake accounts reported by users are up, big time

LinkedIn’s reporting divides the fake accounts it has removed into three categories:

- Fake accounts blocked automatically during registration.

- Fake accounts proactively restricted by LinkedIn after they had been created but before users reported them.

- Fake accounts restricted after having been reported by LinkedIn’s users.

It’s that last one that affects end users negatively, because by then the burden of moderating the platform has been moved from LinkedIn to them.

So how bad is it? In 2022 and 2023, LinkedIn users reported about twice as many fake accounts as in previous years.

That’s 391,000 fake accounts in 2022 and 428,000 in 2023, all of which reached end users.

Looking at the whole five-year period, 2023 saw 2.8 times as many user-reported fake accounts as 2019.
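To put those growth figures side by side, here is a quick back-of-the-envelope calculation in Python using only the numbers quoted above. The variable names are ours, and because the 2.8x multiple is rounded, the 2019 level it implies is an approximation, not a figure taken from LinkedIn’s reports.

```python
# User-reported fake accounts, as quoted above from LinkedIn's reports.
reported_2022 = 391_000
reported_2023 = 428_000
multiple_vs_2019 = 2.8  # rounded multiple quoted in the text

# Year-over-year change from 2022 to 2023.
yoy_change = reported_2023 / reported_2022 - 1
print(f"2022 -> 2023: {yoy_change:+.1%}")
# prints: 2022 -> 2023: +9.5%

# Approximate 2019 level implied by the rounded 2.8x multiple (an estimate only).
implied_2019 = reported_2023 / multiple_vs_2019
print(f"Implied 2019 level: ~{implied_2019:,.0f}")
# prints: Implied 2019 level: ~152,857
```

Since a rounded 2.8 could be anything from 2.75 to 2.85, the implied 2019 figure lands somewhere around 150,000 to 156,000 user-reported fake accounts.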

It would be a disaster without automated systems

On platforms with a lot of user-generated content (UGC), and social media platforms are all about UGC, part of successful content moderation is safeguarding the user base. That’s why it’s so important to block fake accounts.

LinkedIn has automated systems in place to detect and block fake accounts, but they are clearly not working all the time.

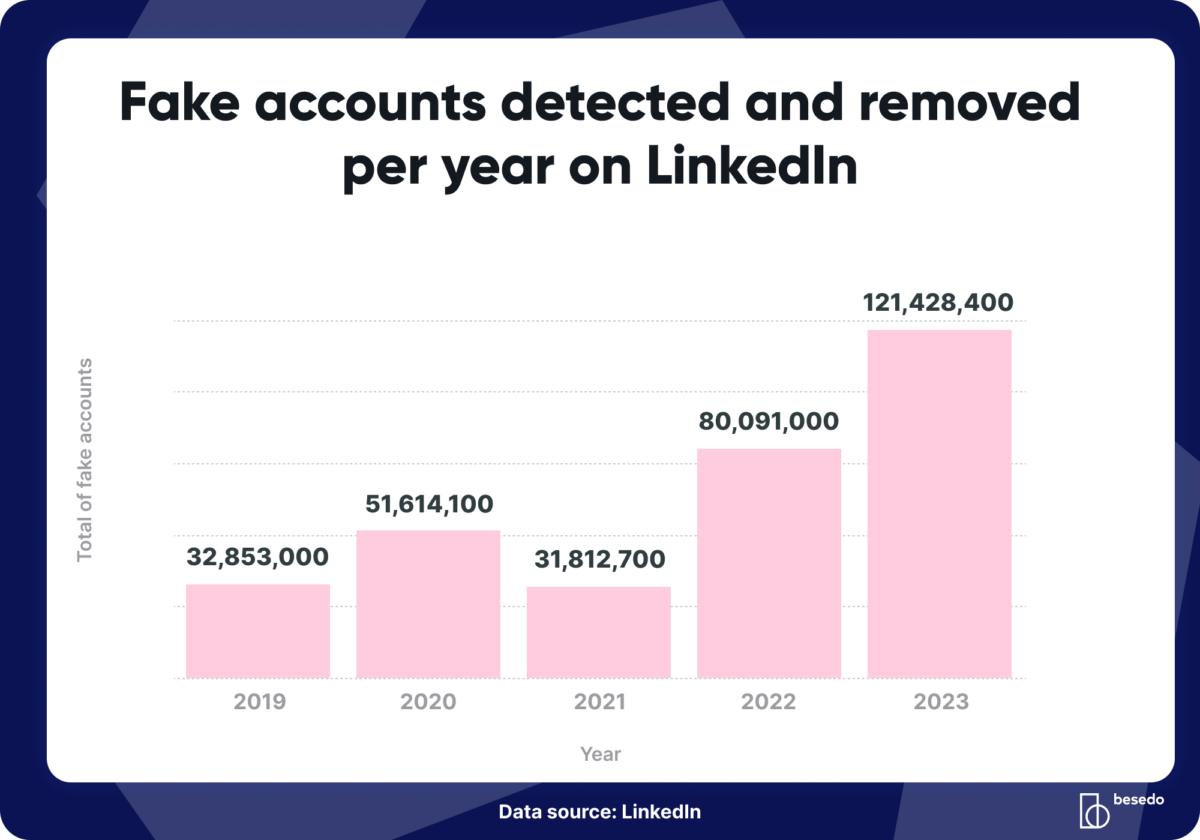

However, if there were no automatic detection in place, LinkedIn would be completely overrun by fake accounts. In 2023, LinkedIn blocked or removed more than 121 million fake accounts.

End users “only” had to deal with 428,000 of those.

To put those numbers in perspective, let’s take something we have data for: LinkedIn’s EU user base. In the second half of 2023, the average monthly active user base on LinkedIn in the EU was 47.9 million people.

The total number of fake LinkedIn accounts blocked in 2023 was equivalent to about two and a half times the entire LinkedIn EU user base.
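The comparison is simple division. Here is a minimal sketch with the two figures quoted above (variable names are ours). Keep in mind that it sets a full year of blocked accounts against a monthly average of active users, and that 121 million is a lower bound, so the result is a rough sense of scale rather than a precise ratio.

```python
# Figures quoted above.
blocked_or_removed_2023 = 121_000_000  # "more than 121 million" in 2023
eu_avg_mau_h2_2023 = 47_900_000        # average monthly active EU users, H2 2023

# How many "EU user bases" the year's blocked fake accounts add up to.
ratio = blocked_or_removed_2023 / eu_avg_mau_h2_2023
print(f"{ratio:.2f}x the EU user base")
# prints: 2.53x the EU user base
```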

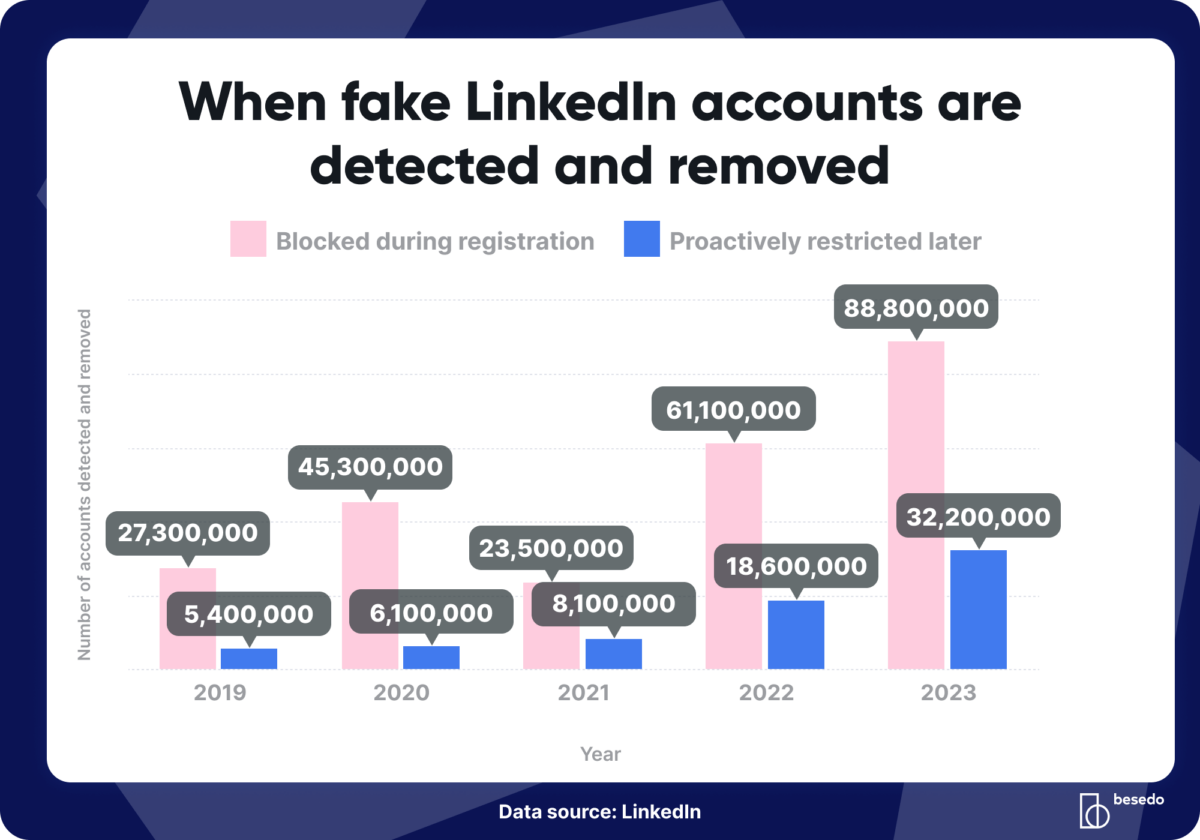

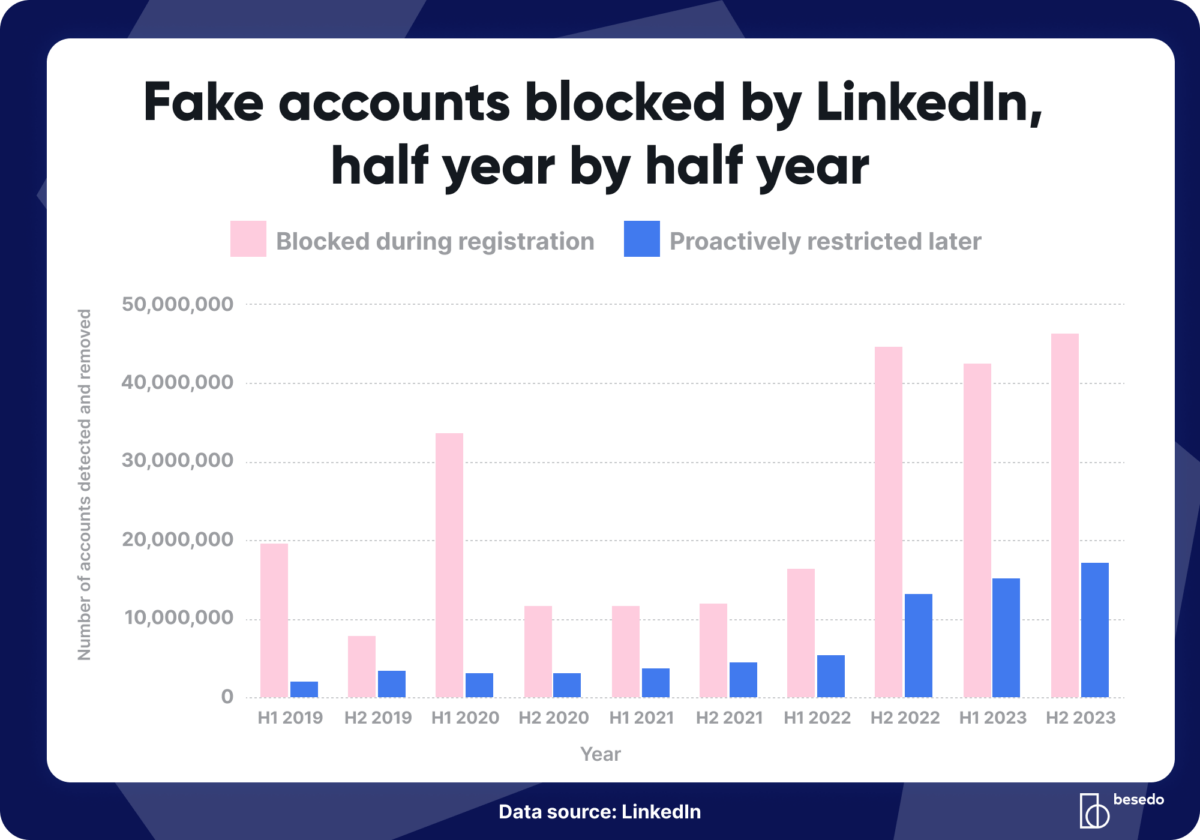

If we split the numbers into accounts blocked at (attempted) registration and accounts restricted proactively later by the moderation team (before end users report them), you get the following breakdown.

While LinkedIn’s own moderation processes block the majority of fake profiles early on, the sheer volume means that even a tiny sliver amounts to hundreds of thousands of fake accounts.

And that, of course, is far from ideal. It’s a very challenging problem at scale, but end users should never have to be your warning system.
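How tiny is that sliver? Using the 2023 figures quoted earlier, a quick calculation (again a sketch with our own variable names) gives the share of fake accounts that reached users before being removed. Since 121 million is a lower bound, the true share is, if anything, slightly smaller.

```python
# 2023 figures quoted above; 121 million is a lower bound ("more than").
blocked_or_removed_2023 = 121_000_000
user_reported_2023 = 428_000  # included in the 121 million total

# Fraction of all fake accounts that end users had to report themselves.
share_reaching_users = user_reported_2023 / blocked_or_removed_2023
one_in_n = round(blocked_or_removed_2023 / user_reported_2023)

print(f"{share_reaching_users:.2%} reached users, about 1 in {one_in_n}")
# prints: 0.35% reached users, about 1 in 283
```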

How effective is LinkedIn at stopping fake accounts?

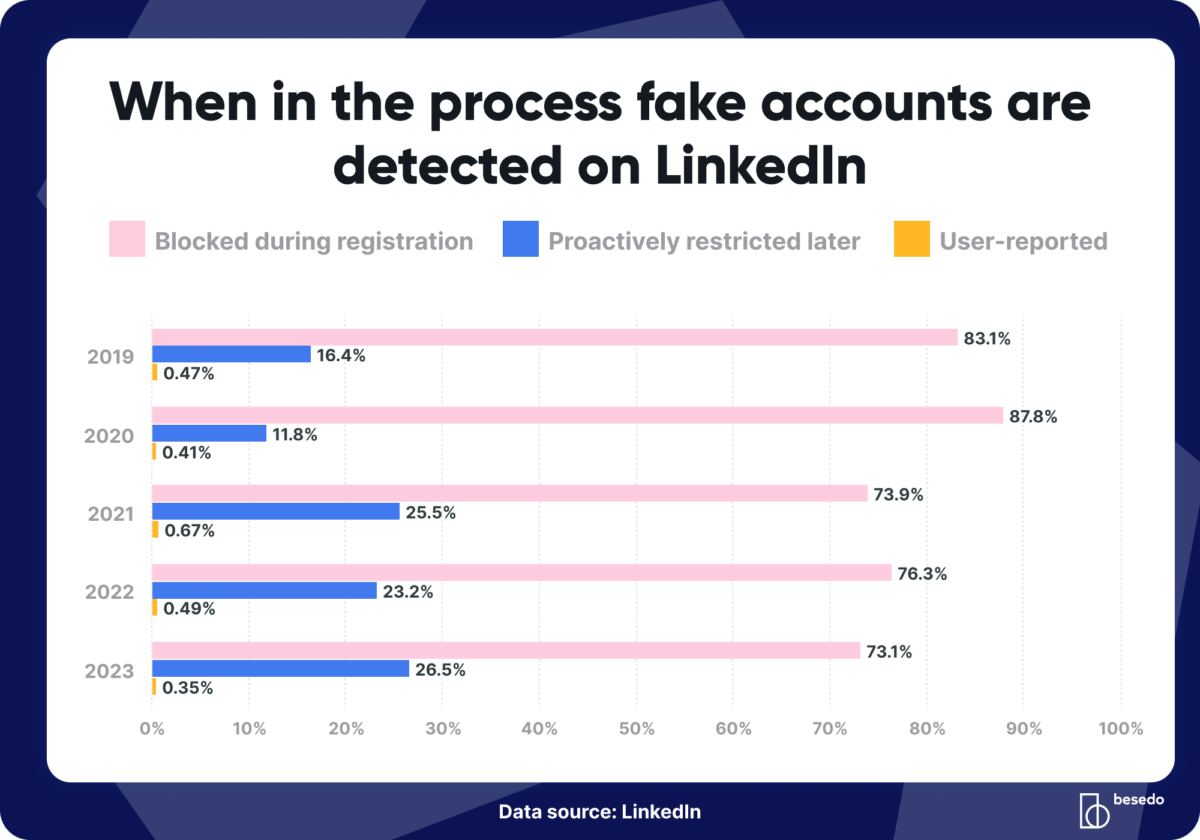

If we look at the numbers year by year, we see that since 2021 a larger share of fake accounts has managed to get past the registration process.

Over the past three years, about one in four fake accounts got past the first line of defense.

The share of fake accounts reported by users was lower last year than before, but because the total volume of fake accounts is so large, the absolute number of user-reported fake accounts still went up.

We should add that this assumes the reports give us the complete picture. Some fake profiles likely never get detected at all, or stay live for a long time.

Why fake accounts are dangerous

They are rarely used for anything good, are they? Fake accounts and profiles can be used for everything from fraud and scams to things like disinformation campaigns. And of course, their mere presence can worsen the user experience significantly.

Imagine you’re a state trying to sway opinion on a subject important to your politics or world standing, or trying to sow division and increase tension in other parts of the world. Nothing is more valuable than an army of accounts that can regurgitate propaganda and disinformation as if it were coming from real people.

This applies not just to state-sponsored propaganda but also to special interest groups, terrorist organizations, and other criminally, politically, or ideologically motivated groups that want to deceive and confuse with false or misleading information.

Remember troll farms? Fake accounts are one of their weapons for manipulating opinion at scale. They are also a common way for scammers to find new victims.

How fake accounts are used depends on the platform, but it should always be a priority to detect and remove them as fast as possible. They can seriously hurt both trust and credibility.

When did fake profiles start increasing and why?

If we zoom in a bit, it becomes obvious that something changed in the second half of 2022.

Timing-wise, we find it interesting that ChatGPT launched in November 2022, the year LLMs really broke through. Is that one of the factors?

Very likely.

The rise of generative AI has made it way easier to automate interactions online (which we can see even from legitimate accounts with AI comment spam). It simplifies the creation of fake accounts, fake profile pictures, and content en masse.

That only helps us answer the question of how (maybe), but not the who or the why. Only LinkedIn has the data to answer those questions properly.

The reasons and perpetrators likely change over time, but Brian Krebs (of Krebs on Security fame) had some interesting observations back in 2022 about fake accounts on LinkedIn and possible reasons why, such as fraud and various scams.

Also in 2022, LinkedIn published a blog post warning its users to be careful and explaining how to spot fraudulent activity on the platform.

On a side note regarding that final chart, it’s interesting to see that there was also a significant peak in the first half of 2020, coinciding with everything from the rise of the Covid-19 pandemic to Brexit.

We suspect that LinkedIn’s content moderation team has a few interesting stories to tell.

Data sources: Numbers collected from LinkedIn’s community reports for 2019 through 2023, plus some additional calculations by us. We also lifted some numbers from LinkedIn’s most recent DSA transparency report. For the curious, these are interesting reports in general, and it’s good to see such transparency on matters important to the LinkedIn community.

P.S. If you’re wondering what this DSA thing is, we got you covered.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.