
Last week, Instagram and Threads pulled a “Thanos snap” on their users, making posts and accounts disappear 🫰. Plot twist: it turns out it wasn’t AI gone rogue, but human error.

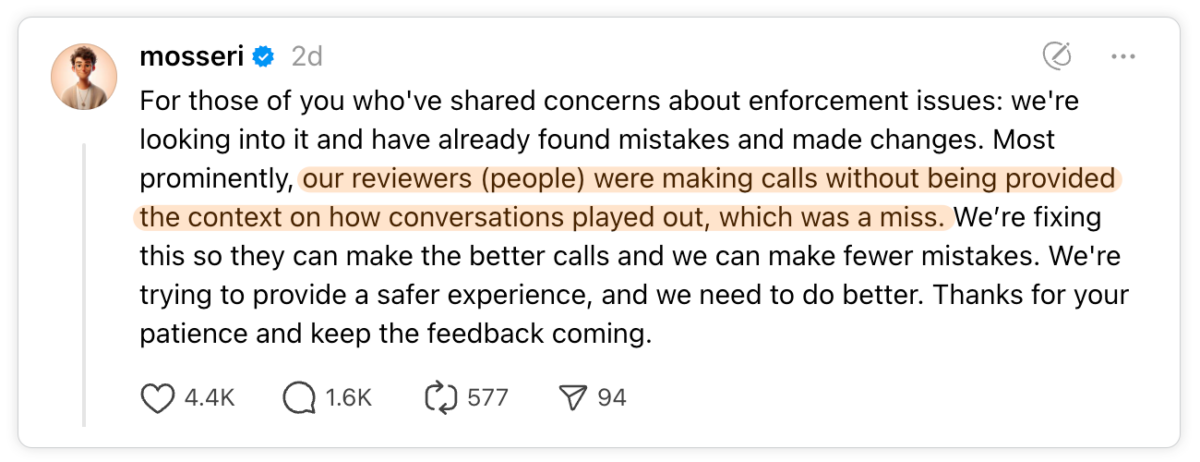

Instagram’s recent moderation meltdown saw posts disappear, accounts get the cold shoulder, and, presumably, some hectic days for the good people at Meta. Adam Mosseri, Instagram’s head honcho, revealed it wasn’t their AI going berserk; it was human reviewers making calls without the full picture.

TechCrunch covered the whole thing in detail.

Who is to blame, really?

It’s easy to point fingers, but the real issue is context. AI moderation tools are getting better every day, yet they’re not perfect, and neither are the humans reviewing their output. Both need the full picture to make the right call. Imagine trying to understand a complex debate by reading only one sentence: you’re bound to miss key details, right? That’s exactly what happened here.

Automation needs a human-in-the-loop

The secret sauce for any successful content moderation system is striking the right balance between AI and human judgment. AI is fantastic at sifting through massive amounts of data, catching obvious violations, and flagging suspicious content. But it’s only as good as the humans who train it.

In AI content moderation, context is the key to making precise, responsible decisions. An accurate solution needs to address this challenge by considering the full context at multiple levels: the word, the sentence, and the entire document. Furthermore, multimodal models that combine different input types (text, images, and metadata) are crucial for moderating content today, when communication happens across multiple formats at once.

For example, a post might include text that is fine on its own, but an accompanying image or a specific metadata tag could alter its meaning entirely. By aggregating and interpreting these inputs, the AI system can form a more holistic understanding of the moderated content.
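To make the aggregation idea a little more concrete, here is a minimal sketch of one simple late-fusion technique, a noisy-OR over per-modality risk scores. Everything here is an illustrative assumption, not Besedo’s actual method: the `ModalityScores` class, the `fuse_noisy_or` and `is_flagged` helpers, the 0.7 threshold, and the premise that separate classifiers already produce a risk score in [0, 1] for the text, image, and metadata of a post. Noisy-OR also assumes the signals are independent, which real modalities rarely are.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class ModalityScores:
    """Hypothetical per-modality risk scores in [0, 1], e.g. from separate classifiers."""
    text: float
    image: float
    metadata: float


def fuse_noisy_or(scores: ModalityScores) -> float:
    """Noisy-OR late fusion: the probability that at least one modality
    signals a violation, assuming the signals are independent."""
    parts = (scores.text, scores.image, scores.metadata)
    return 1.0 - prod(1.0 - p for p in parts)


def is_flagged(score: float, threshold: float = 0.7) -> bool:
    """Hypothetical decision rule: flag the post when fused risk meets the threshold."""
    return score >= threshold


if __name__ == "__main__":
    text_only = ModalityScores(text=0.3, image=0.0, metadata=0.0)
    full_post = ModalityScores(text=0.3, image=0.5, metadata=0.2)
    # The text alone stays under the threshold...
    print(is_flagged(fuse_noisy_or(text_only)))
    # ...but the same text plus its image and metadata crosses it (about 0.72).
    print(is_flagged(fuse_noisy_or(full_post)))
```

The point of the example is the one made above: the same text can land on either side of the line depending on what accompanies it. A production system would more likely learn a joint model over all inputs rather than combine independent scores.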

- Train AI with Human Insights: Let AI handle the heavy lifting, but use human expertise to teach it the finer points of context and nuance.

- Keep Humans in the Loop: Always let human moderators review edge cases and help refine AI decision-making.

- Continuous Learning: AI and human moderators should constantly learn and adapt. For the times, they are a-changin’ — and fast.

- User Feedback Matters: Listen to your community. They’re the ones experiencing your platform firsthand.
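The first three practices above often come down to a simple pattern: confidence-based routing. Here is a minimal sketch under stated assumptions. The `HumanInTheLoopRouter` class, its threshold values, and the idea that a model outputs a single risk score per item are all hypothetical and only for illustration. Clear-cut cases are handled automatically, the uncertain middle band goes to a human review queue, and human verdicts are stored as labels that could later be used to retrain the model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"


@dataclass
class HumanInTheLoopRouter:
    """Hypothetical router; the thresholds are illustrative, not recommended values."""
    approve_below: float = 0.2
    remove_above: float = 0.9
    review_queue: List[Tuple[str, float]] = field(default_factory=list)
    training_labels: List[Tuple[str, bool]] = field(default_factory=list)

    def route(self, item_id: str, risk: float) -> Route:
        # Clear-cut cases: let automation do the heavy lifting.
        if risk < self.approve_below:
            return Route.AUTO_APPROVE
        if risk > self.remove_above:
            return Route.AUTO_REMOVE
        # Uncertain band: treat it as an edge case and send it to a human moderator.
        self.review_queue.append((item_id, risk))
        return Route.HUMAN_REVIEW

    def record_human_decision(self, item_id: str, is_violation: bool) -> None:
        # Store the human verdict as a labeled example for future retraining.
        self.training_labels.append((item_id, is_violation))


if __name__ == "__main__":
    router = HumanInTheLoopRouter()
    print(router.route("post-1", 0.05))  # Route.AUTO_APPROVE
    print(router.route("post-2", 0.95))  # Route.AUTO_REMOVE
    print(router.route("post-3", 0.55))  # Route.HUMAN_REVIEW
    router.record_human_decision("post-3", is_violation=False)
```

Where to set the two thresholds is a trade-off between moderator workload and the cost of automated mistakes; widening the review band sends more edge cases to humans and generates more training labels.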

“Effective AI content moderation requires understanding the full context—at the word, sentence, and document level—while leveraging multimodal inputs like text, images, and metadata. By capturing nuances such as tone, intent, and format, our solution ensures more accurate and fair moderation decisions,” says Syrine Cheriaa, our Lead DSR at Besedo.

A lot of responsibility rests on content analysts and human moderators to stay vigilant. Mistakes can, and will, lead to user frustration, and with a large user base, they can quickly snowball into a PR disaster. Wrongly flagged or overlooked content drives users away and erodes trust between the platform and its community.

For those in the back, one more time: technology is here to enhance human decision-making, not replace it entirely.

“AI and rule-based automation are incredibly efficient at scaling moderation, but the human touch is needed to some extent so that we don’t lose sight of context and nuance. When you can combine the strengths of both, you reduce mistakes and protect your platform’s reputation,” adds Axel Banér, Chief Commercial Officer at Besedo.

Here at Besedo, our approach combines AI with human experts, such as content analysts, data linguists, and analysts, to make sure content is reviewed accurately.

While you might not be as big as Instagram and Threads, you still want what is best for your users and their experience.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.