
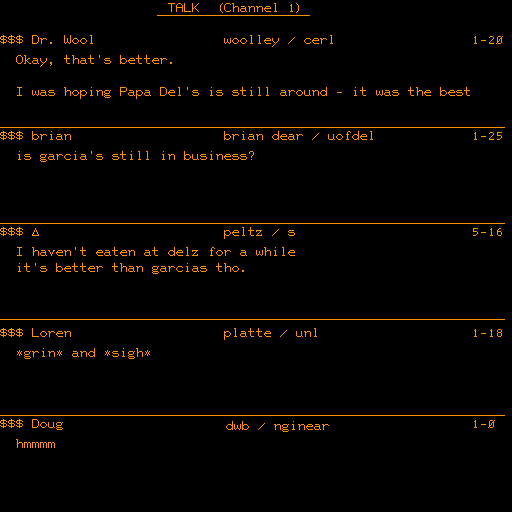

Do you remember the early days of Talkomatic? It was the first online chat system launched in 1973. Since then, our preferred mode of interaction has shifted from real-life conversations to keyboards, emojis, and initialisms like LOL.

So, what captivates us about the ability to chat with random people from all corners of the globe? How do these chats affect our self-esteem, and what are the consequences when talking to a stranger goes wrong?

It is safe to say that the internet has stirred things up and opened up a whole new world to explore for anyone interested in human interaction. One might even say that the internet exists solely to connect us. When a group of developers created Talkomatic over 50 years ago, it became the catalyst for what would become the new standard for interactions.

Online chats – the origin

Just two years before Talkomatic, the first email was sent. In 1971, Ray Tomlinson “sent several test messages to himself from one machine to the other,” he recalls. The chat, however, was something else. An online chat was a conversation in real-time, and it took online interaction as close to a real-life conversation as possible. With a chat, you didn’t need to wait for the respondent to open the email, read it, and send a response, which could take days.

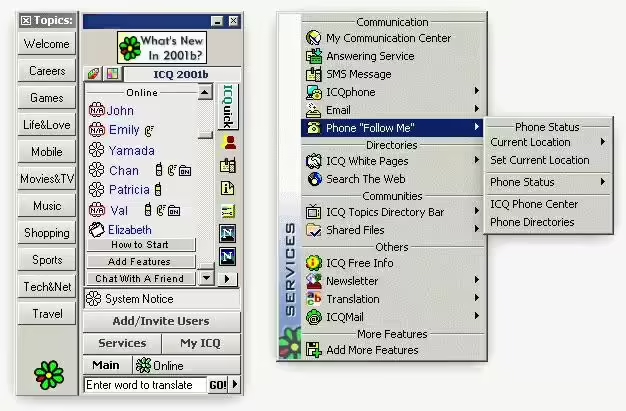

Talkomatic was groundbreaking, but it would take another 20 years before online chat was widely adopted, and even then, you still needed to own a home computer. Online chat took off in the early Internet era with the release of the IRC protocol, and a few years later, we got to know ICQ with its annoying “uh-oh” messaging sound.

For the first time, getting to know people from across the globe was literally just a matter of pushing some buttons. Today, interacting on Messenger, WhatsApp, and via SMS is a given in most people’s social lives.

People behave more extremely online

Chatting online is in many ways safer than interacting in real life, not least because you have some distance from the person you are interacting with. Even so, it carries real risks. First and foremost, let’s look at what online chats do to our long-term well-being. A study involving University of Pennsylvania students discovered that higher social media usage resulted in heightened feelings of isolation and depression. But what is it about online chatting, more specifically, that can be harmful?

One significant reason online chatting can be risky is that people often feel more inclined to behave worse online than in real life. Some individuals intentionally spread misinformation or cause shock and distress, sometimes just for amusement. This behavior is frequently called “trolling,” which can be misleading by suggesting merely annoying but harmless pranks. In truth, some internet “trolls” engage in conduct that escalates into harassment or even abuse.

Psychological research has also identified the “online disinhibition effect,” a phenomenon where people are less likely to restrain negative behavior when interacting online. The associated research paper outlines six factors that reduce people’s reluctance to harm others online, among them anonymity, dissociative imagination, and the minimization of authority.

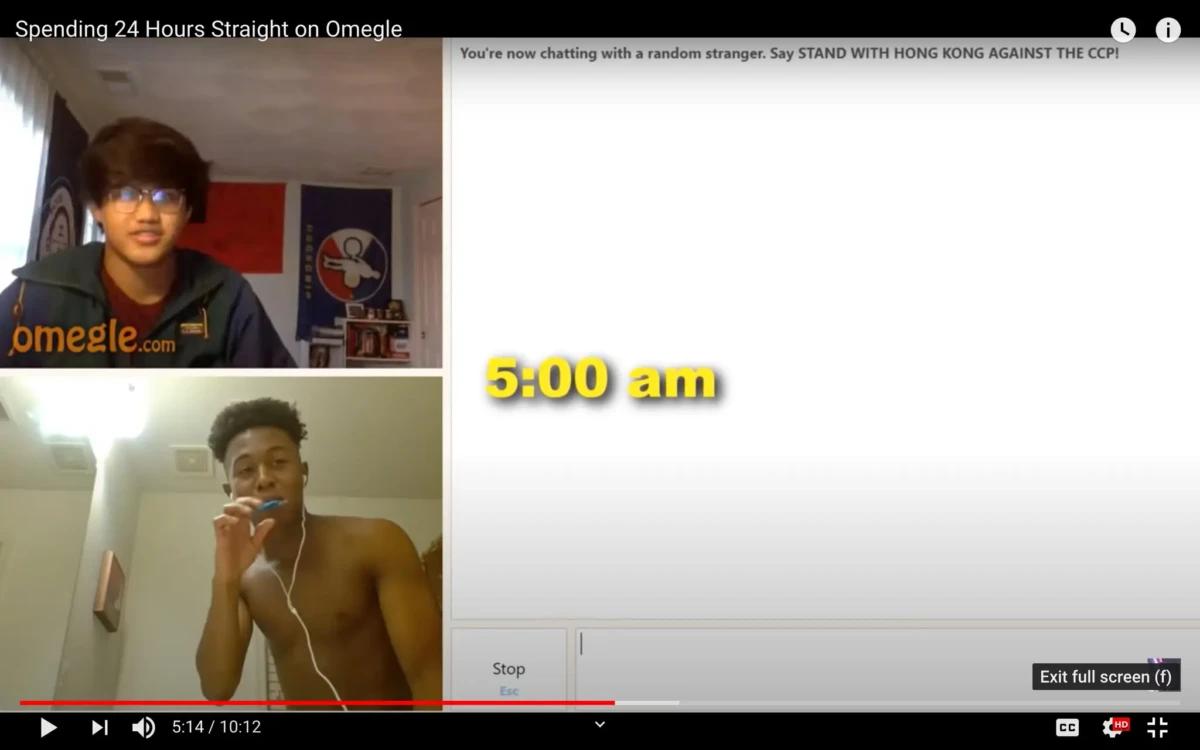

Chatroulette and Omegle Chat

Released in 2009, Omegle was an online chat platform that enabled users to connect and socialize without requiring registration. It randomly matched users for one-on-one chat sessions, allowing them to communicate anonymously. Both Omegle and Chatroulette are perfect examples of what happens when you combine anonymity with the lack of content moderation.

For a long time, there was no real age restriction, and users as young as 13 could use Omegle with parental consent, which wasn’t very safe.

It would take several years before Omegle implemented a so-called profanity filter to prevent minors from being exposed to sexual content. By then, it was too late. In 2022, Omegle was on life support due to a 22-million-dollar lawsuit filed by an Oregon user who became a victim of sexual exploitation. Omegle shut down in 2023, and its legacy will forever be associated with male genitalia and sexual content.

As technology has developed, we have seen everything from chats focusing on speech (oh yes, we all remember the Clubhouse hype) to Roblox, an online platform and game creation system where users can design and share games and play games created by other users in various genres.

MSN Messenger

MSN Messenger (also known colloquially simply as MSN), later rebranded as Windows Live Messenger, was a cross-platform instant-messaging client developed by Microsoft.

Let’s not forget the massive popularity of MSN Messenger. Remember sitting and waiting for your best friend (or the classmate you were secretly in love with) to log in, then sending your favorite song, chatting for hours, sending nudges, and changing your “display name” to a secret message or a catchy lyric from your favorite song at the time?

Most people probably have good memories of MSN Messenger. It was based on “invites,” so you were only connected to people you knew. Even then, however, fraudsters and sexual predators found their way into unsuspecting users’ MSN lists.

The service was discontinued in 2013 and was replaced by Skype.

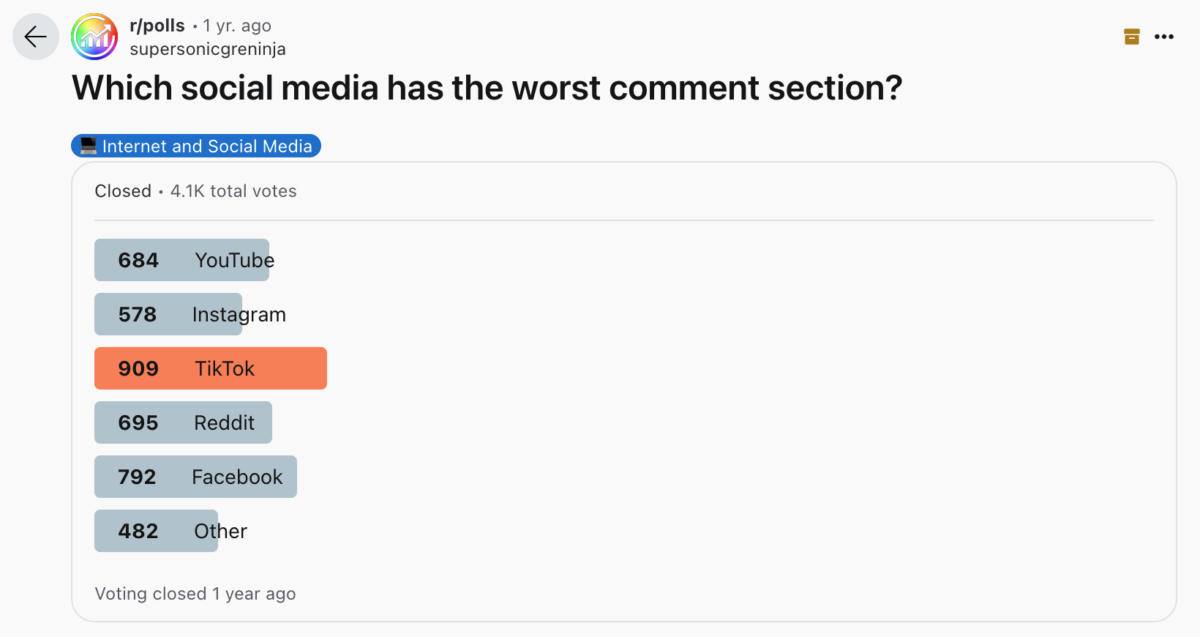

Comment sections

Comment sections are not chats in the real sense of the word, but they do function as a way to send messages, and they can go bad quickly if left unsupervised. In 2014, the biggest newspaper in Sweden, Aftonbladet, closed its comment section forever (officially for technical reasons rather than toxic content, though an educated guess is that this decision made many worried people sleep better at night). Many newspapers have followed since.

And since all the major news sites already share their news on Facebook, people have turned their focus to the comment sections under those Facebook posts instead. Truth be told, closing the newspapers’ own comment sections only moved the toxicity from one platform to another. Anyone who spends time on Facebook can testify to the tone in some, if not most, comment sections.

According to a poll on Reddit, TikTok has the worst comment section, followed by Facebook and YouTube in third place. Not surprisingly, many people are drawn to reading negative comment sections, which is detrimental to our mental health. Numerous individuals have reported adverse effects from this exposure, including depression, increased anxiety, and reduced attention spans.

According to a study from UGA, reading online comments can also impact people’s perceptions – perhaps good news for some politicians but bad news for anyone trying to avoid fake news.

Your company reputation at risk

In the case of Omegle, it is clear how online chats can be the downfall of a company’s reputation. But what about companies where chat is not the main purpose? What about companies allowing other user-generated content, such as reviews and forums? These formats have a lot in common with online chats in how they can affect a company’s reputation: wherever there is user-generated content, toxic content can thrive.

As BusinessWire suggests, nearly 50% of Americans say they lose trust in brands if exposed to fake or toxic user-generated content on their channels. The most commonly encountered unwanted content comprises spam (61%), fake reviews and testimonials (61%), and inappropriate or harmful images (48%).

Conversely, when a brand engages with a customer online (e.g., liking a social media post or responding to a review/comment), it can positively affect their relationship with that brand. Over half (53%) stated it makes them more likely to purchase from the company again. In comparison, 45% said it encourages them to post more user-generated content (UGC) and increases the likelihood of recommending the brand.

83% of Americans surveyed indicated they have posted UGC, most frequently images (52%), reviews or testimonials (51%), and comments in forums/social/online communities (44%). As it turns out, brands that employ social listening and community management practices and address unwanted content will have a more substantial chance of positively impacting the online customer experience.

Content moderation in chats

Content moderation involves monitoring and filtering user-generated content on websites, social media platforms, forums, and other online channels. This process includes reviewing, editing, and removing inappropriate or harmful content to ensure a positive user experience. Historically, human content moderators have made these decisions manually, but they are increasingly being supported or replaced by artificial intelligence.

According to Digital Doughnut, a trained human moderator takes around 10 seconds to analyze and moderate a comment in a comment section. That is far too slow for a live chat. AI models, by contrast, can make the same decision in a fraction of a second.

Latency

Having content moderation to ensure your users experience a chat free from harassment and fraud is both good practice and good business. Sure, latency kills, as the gamers say, but that was a different time, and with today’s tech, we can make sure the conversations run smoothly.

On average, our real-time moderation API takes just 71 milliseconds to decide on message content.
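
To put that number in perspective, here is a back-of-the-envelope comparison between the 71 milliseconds above and the roughly 10 seconds per comment cited earlier for a human moderator. It is only a rough sketch: it assumes decisions are made one at a time, around the clock, and it ignores breaks, parallel processing, and network overhead. The function and constant names are made up for this illustration.

```python
# Figures quoted in this article.
HUMAN_SECONDS_PER_COMMENT = 10.0   # trained human moderator, per comment
API_SECONDS_PER_MESSAGE = 0.071    # 71 milliseconds, average per message

SECONDS_PER_HOUR = 3600


def decisions_per_hour(seconds_per_decision: float) -> float:
    """Sequential decisions that fit in one hour at the given pace."""
    return SECONDS_PER_HOUR / seconds_per_decision


human_per_hour = decisions_per_hour(HUMAN_SECONDS_PER_COMMENT)
api_per_hour = decisions_per_hour(API_SECONDS_PER_MESSAGE)
speedup = HUMAN_SECONDS_PER_COMMENT / API_SECONDS_PER_MESSAGE

print(f"Human moderator: {human_per_hour:.0f} decisions per hour")
print(f"Automated check: {api_per_hour:.0f} decisions per hour")
print(f"Roughly {speedup:.0f} times faster per decision")
```

Under these simplified assumptions, a single human moderator handles 360 comments per hour, while the automated check gets through about 50,700 messages in the same time, or roughly 141 times as many.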

By combining technology and human moderators, you can maintain a healthier digital environment free from toxic content such as spam, hate speech, fake news, explicit material, and other undesirable content.
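
One common way to combine the two is to let software handle the clear-cut cases and send only the uncertain ones to a person. The sketch below illustrates that idea and nothing more. It is not our product’s API: the phrase list, the weights, the thresholds, and every name in it are hypothetical, and a real system would use a trained model rather than phrase matching.

```python
from dataclasses import dataclass

# Hypothetical phrases with made-up risk weights, for illustration only.
RISKY_PHRASES = {
    "free money": 0.6,
    "click here": 0.5,
    "idiot": 0.7,
}


@dataclass
class Decision:
    action: str   # "approve", "review" (send to a human), or "reject"
    score: float  # risk score between 0.0 and 1.0


def risk_score(message: str) -> float:
    """Add up the weights of all risky phrases found, capped at 1.0."""
    text = message.lower()
    total = sum(weight for phrase, weight in RISKY_PHRASES.items() if phrase in text)
    return min(total, 1.0)


def moderate(message: str, approve_below: float = 0.3, reject_at: float = 0.9) -> Decision:
    """Approve low-risk messages, reject high-risk ones, and queue the rest for a human."""
    score = risk_score(message)
    if score >= reject_at:
        return Decision("reject", score)
    if score < approve_below:
        return Decision("approve", score)
    return Decision("review", score)


for text in ["Hello, how are you?", "You idiot", "Free money, click here!"]:
    print(text, "->", moderate(text).action)
```

With these made-up weights, the greeting is approved, the insult lands in the human review queue, and the spam message is rejected outright. Moving the two thresholds changes how much work ends up with human moderators.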

Content moderation protects brands from potential legal issues and safeguards their reputation. Today, when information spreads faster than ever, effective content moderation practices are crucial for businesses aiming to build trust with their audience while reducing the risks associated with harmful online behavior.

We have a blog post about different content moderation methods. It will help you understand how content moderation can benefit your specific brand and business. Better yet, just send us a message, and we’ll chat about it—no strings attached. And no latency, either.

Final thoughts

Effective content moderation is not just a necessity but a cornerstone for maintaining trust, safety, and positive user experiences in the digital world. By learning from past mistakes and implementing robust moderation practices, we can ensure that online chats continue to serve as a valuable tool for communication, connection, and community building instead of showcasing the worst sides of humans.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.