A better dating experience with the power of content moderation

Keep your dating app safe and clean with content moderation. It protects users from harmful or inappropriate content and creates a positive environment for meaningful connections.

Let Besedo handle inappropriate content so you can continue to do what you do best: connect people who want to fall in love.

“We have optimized inappropriate content removal, reducing support requests by 80%.”

Ozan Yerli, Founder and CEO of Connected2.me

Flirt Safely. Date Happily!

Roll up, roll up to the grand carnival of digital love! As a dating app maestro, you orchestrate a glorious symphony of love stories where the laughter rings louder than the ding of scam alerts.

Content moderation for dating sites and apps involves screening user-shared images, videos, texts, and audio.

Let’s keep the digital dating sphere a place for butterflies in the stomach, not knots of concern.

“We completed the implementation within two days. Our users told us they noticed a difference even on our first day with Besedo.”

— Ozan Yerli, CEO and Founder

Active love – Active safety

Protecting user data and fostering respectful interactions are key to any dating platform’s success. Besedo uses machine learning models and filters to identify, flag, and remove harmful content and profiles instantly.

Choose from pre-built filters or train your own Besedo AI to detect unwanted users and behaviors. Detect nudity, remove hate speech, flag underage users, and much more.


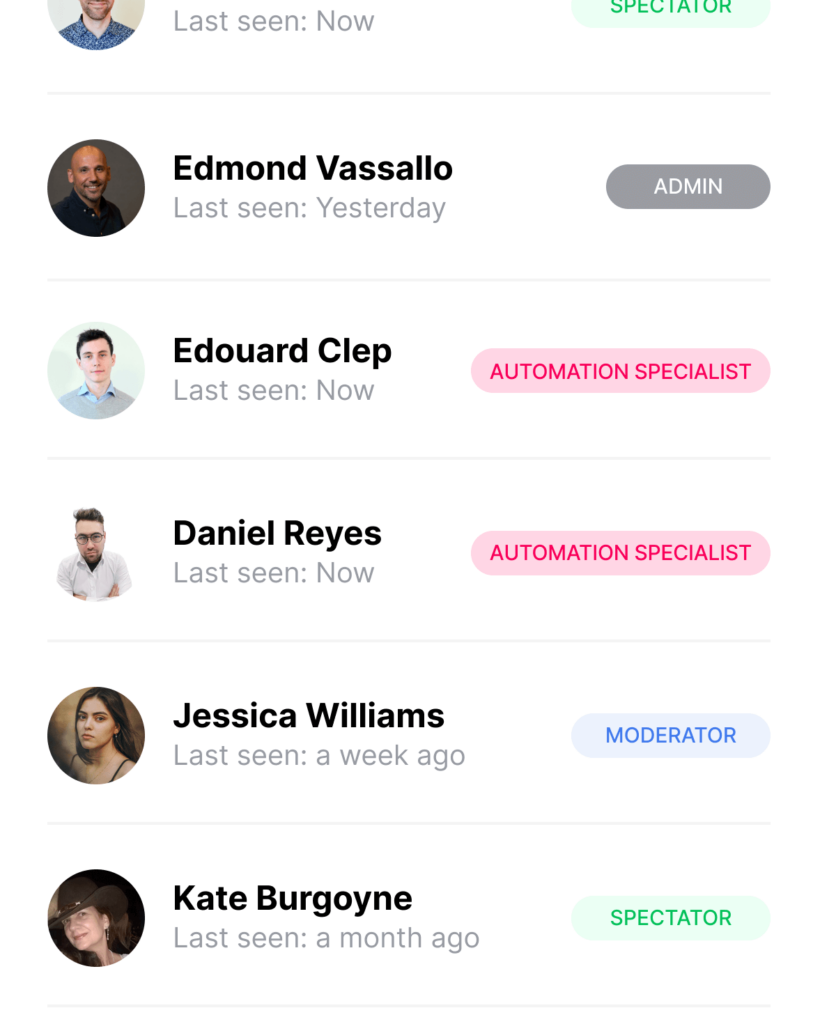

Teamwork makes the dream work

Set up individual accounts for your entire team so everyone can collaborate. Forget tinfoil hats – we’re in the business of no-nonsense security.

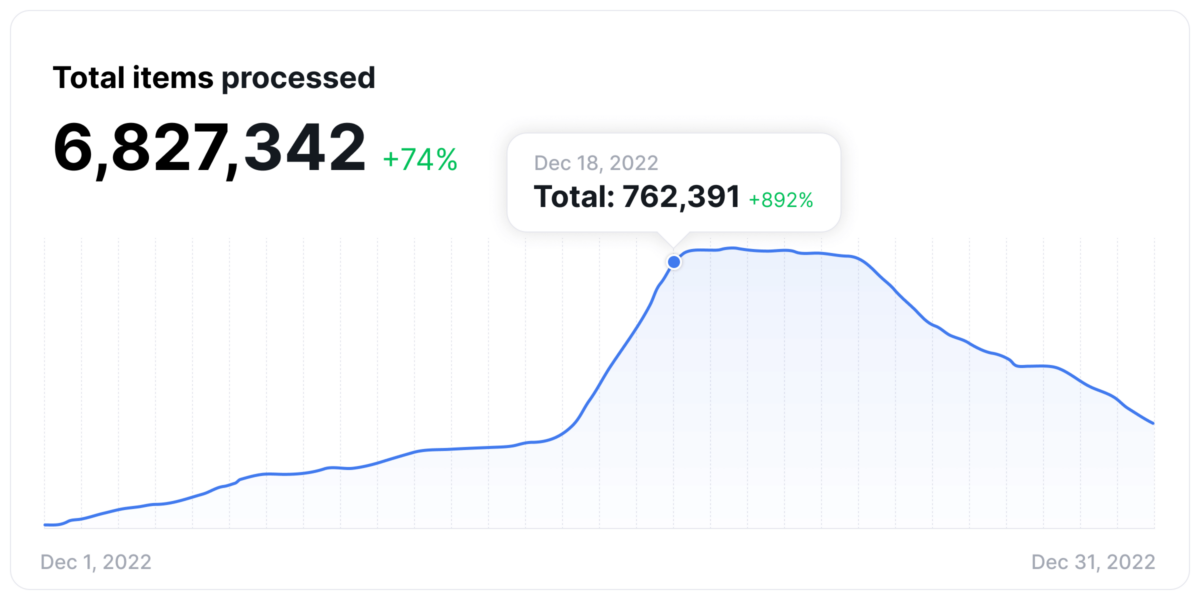

Content moderation that scales with you

Besedo scales effortlessly with traffic spikes on your dating app, so you can handle even the busiest periods.

No need to worry about staffing to keep your platform moderated in real time.

Everything is taken care of.

Trusted by some of the world’s biggest dating apps

Content moderation without killing the mood

We want content moderation to enhance your users’ experience and help them find their special someone more easily. Remove fake profiles and ensure everyone can chat fearlessly and fall in love.

Eliminate nudity and prostitution

Detect inappropriate content and solicitation before they reach your users. We can also differentiate between explicit and suggestive content.

Detect bullying and harassment

Use real-time content moderation to protect users while balancing freedom of expression with safety. Your app should be safe and enjoyable for all.

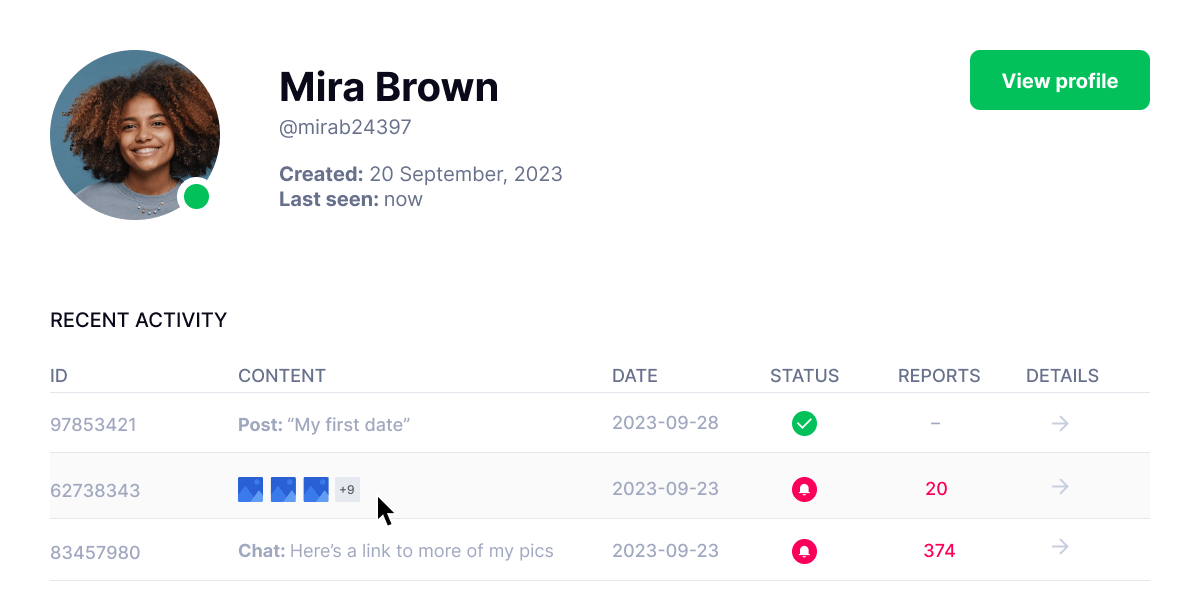

Prevent fake or underage profiles

Catch fake profiles and catfishing attempts. Protect both minors and adults, comply with legal regulations, and secure your platform.

Case study: Connected2.me optimized inappropriate content removal, reducing support requests by 80%

Learn how Besedo’s content moderation helped Connected2.me reduce support requests by 80%, boosting accuracy, user trust, and overall user experience.

Talk to our experts

- Powerful customized solutions combining AI and human moderators

- Unrivalled innovation based on over 20 years of experience

- Global support and multilingual moderation

- Easy integration through a RESTful HTTP API

- User-friendly and powerful control panel