
There’s a big internet regulation moving through the American Congress right now. It’s called KOSPA—the Kids Online Safety and Privacy Act—and in the summer of 2024, it passed the Senate with strong bipartisan support. If it becomes law, KOSPA could really shake things up for tech platforms, especially when it comes to how they handle online speech to make the internet safer for minors. Needless to say, it’s a controversial bill with a lot going on.

Now, we know what you’re thinking: “Another internet law? Yawn.” But hold up, because this one could be a real game-changer for the younger crowd and for the platforms that serve them. And honestly, it’s stirring up quite the debate.

TL;DR on KOSPA and COPPA 2.0

- KOSPA (Kids Online Safety and Privacy Act): Aims to make the internet safer for minors.

- COPPA 2.0: An update to the Children’s Online Privacy Protection Act.

Both have strong bipartisan support and are likely to become law soon. Here’s what you need to know:

- Age limit increase: COPPA 2.0 extends protections to users under 17 (the current law covers children under 13).

- Ad restrictions: Say goodbye to targeted ads for minors. This is reminiscent of the Digital Services Act in the EU.

- Platform accountability: Introduces a “duty of care” for online platforms.

The censorship conundrum

Here’s where things get tricky. The broad scope of KOSPA has some serious implications for how you moderate content. The fear is that in an effort to protect kids, platforms might go overboard and start sanitizing content to the point where the richness of the internet’s discourse gets lost.

For trust and safety teams, this means walking a tightrope: How do you create a safer environment for minors without alienating adult users or shutting down important, if controversial, conversations?

It’s a question without easy answers, but it will be at the forefront of your strategy discussions.

Understanding the reach

When considering the impact of KOSPA and COPPA 2.0, it’s important to understand just how significant the under-17 demographic is on major platforms like YouTube, TikTok, and Snapchat. Ask just about anyone in that demographic if they use these apps and, after a big ol’ eye roll, they’ll make it clear that these platforms serve millions of younger users daily.

The proposed legislation aims to protect this vulnerable group. Still, the changes required could have sweeping implications for how content is curated, moderated, and delivered.

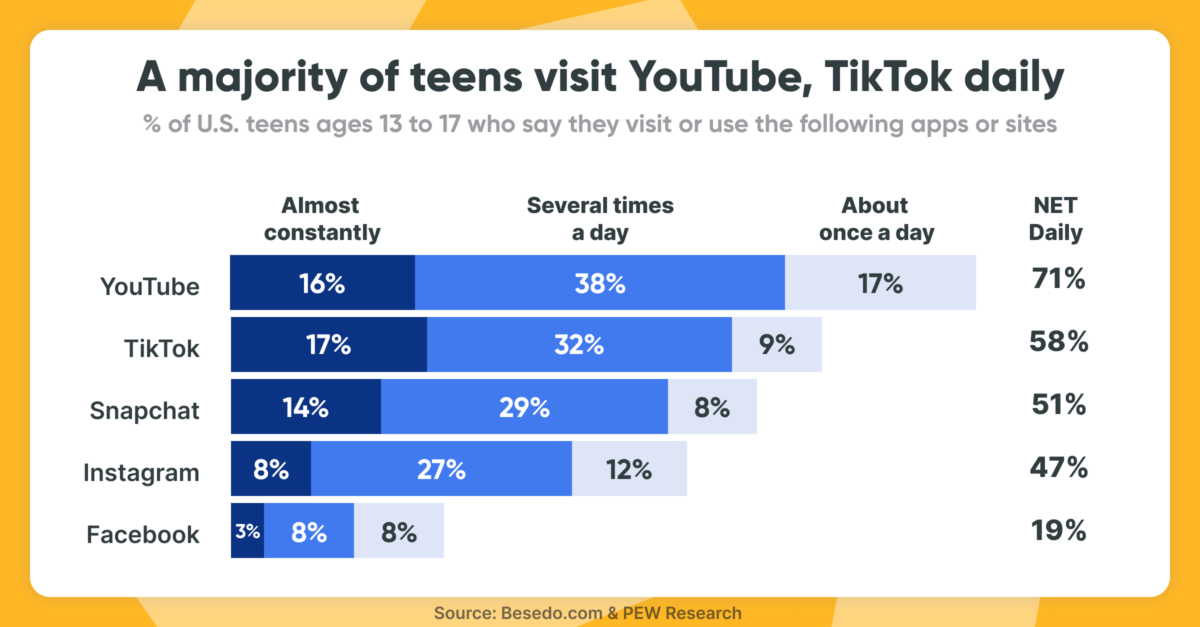

The Pew Research Center published its findings on US teens and their social media habits in late November 2023.

According to the survey, YouTube is the most popular platform, and teens visit it regularly. In fact, around 70% of teens say they use YouTube daily, with 16% admitting they’re on it almost nonstop.

Similarly, TikTok has a large following among teens, with 58% saying they check it daily, including 17% who describe their TikTok habits as nearly constant.

Snapchat and Instagram are also part of many teens’ daily routines, with about half reporting daily use. However, more teens say they’re glued to Snapchat compared to Instagram, with 14% using Snapchat almost constantly versus 8% for Instagram.

Why should platform owners care?

Well, first of all, this isn’t just a “trust and safety” issue; it’s a platform-wide challenge. For platform owners, the implications of KOSPA and COPPA 2.0 go beyond compliance. These laws could reshape user engagement, content strategy, and even your business model.

If you play it too safe, you risk losing what makes your platform valuable to users: a space for diverse, sometimes edgy, but always meaningful interactions. It’s about finding that sweet spot where safety and free expression coexist, without tipping too far in either direction.

Our CEO, Petter Nylander, emphasizes, “These new regulations are not just a hurdle—they’re an opportunity. By leading the way in creating a balanced approach to online safety, we can set the standard for the entire industry, ensuring that our platform remains both safe and engaging for all users.”

Balancing protection and freedom: The pros and cons

As previously mentioned, KOSPA and COPPA 2.0 are designed to enhance the safety and privacy of minors online, but they come with significant trade-offs that must be carefully considered.

Pros: These acts offer much-needed protection for marginalized teenagers, such as LGBTQ+ youth, who are often exposed to harmful content or targeted by predators. By creating stricter regulations and holding platforms accountable, these laws could provide a safer environment for vulnerable groups.

Cons: Critics argue that the broad scope of these regulations could lead to over-censorship, stifling free speech and limiting access to critical resources that marginalized teens rely on for support and community. This tension between protecting young users and preserving the internet’s open nature is at the heart of the debate.

What’s next for Trust & Safety teams?

With bipartisan support in Congress pushing these bills closer to law, the clock is ticking for trust and safety teams to adapt. This isn’t just about reacting to new regulations; it’s about being proactive. Start by revisiting your content moderation policies, investing in advanced age verification technologies that respect privacy, and thinking about how you can keep your community engaged while meeting these new obligations.

Of course, this is all easier said than done. We are happy to discuss how you can achieve this.

Legal challenges are almost a given, especially around First Amendment concerns. It won’t hurt to start a conversation about this today. Make sure you are well-informed and ready to take action and talk to your users.

Also, partnering with a content moderation service like ours can make sense. Hashtag shameless plug.

Final thoughts

This is more than just a compliance issue—it’s a chance to lead the charge in creating a safer, more thoughtful internet for everyone. How are you preparing for KOSPA and COPPA 2.0? What challenges do you foresee, and how will you address them? Drop us a message, and we’ll gladly talk and provide guidance on this, no strings attached.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.
