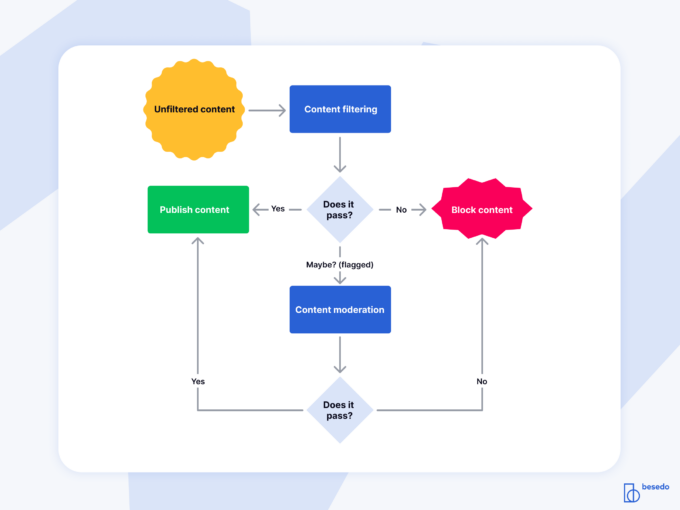

Content Filtering vs Content Moderation: The Key to Scaling

Content filtering is only part of the content moderation process, but it’s an important gatekeeper that allows platforms to scale. Let’s have a closer look.

Moderation challenges for social platforms – An interview with Deborah Lygonis, founder of Friendbase

Coronavirus Scams: How To Protect Your Users

3 ways personal details can damage your marketplace

How To Create Accurate Content Moderation Filters That Work

The man behind our industry’s most accurate filters