Over the past year Facebook has been in the news multiple times regarding their moderation processes.

Due to the social media company’s size and penetration in society, there have been some very public reactions to their content moderation approach whenever it has been revealed. For those of us who rely heavily on professional content moderation processes to ensure as smooth a user experience as possible, these incidents have been great case studies.

So what can we learn from Facebook’s moderation approach and the public backlash we have seen on several occasions during the past year?

Let’s have a look at a couple of noteworthy moderation incidents that caught the public attention.

Managing fake news or letting it manage you?

Last year in late August, Facebook made headlines for firing the team that was curating stories for their trending news module.

They replaced the human team with an AI that was supposed to have learned from the decisions taken by the curators. The change came after accusations that the curating team was not objective in their choices.

But once the human team was replaced, Facebook faced an even worse problem: the algorithm was distributing fake news. The fake news issue grew over the following months and escalated during the US presidential election. Some even blame Facebook’s fake news issue for Donald Trump being elected president.

What we can learn:

AI and machine learning are powerful tools for managing and moderating content, but the algorithms and models need to be very well made to be efficient and, most importantly, accurate. Often the best solution for content moderation is a combination of the two: an expert human team that continuously feeds new data to the AI, so that the algorithms are tweaked, adjusted and evolve in a good way.
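As a rough illustration of that combination, here is a minimal sketch of how automated scoring and human review might be wired together. It assumes a model that returns a risk score between 0 and 1; the thresholds, the `ModerationQueue` class and its method names are all hypothetical and are not taken from Facebook's or any other real system.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real values would be tuned on labelled data.
AUTO_APPROVE_BELOW = 0.10
AUTO_REJECT_ABOVE = 0.95


@dataclass
class ModerationQueue:
    """Routes items by model confidence and collects human decisions."""

    human_review: list = field(default_factory=list)
    training_examples: list = field(default_factory=list)

    def route(self, item_id: str, risk_score: float) -> str:
        """Decide what happens to an item given the model's risk score (0 to 1)."""
        if risk_score < AUTO_APPROVE_BELOW:
            return "approve"
        if risk_score > AUTO_REJECT_ABOVE:
            return "reject"
        # Anything the model is unsure about goes to a human moderator.
        self.human_review.append(item_id)
        return "human_review"

    def record_human_decision(self, item_id: str, decision: str) -> None:
        """Store the moderator's decision as a labelled example for retraining."""
        self.human_review.remove(item_id)
        self.training_examples.append((item_id, decision))


queue = ModerationQueue()
print(queue.route("post-1", 0.02))  # clearly fine, handled automatically
print(queue.route("post-2", 0.50))  # uncertain, sent to a moderator
queue.record_human_decision("post-2", "reject")
```

The point of the sketch is the feedback loop: only the uncertain middle band reaches the human team, and every decision they make becomes new data for the next round of model adjustments.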

Censoring the public or protecting users?

In the autumn, Facebook faced a very public debate over its moderation processes when it removed the award-winning Vietnam War picture “Napalm Girl”, posted on the platform by Norwegian writer Tom Egeland. This led to a back-and-forth dispute with Norway’s largest newspaper, Aftenposten, and to a load of critical news coverage globally.

In the end Facebook had to issue an apology, allow the picture on their platform and amend their guidelines.

What we can learn:

Facebook argued throughout the ordeal that they removed the picture based on their moderation guidelines, which do not allow nudity, especially not nude children. While this is of course a great baseline moderation rule to have in place, the incident showed that grey area cases often need to be discussed and decided on a case-by-case basis.

When you set up moderation guidelines, make sure you also give your team direction on what to do with grey area cases. It is also incredibly important to establish good internal communication so moderators can easily escalate cases that fall outside the norm. While you might not be able to completely eliminate questionable decisions, you can at least limit them, and if you follow the above you can show that decisions on grey area content are taken with great care and reflection.

Viral or violent videos?

In April 2016 Facebook launched its Live feature, allowing people to more easily create and share videos directly through the social media platform. While the feature was quickly adopted by Facebook’s user base and interest in Live continues to rise, it has also caused a number of issues for Facebook. Most damaging to their brand has been the number of violent videos that suddenly flooded the platform and spread virally. In the end Facebook was forced to acknowledge the issue and hired a team of moderators to deal with it. But it took almost a year before the tech giant bent to public demand, and a lot of the bad PR in that time could have been avoided with a quicker response.

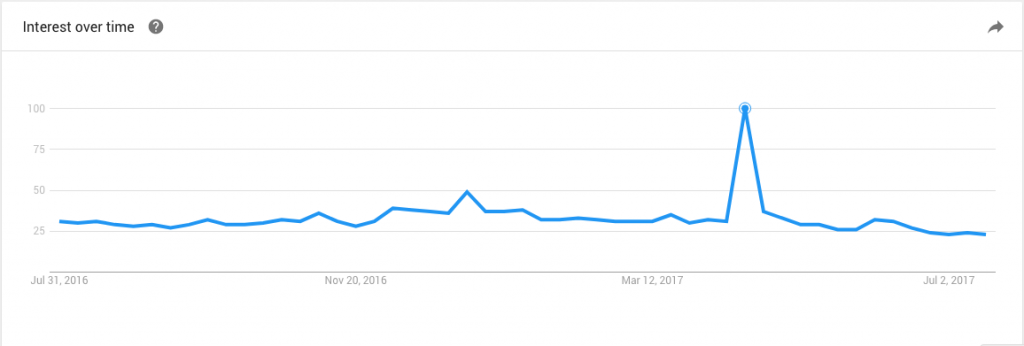

Graph showing the spike in Google searches for Facebook Live after the Cleveland shooting on April 16th, 2017

What we can learn:

It has been argued that the Facebook Live feature was rushed because the social media giant was falling behind other social networks in the video field. Whether this is true or not we can’t say, but it is clear that Facebook was not prepared to handle the amount and type of user-generated content that suddenly flooded the platform.

The takeaway for us should be to ensure that we have the workforce and tech in place and ready to scale when launching new features or a new site. This is an important lesson, for startups in particular, as growth can happen rapidly. It is important to put clear processes in place for UGC moderation when volumes can no longer be managed by the founders. Otherwise, a unicorn dream can quickly turn into a PR nightmare.

Leaked moderation guidelines.

You have probably seen that Facebook’s content moderation guidelines were ‘leaked’ a few weeks back. What quickly became apparent was that unless users decide to show their private parts or threaten to kill Donald Trump, a lot of other material will in fact be ok’d by its in-house moderation team.

Alright… it’s not that simplistic, but as the contradictory nature of some of the moderation rules illustrates, even a platform of Facebook’s magnitude is stumbling around in the dark when it comes to handling user-generated content.

The public reaction to the guidelines was in stark contrast to the Napalm Girl incident, with many calling the rules too lax and condemning Facebook for allowing violent content to go live. Facebook, on the other hand, has claimed that in some cases violent content is relevant when it is part of a discussion around current events. The context of the post matters as well: they allow pictures of non-sexual child abuse as long as the context shows that the post is against child abuse and not glorifying it.

What we can learn:

In the public eye, the path between censorship and an approach that is too lax is narrow, and it shifts on a case-by-case basis depending on how the case is presented in the media. The best way to protect yourself and your business against public outrage is to make sure you have clear, non-biased rules and policies in place for your content moderation.

Make sure that your moderation actions are as consistent as possible.

Also keep an eye on new trends. Previously innocent terms can take on nefarious meanings in the blink of an eye when Internet culture grabs hold of them and twists them to its own purpose.

Putting Facebook moderation learnings to good use.

The main takeaway from the many Facebook moderation incidents over the past year is that moderation expertise, consistency, better internal communication, and having processes and resources in place could have gone a long way towards minimizing the public backlash and the recurring negative media coverage.

Those are valuable lessons to consider when running an online marketplace that relies on user-generated content.
