Imagine one playbook of rules and accountability for online intermediaries that spans the entire EU market, unlocks digital service opportunities across borders, and prioritizes user protection everywhere in the EU. Sounds good, right?

Say hello to the Digital Services Act (DSA): a blueprint for a safer digital world. It sets safety standards for digital platforms, leveling the playing field and giving innovative tech businesses a boost to grow with trust.

Trust and safety on online marketplaces

80% of respondents in a Besedo survey said they have avoided purchasing a product due to concerns about the trustworthiness of a listing.

Is my platform affected by the DSA?

Texts from Brussels are not always worded in a way that is clear to everyone, so we will summarize here. The DSA includes rules for online intermediary services that millions of Europeans use daily, but what on earth does “intermediary services” mean?

Let’s sort this out from top to bottom. At the very top are the best-known platforms; at the bottom is the core infrastructure of the internet.

- Very Large Online Platforms (VLOPs): Online platforms with at least 45 million monthly active users in the EU. More on them below, where we list the designated VLOPs.

- Online platforms: This refers to online marketplaces, sharing economy platforms, app stores, booking sites for travel, and, in general, websites and apps where people can share user-generated content.

- Hosting services: These include your web hosting services and cloud storage services.

- Intermediary services: These are the core services that make internet access possible at all. Examples include internet service providers, DNS providers, and domain name registrars.
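To make the layering concrete, here is a minimal sketch of how you might sort a service into one of the four tiers above. The `Service` fields and the `dsa_tier` function are our own illustrative names, not anything defined by the DSA, and formal VLOP status comes from a designation by the EU Commission rather than from a simple threshold check like this one.

```python
from dataclasses import dataclass

# Threshold named in the list above: 45 million monthly users in the EU.
VLOP_THRESHOLD = 45_000_000


@dataclass
class Service:
    """Hypothetical description of a service; field names are illustrative."""
    name: str
    stores_user_content: bool      # e.g. web hosting, cloud storage
    shares_content_publicly: bool  # e.g. marketplace listings, app stores
    monthly_eu_users: int


def dsa_tier(service: Service) -> str:
    """Return the most specific tier that applies, checked from top to bottom."""
    if service.shares_content_publicly:
        if service.monthly_eu_users >= VLOP_THRESHOLD:
            return "very large online platform"
        return "online platform"
    if service.stores_user_content:
        return "hosting service"
    return "intermediary service"
```

For example, `dsa_tier(Service("Example Market", True, True, 2_000_000))` returns `"online platform"`. Treat the result as a starting point for a conversation with your legal team, not as a legal classification.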

In April 2023, the EU Commission designated the first 19 services: 17 Very Large Online Platforms (VLOPs) and 2 Very Large Online Search Engines (VLOSEs).

VLOPs

- AliExpress

- Amazon Store

- Apple AppStore

- Booking

- Facebook

- Google Maps

- Google Play

- Google Shopping

- Instagram

- LinkedIn

- Pinterest

- Snapchat

- TikTok

- Twitter (now X)

- Wikipedia

- YouTube

- Zalando

VLOSEs

- Google Search

- Bing

The platforms were designated based on the user data they had to publish by February 17, 2023.

Innovative online platforms scaling up in the EU

10,000+ platforms operating in the EU.

90% of those are small and medium-sized enterprises.

What does the DSA require?

This is what the DSA means for you and your platform:

- Rights for users: Users need to understand why content is recommended to them, with the freedom to say no to profile-based suggestions.

- Reporting tools: Users need to be able to easily report illegal content and trust platforms to take swift action.

- Limits on ad targeting: Sensitive data (like ethnicity, politics, or sexual preferences) is off-limits for ad targeting.

- Every ad needs to come with a clear label and the source of who’s behind it.

- Targeted advertising based on profiling towards children is not permitted.

- Easy-to-understand terms of service: You must write your terms of service in plain language, in every EU language in which you offer your service.
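The advertising rules in the list above lend themselves to an automated pre-flight check. The sketch below is one way to express them; the function name, its parameters, and the category labels in `SENSITIVE_CATEGORIES` are assumptions made for illustration and would need to match your own ad data model.

```python
from typing import List, Set

# Hypothetical labels for the sensitive data types named in the list above.
SENSITIVE_CATEGORIES = {"ethnicity", "political_opinion", "sexual_orientation"}


def ad_problems(
    targeting: Set[str],
    uses_profiling: bool,
    viewer_is_minor: bool,
    labeled_as_ad: bool,
    advertiser_name: str,
) -> List[str]:
    """Return a list of rule violations; an empty list means no issue was found."""
    problems = []
    used_sensitive = sorted(targeting & SENSITIVE_CATEGORIES)
    if used_sensitive:
        problems.append("targets sensitive data: " + ", ".join(used_sensitive))
    if viewer_is_minor and uses_profiling:
        problems.append("profiling-based targeting of a minor")
    if not labeled_as_ad:
        problems.append("missing ad label")
    if not advertiser_name.strip():
        problems.append("missing advertiser name")
    return problems
```

Returning every problem at once, rather than stopping at the first, lets an advertiser fix a rejected ad in a single round.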

How will the DSA impact online platforms?

All intermediary services, hosting services, and online platforms must meet the obligations outlined in the DSA and the table below by February 17, 2024:

| Obligations | Intermediary Services | Hosting Services | Online Platforms | Very Large Online Platforms |
|---|---|---|---|---|
| Transparency reporting | ✔ | ✔ | ✔ | ✔ |
| Terms of service obligations | ✔ | ✔ | ✔ | ✔ |
| Points of contact and legal representative (where necessary) | ✔ | ✔ | ✔ | ✔ |
| Notice & action and obligation to provide information to users | | ✔ | ✔ | ✔ |
| Reporting criminal offenses | | ✔ | ✔ | ✔ |
| Complaint and redress mechanisms | | | ✔ | ✔ |
| Out-of-court dispute settlement | | | ✔ | ✔ |
| Trusted flaggers | | | ✔ | ✔ |
| Measures against abusive notices and counter-notices | | | ✔ | ✔ |
| Special obligations for marketplaces (e.g., compliance by design) | | | ✔ | ✔ |
| Bans on targeted advertising to children | | | ✔ | ✔ |
| Transparency of recommender systems | | | ✔ | ✔ |
| User-facing transparency of online advertising | | | ✔ | ✔ |
| Risk management obligations and crisis response | | | | ✔ |
| External & independent auditing | | | | ✔ |
| User choice not to have recommendations based on profiling | | | | ✔ |
| Data sharing with authorities and researchers | | | | ✔ |
| Codes of conduct | | | | ✔ |
| Crisis response cooperation | | | | ✔ |
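If you want to keep such a table in code, one option is to record the lowest tier at which each obligation starts to apply. The sketch below assumes that obligations accumulate, so each tier inherits everything required of the tier beneath it and VLOPs carry the full set. The names `TIERS`, `APPLIES_FROM`, and `obligations_for` are ours, and only a small subset of rows is shown.

```python
from typing import List

# Tiers ordered from the broadest category to the most heavily regulated one.
TIERS = [
    "intermediary service",
    "hosting service",
    "online platform",
    "very large online platform",
]

# Lowest tier at which each obligation starts to apply (illustrative subset,
# under the assumption that obligations accumulate up the tiers).
APPLIES_FROM = {
    "Terms of service obligations": "intermediary service",
    "Reporting criminal offenses": "hosting service",
    "Trusted flaggers": "online platform",
    "External & independent auditing": "very large online platform",
}


def obligations_for(tier: str) -> List[str]:
    """List every obligation that applies at the given tier."""
    rank = TIERS.index(tier)
    return [
        name
        for name, start in APPLIES_FROM.items()
        if TIERS.index(start) <= rank
    ]
```

Calling `obligations_for("hosting service")` returns the two obligations that start at or below the hosting tier, while the VLOP tier returns all four.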

What is the penalty for not complying with the DSA?

The Digital Services Act entered into force on November 16, 2022, and applies to all intermediary services from February 17, 2024. Designated VLOPs and VLOSEs had to comply sooner, within four months of their designation. Businesses breaching the DSA could face fines of up to 6% of their global annual turnover.
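To get a feel for the size of that exposure, here is a small worked calculation of the 6% ceiling. The function name and the turnover figure are hypothetical, and the result is an upper bound rather than a prediction of any actual fine.

```python
# Ceiling named above: fines of up to 6% of global turnover.
MAX_FINE_PERCENT = 6


def max_dsa_fine(global_turnover_eur: int) -> int:
    """Upper bound of a fine in whole euros, using integer arithmetic."""
    if global_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    return global_turnover_eur * MAX_FINE_PERCENT // 100


# A hypothetical platform with EUR 2 billion in global turnover.
print(max_dsa_fine(2_000_000_000))  # prints 120000000
```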

The DSA and content moderation

There is a need for more diligent content moderation and less disinformation. As content moderators, we understand that this is overwhelming, but there is a lot Besedo can help you with.

- Make it easy to report: Businesses must tackle the spread of illegal content and protect freedom of expression. Implement a feature for users to flag illegal content, ensuring swift platform action.

- Risk management: You need to analyze your specific risks and implement mitigation measures – for instance, to address the spread of disinformation and inauthentic use of your service.

- Localization: Under the Digital Services Act, digital platforms are tasked with moderating content to fit the unique laws and linguistic nuances of each of the 27 EU nations. Besedo’s multilingual AI offers an immediate solution, streamlining the path to compliance by handling localization necessities.

- The right to appeal: Online platforms must let users contest content decisions. When a user's content is removed, the user has six months to make their case.
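The six-month appeal window mentioned above is easy to get wrong around month ends, so here is a minimal sketch of one way to compute it. We assume the window starts on the day of the decision and clamp to the last day of the month when the target month is shorter; `add_months`, `appeal_deadline`, and `can_appeal` are illustrative names, and how the period is counted in practice is a question for your legal team.

```python
import calendar
from datetime import date

APPEAL_WINDOW_MONTHS = 6


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the target month's length."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def appeal_deadline(decision_date: date) -> date:
    """Last day on which the user may contest the decision (assumed inclusive)."""
    return add_months(decision_date, APPEAL_WINDOW_MONTHS)


def can_appeal(decision_date: date, today: date) -> bool:
    return decision_date <= today <= appeal_deadline(decision_date)
```

Under these assumptions, a decision made on August 31, 2024 can be contested until February 28, 2025.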

How can my business become DSA-compliant?

There is no “one size fits all” solution for trust & safety—every online platform is unique, with its own goals and audience to match.

Besedo offers customizable options for tailoring content moderation to optimize your workflow and comply with DSA requirements.

While we are sure your Trust & Safety team is shifting in their seats, let’s look at what we will do together.

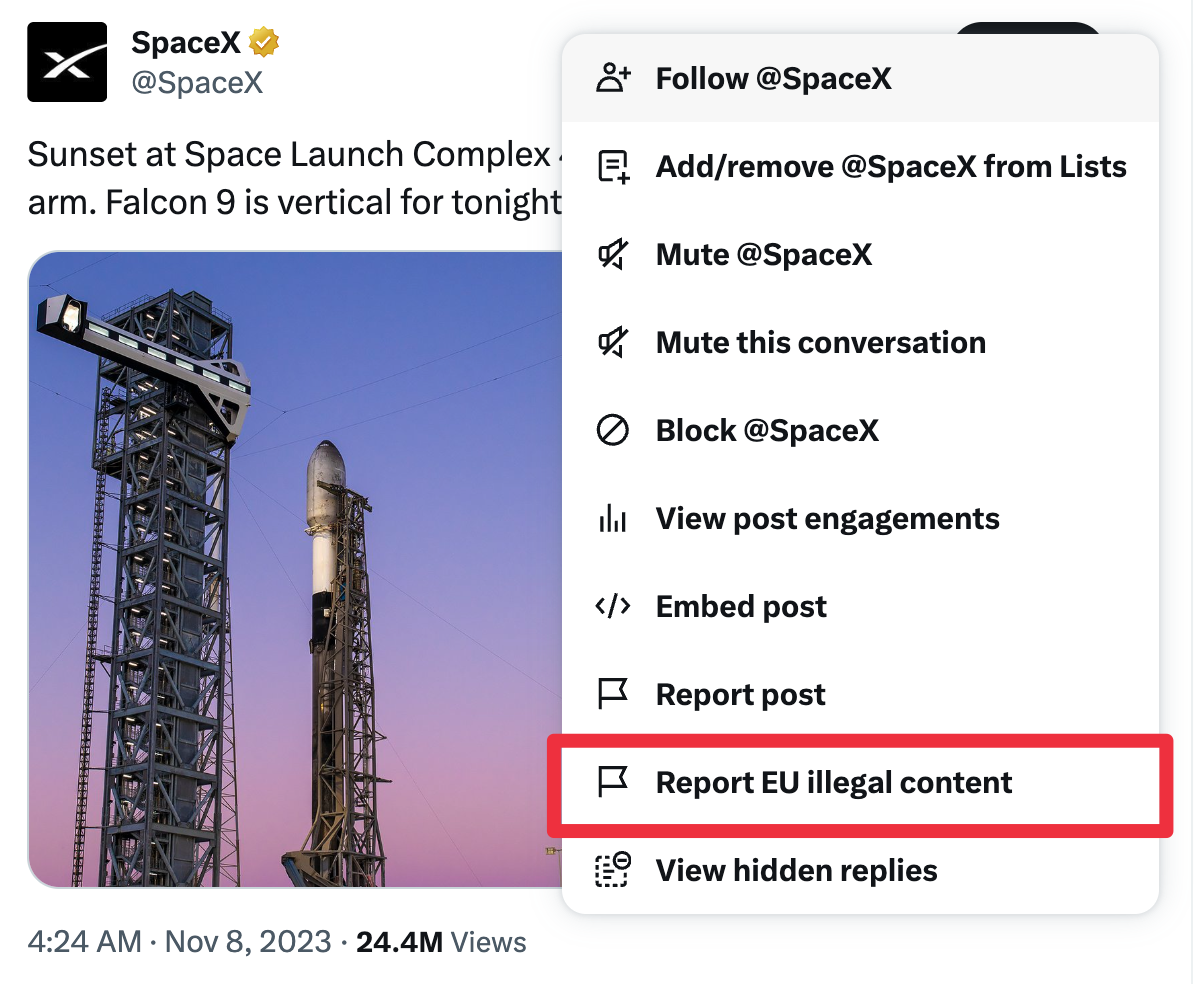

Easier reporting of illegal content

The DSA requires platforms to implement measures to counter illegal goods, services, or content online, such as a mechanism for users to flag such content.

Besedo gives online platforms the foundation and tools to create a user-friendly way to report illegal content. There is also a feature to inform infringing users about violations and reasons for removing their content.

If you’re paying close attention, you may have noticed that X, formerly Twitter, has a new functionality for reporting illegal content.

X is not the only platform implementing new and easy-to-use options to flag illicit content. For example, Apple, Pinterest, Facebook, Instagram, and TikTok provide new and easy ways to report illegal content.
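Behind any reporting button sits a small data record and a validation step. The sketch below shows one possible shape for it. The `ContentReport` fields and the `validate_report` function are hypothetical and do not describe Besedo's product or any platform's actual API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class ContentReport:
    """Hypothetical record of a user flagging content as illegal."""
    content_url: str
    explanation: str
    reporter_email: str = ""  # optional, used to confirm receipt
    received_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def validate_report(report: ContentReport) -> List[str]:
    """Return the fields that still need input before the report is accepted."""
    errors = []
    if not report.content_url.strip():
        errors.append("content_url is required")
    if not report.explanation.strip():
        errors.append("explanation is required")
    return errors
```

Keeping the required fields to a minimum is a deliberate choice here: the easier the form, the more likely users are to flag what they see.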

Greater transparency in content moderation and options to appeal

We understand that your digital platform is more than just a space: it is where your users’ ideas take flight, connections are made, and businesses thrive. Removing content without explanation can frustrate users and backfire on your brand.

The new Digital Services Act (DSA) requires platforms to tell users exactly why their content was removed or why their account was restricted.

We help you give your users a clear explanation, or a ‘statement of reasons’, for your actions.

Even platforms like Facebook and Instagram, which already explain their content rules, will have to cover more ground with these explanations. Plus, the EU Commission has kicked off the DSA Transparency Database to keep things transparent.

There is much to admire with this tool; it lets everyone check out the reasons behind platforms’ content decisions.
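A statement of reasons is, at its core, a structured message to the affected user. The sketch below shows one way to model it. The field names and wording are our own illustration and do not follow the schema of the DSA Transparency Database or any official template.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class StatementOfReasons:
    """Hypothetical record explaining a moderation decision to a user."""
    content_id: str
    action: str          # e.g. "removal" or "account restriction"
    reason: str
    decided_on: date
    automated: bool = False

    def to_message(self) -> str:
        """Render the decision as a plain-language notice for the user."""
        method = "automated review" if self.automated else "human review"
        return (
            f"On {self.decided_on.isoformat()} we applied '{self.action}' to "
            f"{self.content_id} after {method}. Reason: {self.reason}"
        )
```

Storing the decision as data first and rendering the message second makes it simpler to show the same explanation in a user inbox, an appeal form, or a transparency report.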

Zero tolerance on targeting ads to children and teens

The DSA bans advertising targeted at minors on the basis of profiling. Platforms have been reacting to this obligation: for example, Snapchat, Alphabet’s Google and YouTube, and Meta’s Instagram and Facebook do not allow advertisers to show targeted ads to underage users. TikTok and YouTube now also set the accounts of users under 16 years old to private by default.

Summary

As a platform owner, you must pivot to comply with the Digital Services Act by February 17, 2024. Ensure users can navigate content recommendations and report illegal material easily. Prohibit targeted ads to minors and communicate terms of service in plain, multilingual text. Introduce transparent moderation and straightforward appeal mechanisms. Non-compliance could cost you up to 6% of global turnover. See this not as a legal hurdle but as a chance to foster user trust and stand out in the EU digital economy.

A good first step would be to send us a message; we’re happy to discuss whatever challenges you and your business face.
