
Reddit, launched in 2005, has become one of the biggest sites on the internet entirely based on user-generated content. They’re even a public company now. But what kind of numbers are we talking about exactly?

Here are some quick stats for you: Since its inception, Reddit has accumulated over one billion posts and over 16 billion comments from its users (a.k.a. Redditors). They currently have over one hundred thousand active communities (a.k.a. subreddits).

No wonder companies like Google and OpenAI have signed deals with them to train their LLMs with Reddit data, because that’s a lot of content.

As you can imagine, that amount of user-generated content would be a challenge for anyone to effectively moderate in a timely and correct manner, so Reddit employs a layered, three-tiered approach to content moderation that is quite interesting to look at from a scalability standpoint.

But more about that later. First, let’s look at some more numbers from Reddit so we can put things in context.

We’ve dug through shareholder letters, quarterly filings, the S-1 IPO filing (Reddit went public in 2024), transparency reports, and other relevant information to put this together.

How big is Reddit’s user base?

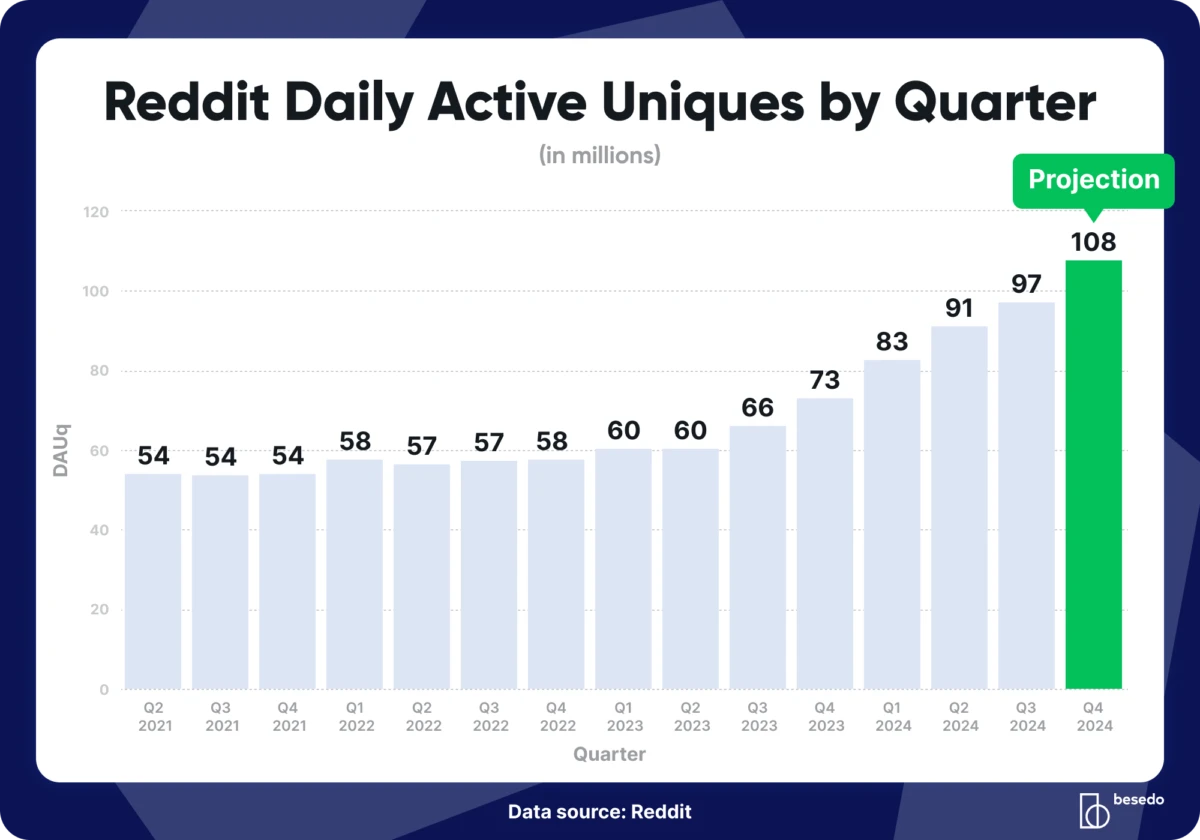

Reddit doesn’t use the term DAU (Daily Active Users), but instead prefers to report numbers using DAUq and WAUq, which stand for Daily Active Uniques and Weekly Active Uniques respectively. These numbers cover all unique visitors to the site (and Reddit’s app), and not just logged-in users.

The latest reported user number from Reddit is from their quarterly filing for Q3 2024, which was an average of 97.2 million DAUq. On some individual days, the number of DAUq peaked at over 100 million.

How does this compare to previous years? We combined a few different reports from Reddit to get data all the way back to 2021 for some perspective. For fun, we also added a rough projection for Q4 2024 based on the year-over-year user growth in Q3.

(Reddit’s next quarterly report will give us the real Q4 number, but that’s not until February 12, 2025. We’ll see how close our projection got then.)

If you look closely at the above chart, it becomes pretty obvious that the user growth rate increased in the second half of 2023. We have a pretty good idea why, and we have an aside about that later in this article where we discuss Reddit’s growth engines.

Some other numbers about the Reddit user base:

- Weekly Active Uniques averaged 365.4 million in the third quarter of 2024.

- About half of Reddit’s users are in the United States. The rest are classified as international users from Reddit’s perspective. This split seems to have been relatively consistent over the last few years, as far as we could see. It’s likely that the international segment will grow faster in the future considering Reddit’s ongoing content translation initiatives (more on that later).

- About half of Reddit’s daily active uniques are logged-in users, as opposed to other site visitors and users who aren’t logged in. So if you are looking for Reddit’s equivalent DAU count (instead of DAUq) it should be around half of DAUq.
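The arithmetic behind that last point is simple enough to sketch. This is only a rough illustration: it treats "about half" as exactly 0.5 (our assumption, not a figure Reddit reports), and the variable names are ours.

```python
# Figures reported for Q3 2024, in millions.
DAUQ_MILLIONS = 97.2
WAUQ_MILLIONS = 365.4

# Assumption: "about half" is treated as exactly 0.5 for illustration.
LOGGED_IN_SHARE = 0.5


def estimate_logged_in_dau(dauq_millions: float, logged_in_share: float) -> float:
    """Estimate logged-in daily users (in millions) from DAUq."""
    return dauq_millions * logged_in_share


logged_in_dau = estimate_logged_in_dau(DAUQ_MILLIONS, LOGGED_IN_SHARE)
weekly_to_daily = WAUQ_MILLIONS / DAUQ_MILLIONS

print(f"Estimated logged-in DAU: {logged_in_dau:.1f} million")
print(f"WAUq / DAUq ratio: {weekly_to_daily:.2f}")
```

The weekly-to-daily ratio is a handy side product: it gives a rough sense of how many of the weekly visitors show up on any given day.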

As you can imagine, these users produce a lot of user-generated content.

How much user-generated content is on Reddit?

Reddit is a “community of communities,” each with a forum called a subreddit. And there are a lot of them, over 100 thousand active subreddits as of this writing and hundreds of millions of posts each year.

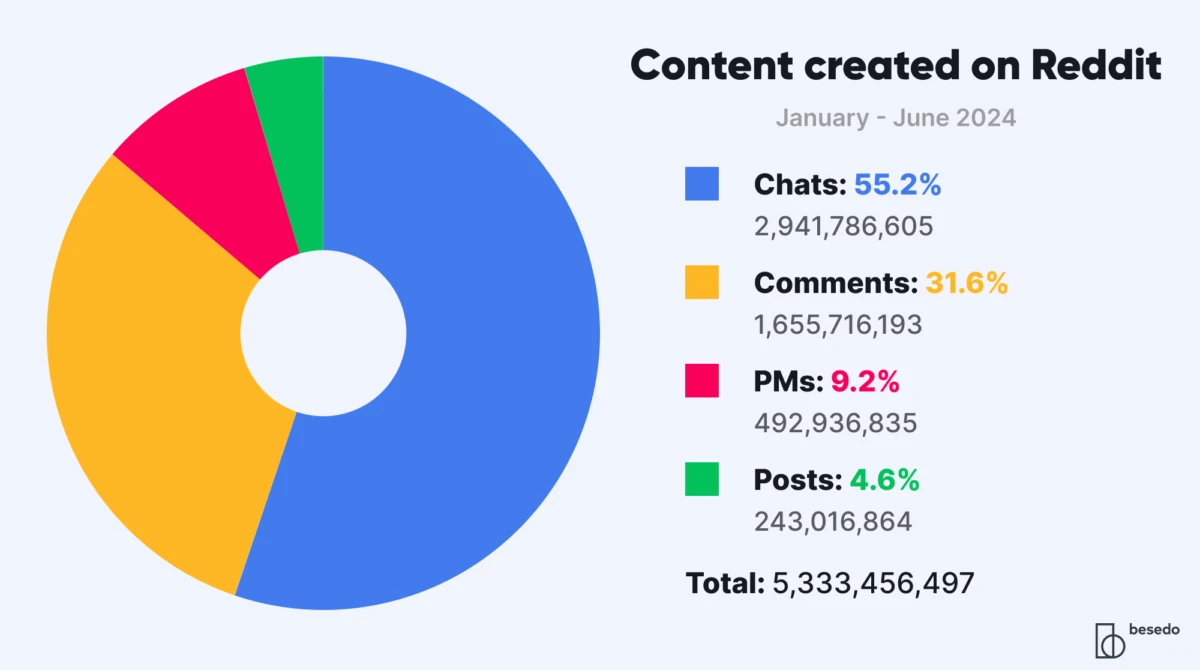

Reddit has four categories of user-generated content (UGC) in its reporting:

- Posts

- Comments

- Private messages (PMs)

- Chats

(Fun aside: Reddit didn’t initially have comments on posts. When Reddit added comments, the very first comment was that comments would ruin Reddit.)

In terms of volume, chat messages account for over half of the UGC on Reddit, followed by comments (on posts) at around one third. Private messages come next, at roughly double the volume of posts, which make up less than five percent.

That’s the most recently reported distribution from Reddit’s transparency reports, which cover the first half of 2024. A total of over 5.3 billion pieces of content was created by their users in that period.

That number includes:

- 243 million posts

- 1.66 billion comments

- 493 million private messages

- 2.94 billion chat messages

Not bad for just six months. It also highlights how significant the community aspect is for Reddit, with so many post comments and chat messages. Lots of discussions going on.
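If you want to check the distribution yourself, the shares follow directly from the four counts listed above. Here is a minimal sketch using those rounded figures, so the total lands slightly above the "over 5.3 billion" headline number.

```python
# Counts reported for the first half of 2024 (rounded, as listed above).
ugc_counts = {
    "posts": 243_000_000,
    "comments": 1_660_000_000,
    "private messages": 493_000_000,
    "chat messages": 2_940_000_000,
}

total = sum(ugc_counts.values())
shares = {kind: count / total for kind, count in ugc_counts.items()}

# Print the largest category first.
for kind, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{kind:>16}: {share:6.1%}")
print(f"{'total':>16}: {total / 1e9:.2f} billion")
```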

Compared to the same period in 2023 (i.e. year over year), the amount of user-generated content created increased by 20.5%.

How Reddit content is moderated

As with any online platform with a lot of user-generated content, there needs to be active and continuous content moderation or else everything will soon turn into A Complete Mess (a highly technical term, that). Being proactive is key.

Reddit’s moderation model

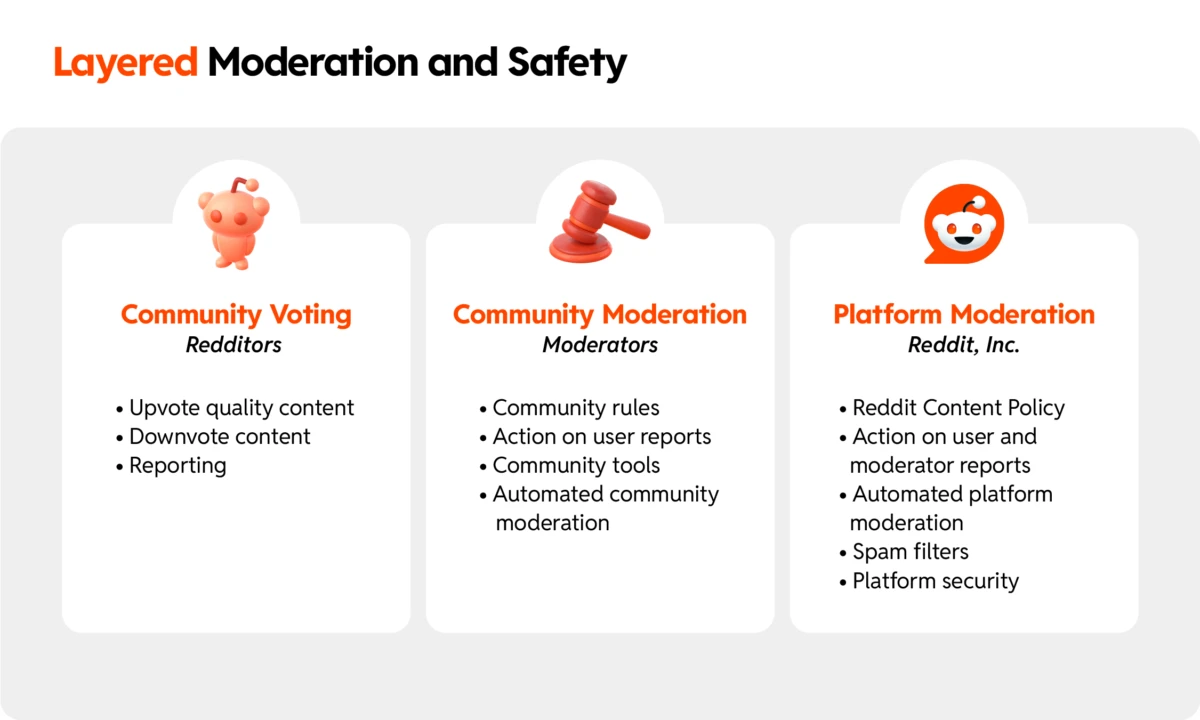

Reddit uses a three-tiered approach to content moderation that leverages its community in combination with automation and moderation tooling.

- Reddit’s platform-wide moderation rules. You can find them clearly written out on Reddit’s content policy page.

- Each subreddit’s own community rules, defined and handled by subreddit mods (often the people who created the subreddit).

- Reporting and up/down-voting by Redditors (the Reddit users of those subreddits).

Each subreddit has its own criteria for what is acceptable, which can be more or less strict, but cannot break Reddit’s overarching rules for content.

This helpful visual from Reddit explains the hierarchy really well:

Reddit’s army of community moderators

According to its S-1 filing, Reddit had a daily average of 60,000 active moderators in December 2023. These are volunteers who help moderate their respective communities (subreddits) on the platform.

Let that number sink in.

Compare that to the total number of Reddit employees, which is around 2,000.

What about enforcement?

In addition to the rules that define what users are allowed and not allowed to do on the platform, Reddit’s content policy also includes how enforcement is handled:

We have a variety of ways of enforcing our rules, including, but not limited to

- Asking you nicely to knock it off

- Asking you less nicely

- Temporary or permanent suspension of accounts

- Removal of privileges from, or adding restrictions to, accounts

- Adding restrictions to Reddit communities, such as adding NSFW tags or Quarantining

- Removal of content

- Banning of Reddit communities

The rules in the content policy are straightforward as well. We give Reddit an A+ for clarity here. They have more in-depth information in their help docs, but this simple approach is great because it’s short, sweet, and to the point.

Reddit’s moderation robot, AutoModerator

To do anything at scale, you need to make automation as easy and powerful as possible. To help community moderators stay on top of things, Reddit has a built-in moderation system called AutoModerator, often just referred to as Automod.

It helps by allowing moderators to set up rules with various checks, filters and actions that trigger automatically when conditions are met. Without a system like this, large subreddits would quickly be overwhelmed.

Most content moderation solutions these days have something along these lines, and it’s a vital part of ensuring that user-generated content (and user interactions, etc.) is kept safe, at least from obvious abuses.
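To make the "checks, filters and actions" idea a bit more concrete, here is a minimal sketch of a rule-based moderation pass. To be clear, this is not AutoModerator's actual configuration format or Reddit's code. The `Submission` and `Rule` types, the rule names, and the thresholds are all hypothetical and only illustrate the general pattern.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Submission:
    author_age_days: int
    title: str
    body: str


@dataclass
class Rule:
    name: str
    check: Callable[[Submission], bool]  # condition that triggers the rule
    action: str                          # e.g. "remove", "filter", "report"


# Hypothetical rules; the patterns and thresholds are placeholders.
RULES = [
    Rule("link spam", lambda s: len(re.findall(r"https?://", s.body)) >= 3, "remove"),
    Rule("new account", lambda s: s.author_age_days < 1, "filter"),
    Rule("shouting title", lambda s: s.title.isupper() and len(s.title) > 10, "report"),
]


def moderate(submission: Submission, rules: list[Rule] = RULES) -> Optional[tuple[str, str]]:
    """Return (rule name, action) for the first matching rule, or None."""
    for rule in rules:
        if rule.check(submission):
            return rule.name, rule.action
    return None


spam = Submission(30, "Great deals", "http://a.example http://b.example https://c.example")
clean = Submission(400, "Weekly discussion thread", "What did everyone read this week?")
print(moderate(spam))   # matched by the "link spam" rule
print(moderate(clean))  # no rule matches
```

The first matching rule wins here, which keeps the behavior predictable. A real system would likely also log every match and allow several actions per rule.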

Reddit content moderation numbers

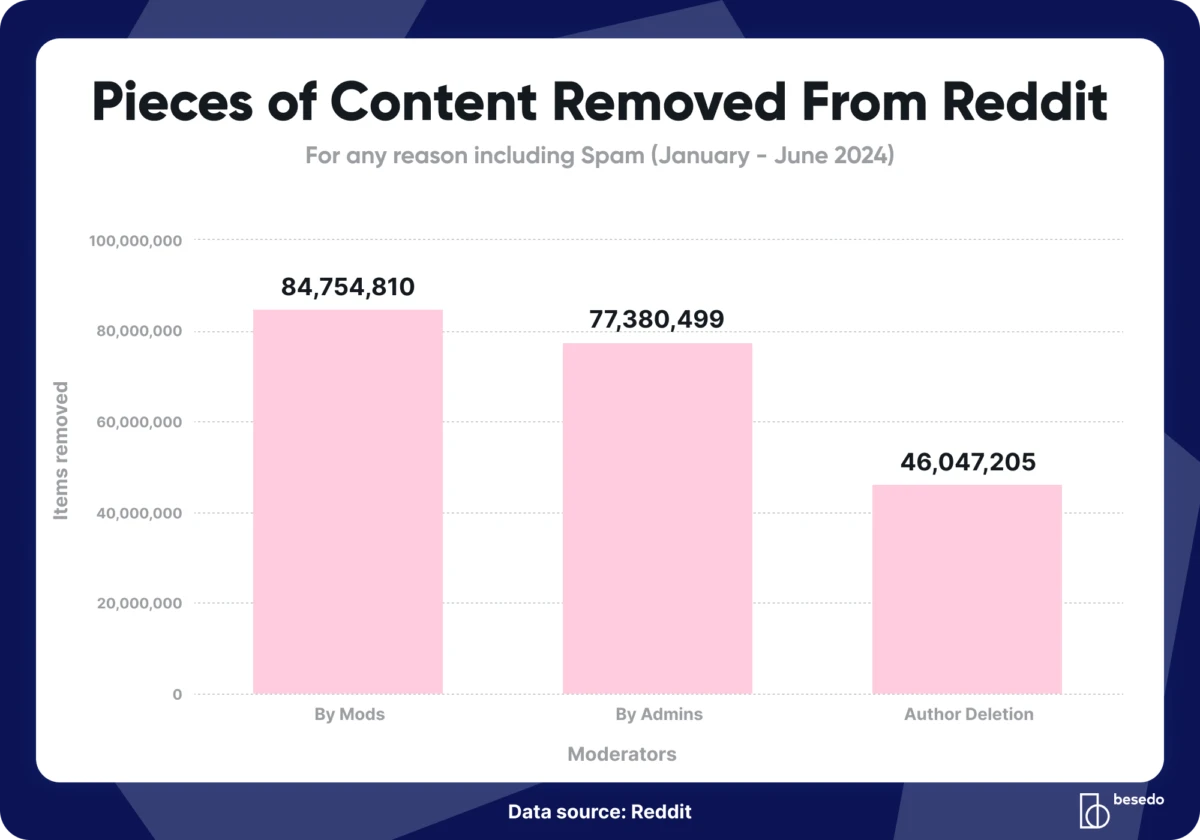

During the first half of 2024, more than 208 million pieces of user-generated content were removed from Reddit. Measured against the 5.3 billion pieces created during that period, that’s just under 4% of all new content.

- Reddit moderators removed 40.7%

- Admins removed 37.2%

- Authors removed 22.1% (after being warned, we suspect)

Spam is the most common content violation, with 66.5% of the removals being spam.
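For a sense of the absolute numbers behind those percentages, here is a quick back-of-the-envelope calculation. It treats "more than 208 million" as a flat 208 million and uses the sum of the rounded content counts reported earlier as the denominator, so the outputs are approximations only.

```python
REMOVED_TOTAL = 208_000_000    # "more than 208 million", used as a floor
CREATED_TOTAL = 5_336_000_000  # sum of the rounded H1 2024 content counts

removed_by_share = {"moderators": 0.407, "admins": 0.372, "authors": 0.221}
SPAM_SHARE = 0.665

# Convert each share of removals into an approximate count.
removed_by_count = {
    who: round(REMOVED_TOTAL * share) for who, share in removed_by_share.items()
}
spam_removals = round(REMOVED_TOTAL * SPAM_SHARE)
removal_rate = REMOVED_TOTAL / CREATED_TOTAL

for who, count in removed_by_count.items():
    print(f"{who:>10}: ~{count / 1e6:.1f} million removals")
print(f"Spam removals: ~{spam_removals / 1e6:.1f} million")
print(f"Removed share of created content: {removal_rate:.1%}")
```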

We should maybe clarify here what Reddit means when it’s talking about admins and mods:

- Admins are Reddit employees and responsible for the wider Reddit platform.

- Moderators (mods) are the users who manage and run their respective communities (subreddits).

As with any community, Reddit is rife with its own lingo.

Additional content moderation challenges ahead?

Reddit has big plans for growing its international user base, which is great. But it may also present quite a challenge and some serious growing pains.

More languages and cultural norms

Traditionally, Reddit has been mostly an English-language community, but this is slowly changing.

With a growing set of supported languages and regions comes different cultural norms. This can be both a blessing and a curse. It definitely adds complexity, but it’s also good to tie the world together a bit more and add diversity.

This international growth will most likely complicate content moderation, since multilingual, multicultural content moderation isn’t in any way easy. We know this because we handle this for many of our clients, and it takes significantly more care than moderating a “monoculture.”

We’re not saying that Reddit isn’t multicultural today, by the way. Just that it’s bound to become more multicultural with increased internationalization.

Additional scalability challenges

Another challenge for Reddit moving forward is sheer scale.

More effective and intelligent automation will be needed to handle platform-wide moderation while still being flexible enough to handle the different moderation norms for subreddits.

We are convinced that Reddit is on top of this, but it’s worth noting, for sure.

A more attractive target for spam and disinformation

And finally something that isn’t in any way new or unique to Reddit, but will be a growing problem: With more size and influence, the platform becomes an increasingly desirable target for various forms of spam content.

This spam can even find its way into Google’s index, which is unfortunate for everyone involved (except the spammers).

For examples of how spam has gotten through Reddit’s moderation layers, look no further than this recent study by Glen Allsopp documenting some of these problems as late as mid-2024. That study isn’t just about Reddit, but since Reddit content is so prominent in search results now, it’s a good example of what can go wrong.

Attempts at spreading disinformation and other types of intentional abuse and fraud are likely also going to increase.

A note on Reddit’s growth engines

While we’re talking about Reddit’s growth, it’s worth a brief aside looking at why they are growing so fast now. Last year (2024) looks to have been an amazing year for them, and something clearly changed in 2023.

If Reddit continues to grow at its current pace, in 2025 they will add roughly another 50 million DAUq, landing a good bit north of 150 million daily users/visitors. They will most likely have ended 2024 with an average over 100 million.
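The projection above boils down to a simple growth calculation. Here it is as a sketch, using the round numbers from the paragraph ("over 100 million" treated as a flat 100, and "roughly another 50 million" as 50). The function names are ours, and this is naive extrapolation rather than a forecast.

```python
def implied_growth_rate(base: float, added: float) -> float:
    """Growth rate implied by adding `added` users on top of `base`."""
    return added / base


def project(base: float, growth_rate: float, years: int = 1) -> float:
    """Compound `base` forward by `growth_rate` for a number of years."""
    return base * (1 + growth_rate) ** years


BASE_2024 = 100.0  # million DAUq; "over 100 million", treated as a floor
ADDED_2025 = 50.0  # million DAUq; "roughly another 50 million"

rate = implied_growth_rate(BASE_2024, ADDED_2025)
projected_2025 = project(BASE_2024, rate)

print(f"Implied growth rate: {rate:.0%}")
print(f"Projected DAUq: {projected_2025:.0f} million")
```

Because the base is a floor, the real outcome at the same growth rate would land somewhat higher, which is where the "good bit north of 150 million" comes from.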

So what’s behind this?

Let’s get the biggest mover out of the way immediately; a lot of Reddit’s recent growth comes from Google. In fact, the boost from search traffic has been remarkable.

Google made significant changes to its algorithms starting in the fall of 2023 that give user-generated content much more weight, and Reddit has been one of the primary beneficiaries. That, in addition to the direct content deals between Reddit and Google, is definitely worthy of note.

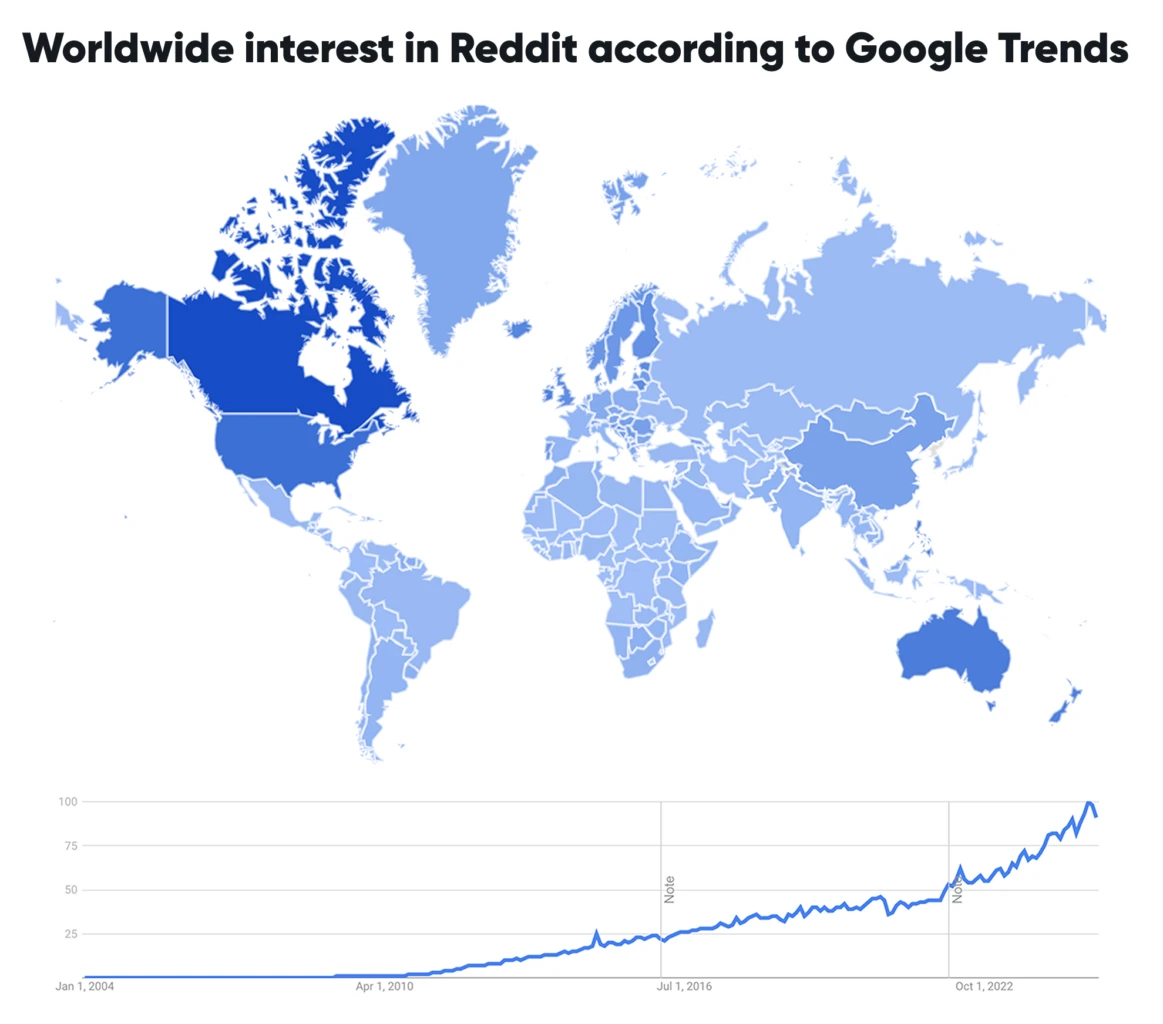

Here’s a pretty telling chart from Semrush showing Reddit’s estimated worldwide organic search traffic over time:

Note how this directly correlates with the boost to Reddit’s growth rate in Q3 2023 (shown earlier in this article). That’s when the hockey stick moment happened.

Organic traffic took a slight hit early in 2025 but it remains to be seen if it’s temporary or not.

It’s also quite clear that Reddit has gone all-in on SEO and is trying to cover as many languages as possible, not just English. The company started translating its content at the beginning of 2024, first with French but now adding several other languages like Spanish, Portuguese, Italian, German, and many others.

Since Reddit is using machine translation (AI / LLM-based), translations are automated and should be able to scale and be indexed by search engines, showing up in search results in even more markets.

This will likely be a big driver for Reddit’s international growth moving forward, at least as long as Google continues to give added weight to user-generated content and Reddit specifically. It’s likely to widen Reddit’s market in general as it becomes a more multilingual platform.

It’ll be interesting to see what this map looks like in a few years if Reddit’s translation initiatives prove truly successful. The current trajectory shows no signs of slowing down, does it?

It should be noted that that map is normalized. It shows interest as the share of all queries in each region, so it’s roughly “adjusted for size” and not a reflection of absolute numbers.

Also, what’s up, Canada? You seem incredibly interested in Reddit. 😃

Reddit is also leaning into AI and leveraging their existing knowledge base (i.e. content produced by its user base) with the recently announced Reddit Answers. It remains to be seen how effective these new initiatives will be in the long run, but centering efforts around their community like this makes perfect sense.

Learnings from Reddit’s approach to content moderation

Trust and safety is a beast to handle well while keeping users happy, safe, and engaged.

While no content moderation solution is ever 100% effective—sooner or later something will slip through that shouldn’t, especially at scale—it always helps to adapt the solution to your specific circumstances and opportunities. A customized solution is your friend.

So what can we learn from how Reddit has done it? Here are a few observations:

- Segment your user-generated content. You don’t need a single pipeline for everything. What you need is to efficiently segment content and use the most effective and suitable moderation methods for each type (and circumstance, for example if it needs to be real time or not).

- Make it easy for users to help you with basics. Do you have a large, involved user base? Help them help you, so to speak, even if it’s something as simple as making it easy to flag content and report users that behave badly, upvote or downvote, etc.

- Empower community leaders. When you run a community, or at least have community elements, empower the community to moderate itself as much as possible (with tools and clear guidelines).

- Clearly defined rules. Have your line in the sand with a comprehensive set of site-wide rules regarding what is and isn’t allowed on your platform. And don’t have them hidden away where users can’t find them.

- Clear enforcement rules for abuse. Consequences for bad behavior shouldn’t come as a surprise. “If X happens, we will do Y.”

- Automation is absolutely vital for scale. We’ve seen this over and over again with the clients we work with. You need to automate. If you can’t proactively moderate the vast majority of your user-generated content with filters and AI, that process will slow to a syrupy crawl. Human moderators will likely still be necessary for edge cases, but you shouldn’t need an army of them.

We’d also like to mention that the good folks over at Reddit should be commended for doing a really good job with documentation for their users and volunteer moderators.
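As a rough illustration of the first point in the list above, segmenting content can be as simple as a routing table that maps each content type to its own set of checks. The content types below mirror Reddit's four UGC categories, but the check names and the real-time flags are hypothetical placeholders, not a description of how Reddit actually does it.

```python
from enum import Enum


class ContentType(Enum):
    POST = "post"
    COMMENT = "comment"
    PRIVATE_MESSAGE = "private_message"
    CHAT = "chat"


# Hypothetical routing table: which checks run for each content type, and
# whether they have to run in real time (before the content is delivered).
PIPELINES = {
    ContentType.POST: {"real_time": False, "checks": ["spam_filter", "community_rules"]},
    ContentType.COMMENT: {"real_time": False, "checks": ["spam_filter", "community_rules"]},
    ContentType.PRIVATE_MESSAGE: {"real_time": True, "checks": ["spam_filter", "abuse_filter"]},
    ContentType.CHAT: {"real_time": True, "checks": ["abuse_filter"]},
}


def route(content_type: ContentType) -> dict:
    """Look up the moderation pipeline for a given content type."""
    return PIPELINES[content_type]


print(route(ContentType.CHAT))
```

The point of the design is that each pipeline can evolve on its own: tightening a chat filter doesn't force any change to how posts are handled.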

For example, this is their help center page for community moderators, where you can easily drill down and learn the specific details you need. It’s basically a classic knowledge base, but well populated with relevant information and adapted to Reddit’s style and needs. It has overarching information, links to moderation-related communities, a great set of FAQs, various tips, links to Reddit’s system status, and more.

To always be helpful is such a good mantra to keep in mind, isn’t it?

That’s it for this little look at Reddit’s content moderation stats and practices. We hope you found it as interesting as we did.

For context, content moderation is near and dear to us. It’s our industry, what we do here at Besedo, 24/7. So we love looking into how various platforms handle trust and safety, especially at scale like this. There’s always something to learn.

And we think Reddit is a pretty cool place on the internet, so there’s that, too. 🙂

Data sources: Reddit transparency reports. The quarterly reports and shareholder letters released since their IPO. Their S-1 filing. This amusing little retrospective about the early days of Reddit. And some other bits and pieces from here and there. Reddit really should be commended for the amount of data they provide to those who are willing to dig a little deeper, like we did here.
