
The Paris 2024 Olympics are just around the corner. A quick Google search about Olympic scams will likely turn up articles about scandals and controversies involving athletes. But while the world’s top athletes prepare to dazzle us, scammers are gearing up for their own event – targeting your platform and users.

Whether you’re running a marketplace, a sharing economy service, or any online platform, they’re coming for you. The trust and safety of your users are at stake. So, how can you fight back?

Ticket scams: Stay one step ahead

One of the most common scams is the sale of fake tickets. More than 13 million tickets are on sale for the Olympic and Paralympic Games, making them a prime target. Scammers create convincing websites that mimic official ticketing sites with Olympic logos and professional designs. Victims purchase tickets only to find out later that they don’t exist.

For example, during the Beijing 2008 Olympics, numerous fake ticket websites were reported, tricking consumers out of their money without delivering any tickets.

The French authorities have already identified 44 fraudulent sites selling fake tickets for the Paris 2024 Olympics. This early detection highlights the ongoing risk and the need for vigilance from consumers and platform owners alike.

Recently, the online ticket marketplace Viagogo ‘mistakenly’ listed resale tickets for England football matches at this summer’s Euro 2024 tournament in Germany, even though the unauthorized resale of football tickets is illegal in the UK. When clear rules are in place, content moderators can help your platform prevent this from happening in the first place.

Okay, so what can we do to protect our platforms and, ultimately, the end user?

Naturally, we want to talk about something near and dear to us.

Content moderation

We at Besedo want to help, and we know from experience that content moderation is your secret weapon against fraudulent content on digital platforms. By combining advanced technology with dedicated moderation teams, you can spot and eliminate scams before they affect your users.

There are, of course, several ingredients to this not-so-secret sauce. But bear with us while we explain.

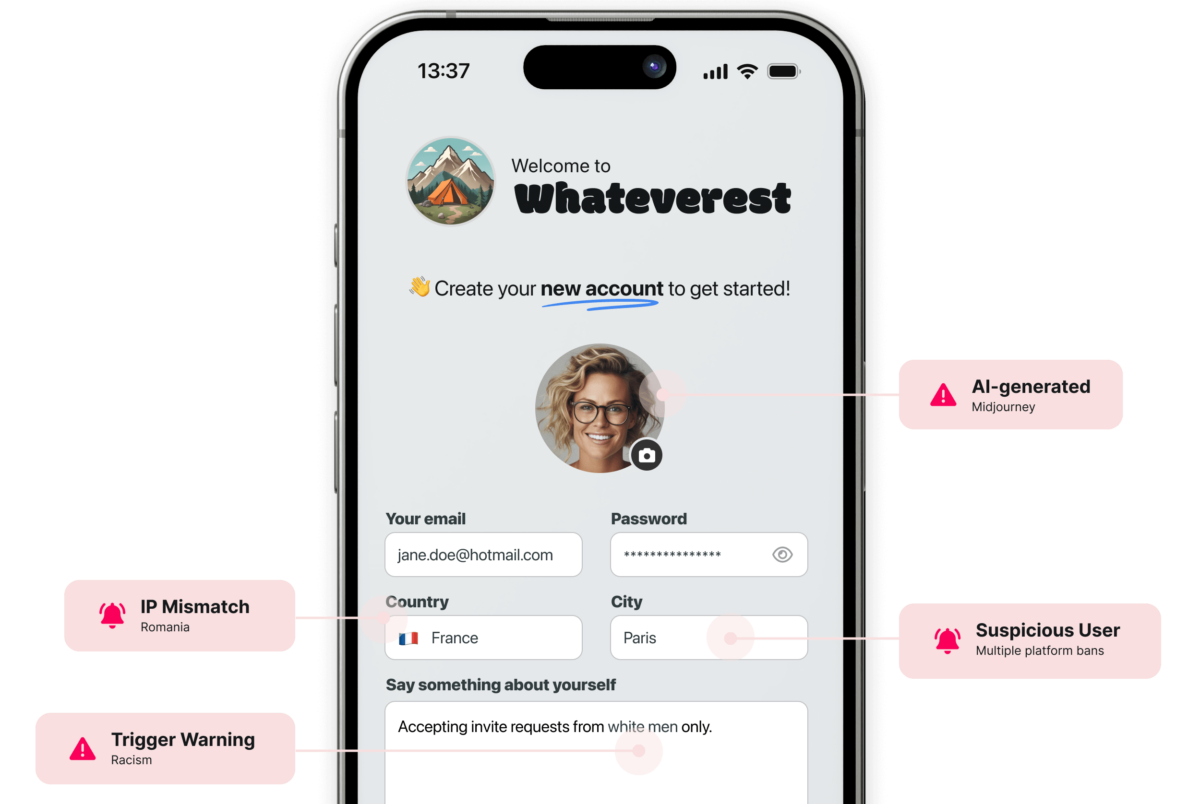

It starts with account creation

Content moderation isn’t just about flagging inappropriate content. It can also involve sophisticated machine learning algorithms that analyze online behavior patterns to detect anomalies. These algorithms can quickly spot suspicious activity, such as a high volume of logins from multiple locations, which may indicate fraud.
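To make the login example concrete, here is a minimal Python sketch. It uses a simple sliding-window threshold rule rather than a trained machine learning model, and it assumes each login event already carries a country derived from IP geolocation upstream. The `LoginEvent` type, the function name, and the default thresholds are hypothetical and would need tuning on real traffic.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Set


@dataclass(frozen=True)
class LoginEvent:
    account_id: str
    timestamp: float  # seconds since the Unix epoch
    country: str      # assumption: resolved from the login IP upstream


def flag_multi_location_logins(
    events: Iterable[LoginEvent],
    window_seconds: float = 3600,  # hypothetical: consider the last hour
    max_logins: int = 20,          # hypothetical: more logins than this is suspicious
    max_countries: int = 3,        # hypothetical: more countries than this is suspicious
) -> Set[str]:
    """Return IDs of accounts that exceed either threshold within any time window."""
    flagged: Set[str] = set()
    recent: Dict[str, Deque[LoginEvent]] = defaultdict(deque)
    for event in sorted(events, key=lambda e: e.timestamp):
        window = recent[event.account_id]
        window.append(event)
        # Drop this account's logins that have fallen outside the sliding window.
        while event.timestamp - window[0].timestamp > window_seconds:
            window.popleft()
        countries = {e.country for e in window}
        if len(window) > max_logins or len(countries) > max_countries:
            flagged.add(event.account_id)
    return flagged


# Usage: acct-1 logs in from four countries within 30 minutes; acct-2 does not.
events = [
    LoginEvent("acct-1", 0, "FR"),
    LoginEvent("acct-1", 600, "NG"),
    LoginEvent("acct-1", 1200, "BR"),
    LoginEvent("acct-1", 1800, "US"),
    LoginEvent("acct-2", 0, "FR"),
    LoginEvent("acct-2", 7200, "FR"),
]
print(flag_multi_location_logins(events))  # {'acct-1'}
```

A rule like this is easy to explain to moderators, which makes it a reasonable baseline; a learned anomaly model would replace the fixed thresholds with ones fitted to each account’s historical behavior.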

By examining historical behavior, these systems can identify patterns consistent with scam activities, allowing you to take preemptive action against fraudsters.

Metadata and IP address analysis

Moderators also examine metadata, such as IP addresses, to uncover inconsistencies. For example, suppose someone uses an old Android phone and claims to rent out a beautiful villa in Paris during the Olympic Games.

However, content moderators detect that the user’s IP address is in Nigeria. That’s somewhat of a red flag, right?

Platforms can scrutinize metadata to verify user claims and weed out those who don’t pass the smell test. This scrutiny helps ensure that only legitimate users can operate on the platform.
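The villa example boils down to comparing what a user claims with what their metadata shows. The sketch below assumes a hypothetical lookup table in place of a real GeoIP database (it uses the reserved documentation address ranges from RFC 5737), and the `Listing` fields are illustrative, not any platform’s actual schema.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical IP-to-country table for illustration only. These are reserved
# documentation networks (RFC 5737); a real system would query a GeoIP database.
GEO_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "FR",
    ipaddress.ip_network("198.51.100.0/24"): "NG",
    ipaddress.ip_network("203.0.113.0/24"): "US",
}


def country_for_ip(ip: str) -> Optional[str]:
    """Look up the country for an IP address, or None if it is not in the table."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return None


@dataclass
class Listing:
    listing_id: str
    claimed_country: str  # where the user says the property is
    ip: str               # IP address the listing was created from


def metadata_red_flags(listing: Listing) -> List[str]:
    """Return human-readable flags for a moderator; an empty list means no flags."""
    flags: List[str] = []
    ip_country = country_for_ip(listing.ip)
    if ip_country is None:
        flags.append("ip_location_unknown")
    elif ip_country != listing.claimed_country:
        flags.append(f"ip_country_mismatch:{ip_country}!={listing.claimed_country}")
    return flags


# Usage: a Paris villa listed from an IP that geolocates to Nigeria.
print(metadata_red_flags(Listing("villa-paris", "FR", "198.51.100.7")))
# ['ip_country_mismatch:NG!=FR']
```

A mismatch is a signal for a moderator to review, not proof of fraud: owners travel, and VPNs exist. In practice such flags are usually combined with other signals, such as device age or account history, before any action is taken.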

Leveraging machine learning and AI

Machine learning and AI are game changers for content moderation. These technologies swiftly process vast amounts of data, accurately identifying and flagging fraudulent accounts.

AI-driven tools can detect repeated use of the same images across different scam listings, helping you shut down fraudulent accounts quickly and efficiently. AI can be used to fight back against AI, you know. The sophistication of AI-created photos today is astonishing, but some uses of them are also illegal.
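One common way to catch reused photos is perceptual hashing. The sketch below implements average hashing (aHash), a well-known technique, but not necessarily what any particular moderation tool uses. It assumes each image has already been decoded and downscaled to an 8x8 grayscale grid (for example with Pillow’s `Image.convert("L")` and `Image.resize((8, 8))`), so the code works on plain lists of pixel values. The 5-bit distance threshold is an assumption.

```python
from typing import Dict, List, Sequence, Tuple


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """Average hash (aHash) of a small grayscale image, e.g. 8x8.

    Each bit is 1 if the pixel is brighter than the image's mean brightness.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_reused_images(hashes: Dict[str, int], max_distance: int = 5) -> List[Tuple[str, str]]:
    """Return pairs of listing IDs whose image hashes are near-duplicates."""
    ids = sorted(hashes)
    pairs = []
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if hamming_distance(hashes[a], hashes[b]) <= max_distance:
                pairs.append((a, b))
    return pairs


# Usage: a gradient image, a slightly brightened copy, and an unrelated (inverted) image.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[v + 3 for v in row] for row in original]
inverted = [[252 - v for v in row] for row in original]
hashes = {
    "listing-a": average_hash(original),
    "listing-b": average_hash(brightened),
    "listing-c": average_hash(inverted),
}
print(find_reused_images(hashes))  # [('listing-a', 'listing-b')]
```

Because each bit depends on a pixel relative to the image’s mean, small brightness tweaks or recompression usually leave the hash unchanged, which an exact byte hash such as SHA-256 would miss. Comparing every pair is quadratic, so a large platform would index the hashes (for example in a BK-tree) instead.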

But the real secret sauce is how you prepare your AI for things it has never seen before. In early 2020, no platform could have had properly trained models for discussions about COVID-19, vaccines, and the like. The same goes for the Summer Olympic Games, which come around only once every four years.

When these things happen, and they happen faster than we anticipate, it’s good to have someone like Besedo who can quickly remedy the situation and have rule-based automation up and running instantly.

Proactive measures and real-time alerts

Effective content moderation involves being proactive. Setting up real-time alerts for suspicious activities in your chats allows moderation teams to act immediately.

You can also filter for specific terminology. Examples include chatter about moving conversations off your platform, payment, harassment, extortion, and a whole range of other topics that clearly violate your terms of service.
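A basic version of terminology filtering with real-time alerts can be sketched with regular expressions. The rule categories mirror the examples above, but the patterns are hypothetical placeholders. A production rule set would be tuned per platform and language, and would typically run alongside machine learning classifiers rather than replace them. The `alert` callback stands in for whatever notifies your moderation team.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Rule:
    category: str
    pattern: re.Pattern


# Hypothetical example rules; real rule sets are tuned per platform and language.
RULES = [
    Rule("off_platform", re.compile(r"\b(whatsapp|telegram|text me at|email me)\b", re.IGNORECASE)),
    Rule("payment", re.compile(r"\b(western union|wire transfer|gift cards?|crypto)\b", re.IGNORECASE)),
    # Rough phone-number shape: a run of at least nine digits, spaces, or dashes.
    Rule("contact_details", re.compile(r"\+?\d[\d\s-]{7,}\d")),
]


def check_message(text: str) -> List[str]:
    """Return the category of every rule the chat message matches, in rule order."""
    return [rule.category for rule in RULES if rule.pattern.search(text)]


def on_new_message(text: str, alert: Callable[[str, List[str]], None]) -> None:
    """Call `alert` immediately if a message trips any rule (the real-time path)."""
    hits = check_message(text)
    if hits:
        alert(text, hits)


# Usage: print an alert for a message that tries to move off-platform and pay by wire.
def notify(text: str, categories: List[str]) -> None:
    print(f"ALERT {categories}: {text!r}")


on_new_message("Let's continue on WhatsApp, I only take wire transfer", notify)
```

Keeping rules as data rather than hard-coded `if` statements makes it quick to add new ones when an event like the Olympics brings unfamiliar scam vocabulary.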

Final thoughts

Sure, scammers can do a lot to contaminate your platform, but let’s end on a more positive note: help is available. You don’t have to do this on your own; it’s probably not even wise to try to fight every battle yourself. The first step you can take is to reach out to a content moderation service like Besedo for a friendly conversation with no strings attached. We’re happy to share our expertise.

Ahem… tap, tap… is this thing on? 🎙️

We’re Besedo and we provide content moderation tools and services to companies all over the world. Often behind the scenes.

Want to learn more? Check out our homepage and use cases.

And above all, don’t hesitate to contact us if you have questions or want a demo.