
Every forward-looking business considers how to implement automation strategies throughout its different departments. Unsurprisingly, content moderation, which traditionally required a lot of manual work, has been one of the first areas turned upside down by the emerging automation trend.

But smart business owners also realize that for automation to be efficient, it must be applied correctly. Even though there are considerable cost-savings to be had by adding automation to your moderation process, you will only reap the monetary benefits if you choose the right approach.

Bill Gates famously said:

“The first rule of any technology used in a business is that automation applied to an efficient operation will magnify the efficiency. The second is that automation applied to an inefficient operation will magnify the inefficiency.”

We agree with Gates and will also add:

“The third rule is that the wrong type of automation, applied to an efficient or inefficient operation, will be very costly, both in resources and potentially to brand image.”

Why? Because each type of automation has its strengths and weaknesses, which will benefit businesses differently depending on volumes, budget, and growth stage.

We will go through these characteristics now.

Filters

Filters were part of the first wave of automation efforts applied by site owners desperate to manage content and keep users safe.

In 2023, filters might seem like an inflexible and rigid approach to moderation. However, they remain a useful content moderation tool, both as a cheap entry point and as an additional layer working alongside AI to keep your site clean.

How do automation filters work and when are they most effective?

If applied and maintained correctly, filters can be powerful. In our tool, filters, or rules as we call them, can be made quite comprehensive. You can design them to look at the price, IP, description, or any other available data field. On top of this, our tool lets you create lists, so the values a rule checks can be updated easily without touching the rule structure.
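As a rough illustration of the idea of rules that read data fields and refer to separately maintained lists, here is a minimal Python sketch. It is not how our tool is implemented; the field names (`ip`, `price`, `description`), the list contents, and the `Rule` and `apply_rules` names are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical lists. They live outside the rules, so moderators can
# update them without editing any rule logic.
BLOCKED_IPS = {"203.0.113.7"}
BANNED_PHRASES = {"wire transfer only", "western union"}


@dataclass
class Rule:
    name: str
    matches: Callable[[Dict], bool]  # True when the item should be flagged


# Each rule inspects one data field of the item (all fields are assumed).
RULES = [
    Rule("blocked_ip", lambda item: item.get("ip") in BLOCKED_IPS),
    Rule(
        "banned_phrase",
        lambda item: any(
            phrase in item.get("description", "").lower()
            for phrase in BANNED_PHRASES
        ),
    ),
    Rule("suspicious_price", lambda item: item.get("price", 0) <= 0),
]


def apply_rules(item: Dict) -> List[str]:
    """Return the names of every rule the item triggers."""
    return [rule.name for rule in RULES if rule.matches(item)]
```

Because the rules only look up the lists at the moment they run, adding a phrase to `BANNED_PHRASES` changes what gets flagged immediately, while the rule definitions stay untouched.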

Filter automation is great for cases where you want to target precise information like user data.

Filters can add a relatively inexpensive first layer of defense against scammers and other undesirable users. Filters can be created to target unwanted content that follows a recurring pattern, which can be precisely described and thus formulated as a rule.

Another benefit of filters is that they are straightforward to change and set up. You don’t need large datasets to train the filters; you enter a new rule based on your needs and are ready to go.

The drawback of filters is that you have to update them continuously, which means you are often in an ongoing arms race with scammers and other users intent on misusing your site.

Generic Machine Learning models

Most offerings for automation on the market today, which aren’t filters, fall into this category. Generic machine learning models are algorithms created from general data and targeted at issues that are common to a wide variety of sites.

How do generic Machine Learning models work and when are they most effective?

The difference between generic and tailor-made machine learning models is primarily in the datasets used to train the algorithms. Whereas a tailor-made solution is built from your specific data, a generic model is trained on an artificially created dataset meant to cover all variables of the problem it is trying to solve.

A generic model meant to deal with swear words would, as such, be presented with a dataset in which 50% of the documents are full of swear words and 50% are clean.
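To make the 50/50 split concrete, the following Python sketch builds a balanced training set from two pools of documents. It is only an illustration of the idea; the function name `balanced_sample` and the labelling convention (1 for profane, 0 for clean) are assumptions, not a description of how any vendor assembles its data.

```python
import random
from typing import List, Tuple


def balanced_sample(
    profane: List[str], clean: List[str], seed: int = 0
) -> List[Tuple[str, int]]:
    """Return a shuffled dataset with equal numbers of profane (1) and clean (0) documents."""
    # Use the size of the smaller pool so both classes end up equally represented.
    n = min(len(profane), len(clean))
    rng = random.Random(seed)
    dataset = [(text, 1) for text in rng.sample(profane, n)]
    dataset += [(text, 0) for text in rng.sample(clean, n)]
    rng.shuffle(dataset)
    return dataset
```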

The main problem with generic models is that they tend to be very broad, resulting in low accuracy rates.

One of the challenges in building datasets to train generic machine learning models is that there is no general agreement on what constitutes, for instance, foul language. Different communities will have different levels of sensitivity towards profanities; sometimes, whether a word is terrible can even be a matter of context. With a generic model, you will get an algorithm tuned to what is generally accepted as swearwords, which might not fit your site.

If your community isn’t highly specialized, generic machine learning models can be good for getting the worst content off your site quickly and at a very affordable price. Make sure you are aware of the limitations and comfortable knowing that some bad content will slip through and some genuine posts will get caught.

Generic machine learning models are bound to create many more false positives than tailor-made algorithms and customized filters.
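If you want to put numbers on "bad content slipping through" and "genuine posts getting caught", one common way is to compare a model's decisions with human-reviewed labels. The sketch below assumes you have such a labelled sample; the function names are our own for the example, and `True` is assumed to mean "reject".

```python
from typing import Dict, Sequence


def confusion_counts(predicted: Sequence[bool], actual: Sequence[bool]) -> Dict[str, int]:
    """Count outcomes; True means 'reject' for both the model and the human label."""
    pairs = list(zip(predicted, actual))
    return {
        "tp": sum(1 for p, a in pairs if p and a),          # bad content caught
        "fp": sum(1 for p, a in pairs if p and not a),      # genuine post caught (false positive)
        "fn": sum(1 for p, a in pairs if not p and a),      # bad content that slipped through
        "tn": sum(1 for p, a in pairs if not p and not a),  # genuine post let through
    }


def accuracy(counts: Dict[str, int]) -> float:
    total = sum(counts.values())
    return (counts["tp"] + counts["tn"]) / total if total else 0.0


def false_positive_rate(counts: Dict[str, int]) -> float:
    genuine = counts["fp"] + counts["tn"]
    return counts["fp"] / genuine if genuine else 0.0
```

Running the same labelled sample through two candidate solutions gives a like-for-like comparison of how many genuine posts each one would wrongly catch.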

Tailor-made Machine Learning models

Unlike generic models, tailor-made machine learning algorithms are trained using data specific to your site.

This allows the model to operate at a very high accuracy level. If we take the example of swearing, we avoid false positives from words generally labeled as profanity but acceptable in the context of your community, which is important for effective profanity filtering.

To illustrate the point, let’s say that you are running a site for medical professionals where they can buy and sell equipment while also discussing their trade and exchanging knowledge. On such a site, using words for genitalia wouldn’t be out of place or considered profane. Posts containing those words would very likely get caught by a generic model. A tailor-made algorithm would know that those posts are generally allowed through, whereas those containing the f-word are still rejected as profane.

How do tailor-made Machine Learning models work and when are they most effective?

When we create tailor-made machine learning models at Besedo, we work closely with our clients to ensure the best possible outcome. The bigger the dataset our client has available, the more accurate a solution we can create for them. Over time with tailor-made solutions, we can reach an accuracy of 99% with an automation rate of 90%, utterly unrivaled by either generic models or filter automation.
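The two figures measure different things, and a small sketch may help keep them apart. Here we assume that "automation rate" means the share of items decided without a human, that "accuracy" is measured on those automated decisions, and that the model outputs a score between 0 and 1 for how likely an item is to be bad. These definitions, the thresholds, and the function names are assumptions for illustration, not a description of Besedo's implementation.

```python
from typing import List, Tuple


def route(
    items: List[Tuple[float, bool]],
    reject_above: float = 0.95,
    approve_below: float = 0.05,
) -> Tuple[List[Tuple[bool, bool]], List[Tuple[float, bool]]]:
    """Split (score, is_bad) items into automated decisions and a manual queue."""
    automated, manual = [], []
    for score, is_bad in items:
        if score >= reject_above:
            automated.append((True, is_bad))    # confident enough to reject
        elif score <= approve_below:
            automated.append((False, is_bad))   # confident enough to approve
        else:
            manual.append((score, is_bad))      # uncertain: send to a moderator
    return automated, manual


def automation_rate(automated: list, manual: list) -> float:
    total = len(automated) + len(manual)
    return len(automated) / total if total else 0.0


def automated_accuracy(automated: List[Tuple[bool, bool]]) -> float:
    if not automated:
        return 0.0
    correct = sum(1 for rejected, is_bad in automated if rejected == is_bad)
    return correct / len(automated)
```

Under these assumptions, tightening the thresholds tends to raise accuracy while lowering the automation rate, because more items fall into the uncertain band and go to manual review.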

A tailor-made machine-learning solution can reach high accuracy and automation levels but requires significant data volumes. If you run a small website and are just starting, you may not have enough valid data to train the algorithms efficiently. In such cases, your better choice might be filters or a generic machine-learning solution.

Choosing the correct setup for you

Now that we have been through the different automation solutions, it is time to find out which fits your needs best.

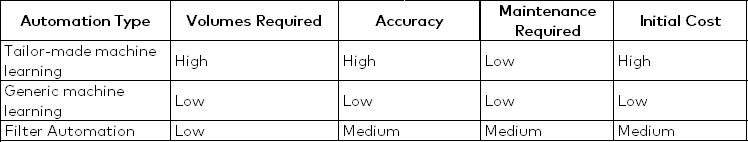

At this point, it probably comes as no surprise that it comes down to volumes, accuracy, and budget.

In the table below, we are illustrating elements that impact the choice of automation type. Based on this matrix, you can find a good guideline for which automation type will work best for your business.

When to choose a tailor-made model

If your monthly volumes are 100k items or more, then a tailor-made machine-learning model is the way to go. You have sufficient volumes, so it is possible to create accurate models.

Furthermore, if you are currently moderating your content manually, you will make your money back very fast, even with an investment in a tailor-made solution. Tailor-made machine learning models will allow you to manage your content accurately.

When to choose filter automation

If your volumes are less than 100k items per month and avoiding false positives is very important to you, then filter automation makes sense for your business. You can tweak the wordlists and rules until they match your site rules, and you can even add manual moderation to review items caught by the filter to ensure a higher accuracy level.

When to choose generic Machine Learning

If your volumes are less than 100k items per month and you can live with an accuracy as low as 75%, generic machine-learning models might be a good solution. If you have to choose between no moderation and a generic algorithm, it is generally better to apply some level of moderation, as long as the solution doesn't catch too much content published by genuine users.

It might even be that the best solution for you is a combination of the three in a tailored setup to fit your business’s specific and varied challenges.
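The guidelines in this section can be condensed into a few lines of Python. This is only a sketch of the rules of thumb stated above: the function name, the parameter names, and the fallback to "combination" for cases the guidelines do not cover are assumptions, and the real decision also depends on budget.

```python
def suggest_automation(
    monthly_items: int,
    false_positives_critical: bool,
    min_required_accuracy: float = 0.75,
) -> str:
    """Map the article's rules of thumb to a suggested automation type."""
    if monthly_items >= 100_000:
        # Enough volume to train an accurate site-specific model.
        return "tailor-made model"
    if false_positives_critical:
        # Rules and wordlists can be tuned until they match the site rules.
        return "filters"
    if min_required_accuracy <= 0.75:
        # Accuracy as low as 75% is acceptable.
        return "generic model"
    # Assumption: anything else calls for a mixed, tailored setup.
    return "combination"
```

For example, `suggest_automation(40_000, True)` returns `"filters"`, while a site with 250,000 items a month gets `"tailor-made model"` regardless of the other inputs.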

Are you still in doubt about which automation type fits your business best? One of our solution designers will be happy to analyze your business needs with you to determine what option will benefit you the most.
