Recently Facebook came under fire for removing a Pulitzer Prize-winning photo known as Napalm Girl. The picture hit their moderation filters because it features a naked girl, and Facebook has a strict no-nudity policy, so strict that they have previously been in trouble for removing pictures of breastfeeding women.

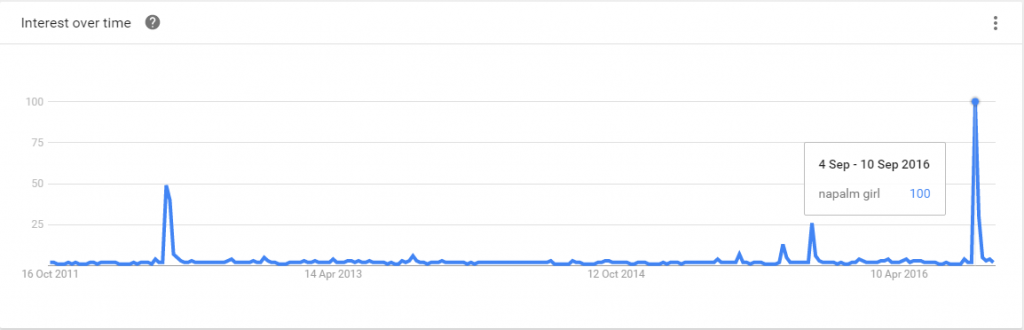

The incident of Facebook removing Napalm Girl went viral, and at the time of writing, it still shows up as the third result in Google even though it occurred over a month ago. Searches for the term have also spiked to an all-time high, as seen in the screenshot from Google Trends below:

Search spike for the term “Napalm Girl” after the Facebook moderation incident

Whatever you feel about Facebook’s reasoning behind removing the picture, you definitely want to avoid this kind of bad media attention.

On the other hand, you don’t want to end up at the other end of the spectrum, where the media berates you for providing a digital space for the exchange of child pornography.

So how do you handle this very sensitive balancing act?

Moderation is censorship

Let’s first address a claim that has come up a lot in the wake of the Napalm Girl case. Is moderation censorship?

According to Merriam-Webster, a censor is: “a person who examines books, movies, letters, etc., and removes things that are considered offensive, immoral, harmful to society, etc.”

That sounds an awful lot like the job of a content moderator and the purpose of content moderation, doesn’t it?

With that definition in mind, moderation is censorship, but there is a but. The word censorship is laden with negative connotations mostly because in the course of history, it has been used to oppress people and slow down progress.

But censorship in the form of moderation is not always bad. It just has to be applied right. To do so, you need to understand your target audience and act in their best interest (not what you think their best interests are, but what they really are).

Not convinced? Let’s consider the case of a chat forum frequented by young adults. If someone starts harassing another user, should we not step in? In the spirit of free speech and to avoid censorship, are we prepared to allow cyber bullying to rage uncontrolled?

What about discrimination? Should homophobic or racist statements be allowed online to appease a false understanding of freedom of speech? Will that do anything positive for our society? Not likely! So there are very valid and altruistic reasons for moderating or censoring, if you will.

And that is not even touching upon the issue of legality. Some things have to be removed because they simply aren’t legal and you, as the site owner, could be held liable for inaction.

How to avoid users feeling censored

You were probably on board with all this already, but the real question is: how do you get buy-in from your users?

You must ensure that you and your site adhere to these five key elements.

- Understanding of your target audience and their expectations

- Transparency in rules and intent

- Consistency

- Managing support and questions

- Listen and adapt

Understand Your Target Audience

This is super important because your moderation rules should look significantly different if you run a dating site instead of a chat forum for preteens. And even within the same site category, there can be huge deviations. Moderating away swear words would probably feel like strict censorship on a general dating site, but it would make complete sense if your target audience is fundamentalist Christians.
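As a rough illustration of audience-specific rules, the sketch below keeps one rule set per audience and checks a text against the set that applies. The profile names, the placeholder word list, and the `find_blocked_terms` helper are all assumptions made for this example, not part of any real moderation product.

```python
import re

# Hypothetical audience profiles; the names and word lists are placeholders.
AUDIENCE_RULES = {
    "general_dating": {"blocked_terms": set()},
    "preteen_forum": {"blocked_terms": {"darn", "heck"}},
}


def find_blocked_terms(text: str, audience: str) -> list[str]:
    """Return the blocked terms from the audience's rule set that appear in text."""
    blocked = AUDIENCE_RULES[audience]["blocked_terms"]
    # Lowercase and split into simple word tokens before matching.
    words = re.findall(r"[a-z']+", text.lower())
    return sorted({word for word in words if word in blocked})
```

The same message can pass on one site and be flagged on another, which is the point: the rules follow the audience, not the other way around.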

Transparency in Rules and Intent

It has always been a source of confusion to us why sites would choose not to share their reasoning behind the rules and policies they set in place. If those rules are created with the best interest of the target audience in mind, then sharing them should show users that they are understood and listened to.

But the fact is that very few companies are wholly transparent with their moderation process and policies.

The problem is that if users do not understand why certain moderation rules are enforced, those rules will feel a lot like censorship, and conspiracy theories tend to start. It is a great exercise to go through all your current moderation policies, check which of them you would not feel comfortable sharing, and really investigate why. Chances are the rules you wish to keep hidden are not in place for the benefit of your users, and if that is the case, you will have a hard time getting buy-in. Transparent rules also make removals easier to explain: when you remove something, you can simply refer to the relevant clause in your policies.

Now, some rules may be in place to keep the company profitable, but with a proper explanation, users can easily understand that without these rules the site would have to close down.

Consistency

The annoyance with Facebook for banning breastfeeding pictures caught fire because of their inconsistency in approach to pictures of women showing skin. On the one hand, they banned women in the very natural act of feeding their babies. On the other hand, they allowed women in skimpy bikinis with (from a user point of view) no hesitation.

If you don’t allow books on Adolf Hitler, then you need to ban all books about leaders who have committed genocide, so Stalin biographies are a no-go as well.

Managing Support and Questions

As anyone who works within customer care will know, how you handle customer feedback and complaints is vital to achieving customer satisfaction.

The same goes for getting user buy-in. When you remove content posted by a user, make sure they understand why, and answer any questions they raise clearly. You can even reference your rules. They are clear and transparent, after all, right?
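One way to make this routine is to never remove content without citing a published rule. The following is a minimal sketch under that assumption; the clause IDs, the clause wording, and the `build_removal_notice` helper are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass

# Hypothetical policy clauses; real IDs and wording would come from your published rules.
POLICY_CLAUSES = {
    "3.1": "Harassment of other users is not allowed.",
    "4.2": "Listings for illegal items are removed.",
}


@dataclass(frozen=True)
class RemovalNotice:
    content_id: str
    clause_id: str
    message: str


def build_removal_notice(content_id: str, clause_id: str) -> RemovalNotice:
    """Create a user-facing notice that cites the published clause behind a removal."""
    if clause_id not in POLICY_CLAUSES:
        # Refusing to remove without a published clause keeps every decision explainable.
        raise KeyError(f"No published policy clause with id {clause_id!r}")
    message = (
        f"Your post {content_id} was removed under rule {clause_id}: "
        f"{POLICY_CLAUSES[clause_id]} Reply to this message if you have questions."
    )
    return RemovalNotice(content_id, clause_id, message)
```

Because the notice is built from the same text users can read in your policies, support staff and moderators end up giving the same explanation for the same kind of removal.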

Listen and Adapt

You can’t predict the future! This means that you will never be able to account for all possible scenarios which might arise when moderating your site. New trends will pop up, laws might change and even a slight shift in your target audience could cause a need to review your policies.

The Facebook/Napalm Girl case is again a great example of this. On paper, it might make total sense to ban all photos of nude children, but in reality, the issue is a lot more complex. Try to understand why your users would post things like that in the first place. Are they proud parents showing their baby’s first bath, for instance? Then consider what negative consequences it could have for your users and for your site to have pictures like this live.

If you find that your policies are suddenly out of tune with user goals, laws, or your site direction, then make sure you adapt quickly and communicate the change and the reasoning behind it to your users as quickly and clearly as possible.

From word to action

Of the five key elements to secure user buy-in for moderation, consistency is the hardest one to achieve continuously.

It is very easy to set consistency as a goal, but a whole other thing to actually achieve it, particularly in moderation, where several factors make it more difficult:

- Rules and policies are often unclear, leaving space for many gray zone cases.

- A lot of moderation is done by humans. Each person might have a slightly different interpretation of the rules and policies in place.

- Moderation rules need to be updated continuously to keep up with current trends and events. This is time-consuming, so rules are often outdated.

- Moderating well requires expert knowledge of the field. Achieving expert level on any topic takes time and as such the expertise required for consistent moderation is not always available.

The best way to achieve consistency in moderation is to combine well-thought-through processes and training for your human experts with machine learning algorithms that help ensure the right decisions are made every time.
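A common way to combine the two is to let a model handle the clear-cut cases and send the gray zone to trained moderators. The sketch below assumes a model that outputs a probability that an item breaks the rules; the `route` function and both thresholds are illustrative assumptions that would need tuning against your own data.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


def route(violation_score: float,
          approve_below: float = 0.1,
          reject_above: float = 0.9) -> Decision:
    """Route an item based on a model's estimated probability that it breaks the rules."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score < approve_below:
        return Decision.APPROVE
    if violation_score > reject_above:
        return Decision.REJECT
    # Gray-zone cases go to a trained moderator instead of being decided automatically.
    return Decision.HUMAN_REVIEW
```

The automated part applies the same thresholds to every item, while the human part focuses on the cases where interpretation matters most.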

So is moderation censorship? We’ll leave that to semantic experts to answer, but as content moderation experts we boldly claim that moderation is needed today and will be even more so in the future. To get your users on board, make sure you cover all five key elements for user buy-in. Doing that will ensure that moderation is something your users appreciate rather than question.

This is Besedo

Global, full-service leader in content moderation

We provide automated and manual moderation for online marketplaces, online dating, sharing economy, gaming, communities and social media.